The new school year is upon us, and learners of all ages are looking for tech devices that have the capabilities they will need in the coming year. The tech marketplace can be confusing, and competing claims can be hard to navigate. The XPRTs are here to help! Whether you’re shopping for a new phone, tablet, Chromebook, laptop, or desktop, the XPRTs can provide reliable, industry-trusted performance scores that can cut through all the noise.

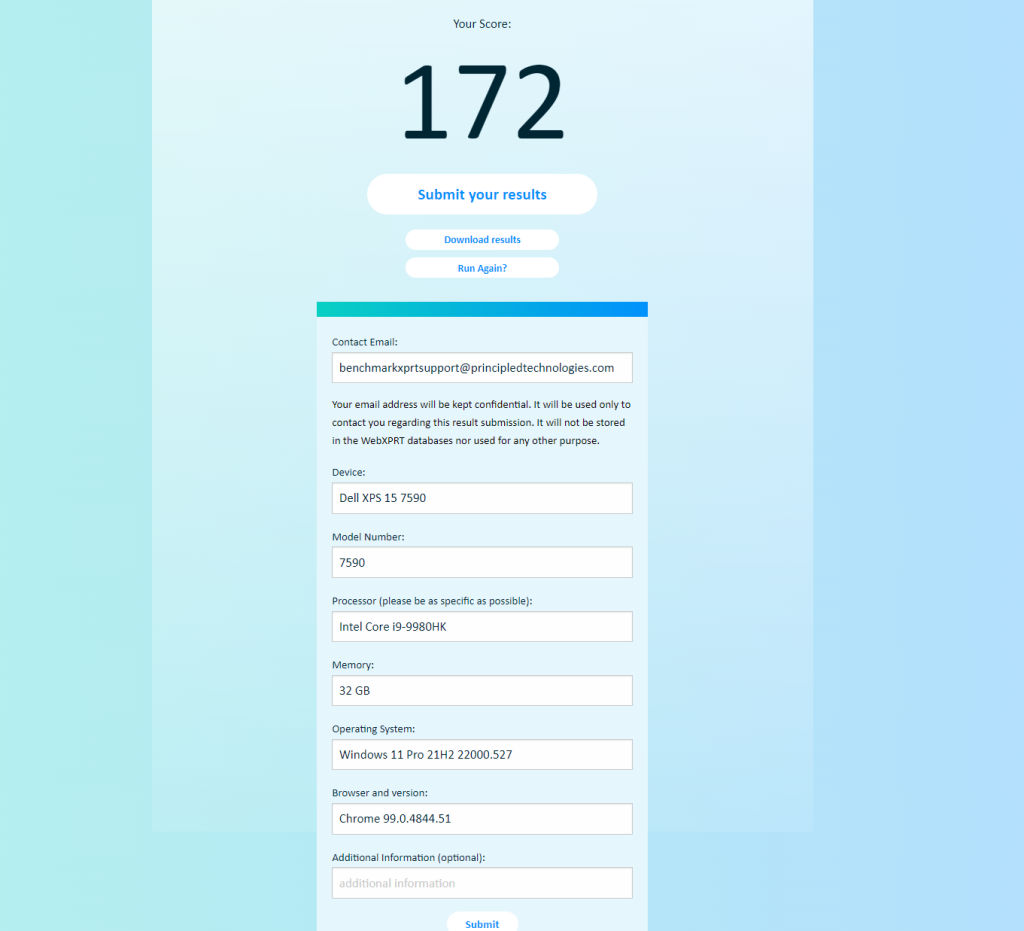

A good place to start looking for scores is the WebXPRT 4 results viewer. The viewer displays WebXPRT 4 scores from over 175 devices, including many hot new releases, and we’re adding new scores all the time. To learn more about the viewer’s capabilities and how you can use it to compare devices, check out this blog post.

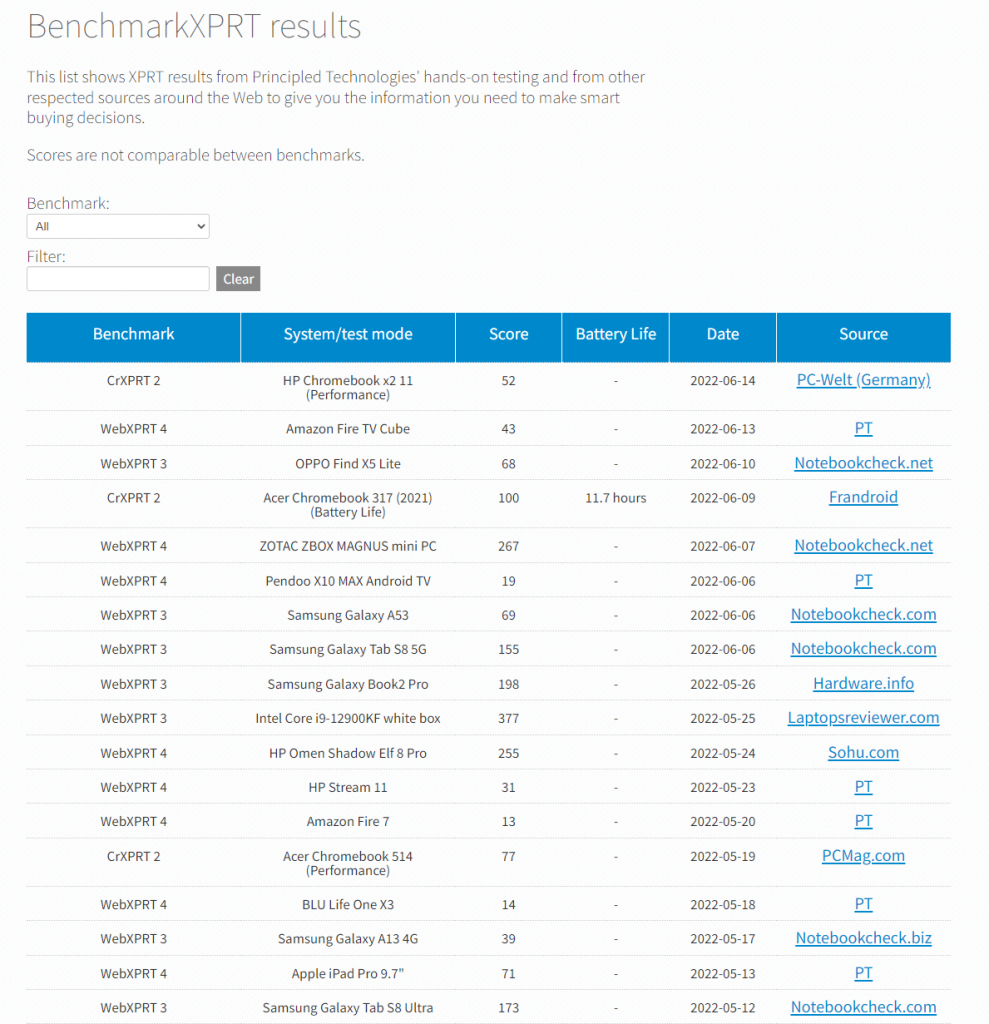

Another resource we offer is the XPRT results browser. The browser is the most efficient way to access the XPRT results database, which currently holds more than 3,000 test results from over 120 sources, including major tech review publications around the world, OEMs, and independent testers. It offers a wealth of current and historical performance data across all of the XPRT benchmarks and hundreds of devices. You can read more about how to use the results browser here.

Also, if you’re considering a popular device, chances are good that a recent tech review includes an XPRT score for that device. There are two quick ways to find these reviews: (1) go to your favorite tech review site and search for “XPRT,” or (2) go to a search engine and enter the device name and the XPRT name (e.g., “Apple MacBook Air” and “WebXPRT”). Here are a few recent tech reviews that use one of the XPRTs to evaluate a popular device:

- Notebookcheck used WebXPRT in reviews of the Acer Swift X 16, Apple MacBook Air, ASUS ROG Flow X16, Lenovo V17G2, and Nothing Phone (1), as well as in a recent article titled “The Best Smartphones.”

- PCMag used WebXPRT 3 to compare the M1 Max and M1 Ultra versions of the Apple Mac Studio, and to review the Apple MacBook Air (2022, M2).

- PCWorld used CrXPRT 2 in a feature titled “The best Chromebooks: Best overall, best battery life, and more.”

- ZDNet used CrXPRT 2 in a review titled “The 5 best Chromebooks for students: Top back-to-school picks.”

The XPRTs can help consumers make better-informed and more confident tech purchases. As this school year begins, we hope you’ll find the data you need on our site or in an XPRT-related tech review. If you have any questions about the XPRTs, XPRT scores, or the results database, please feel free to ask!

Justin