The holiday shopping season is upon us, and trying to find the right tech gift for your friends or loved ones (or yourself!) can be a daunting task. If you’re considering new phones, tablets, Chromebooks, laptops, or desktops as gifts this year—and are unsure where to get reliable device information—the XPRTs can help!

The XPRTs provide industry-trusted and time-tested measures of a device’s performance that can help you cut through the fog of competing marketing claims. For example, instead of guessing whether the performance of a new gaming laptop justifies its price, you can use its WebXPRT performance score to see how it stacks up against both older models and competitors when handling everyday tasks.

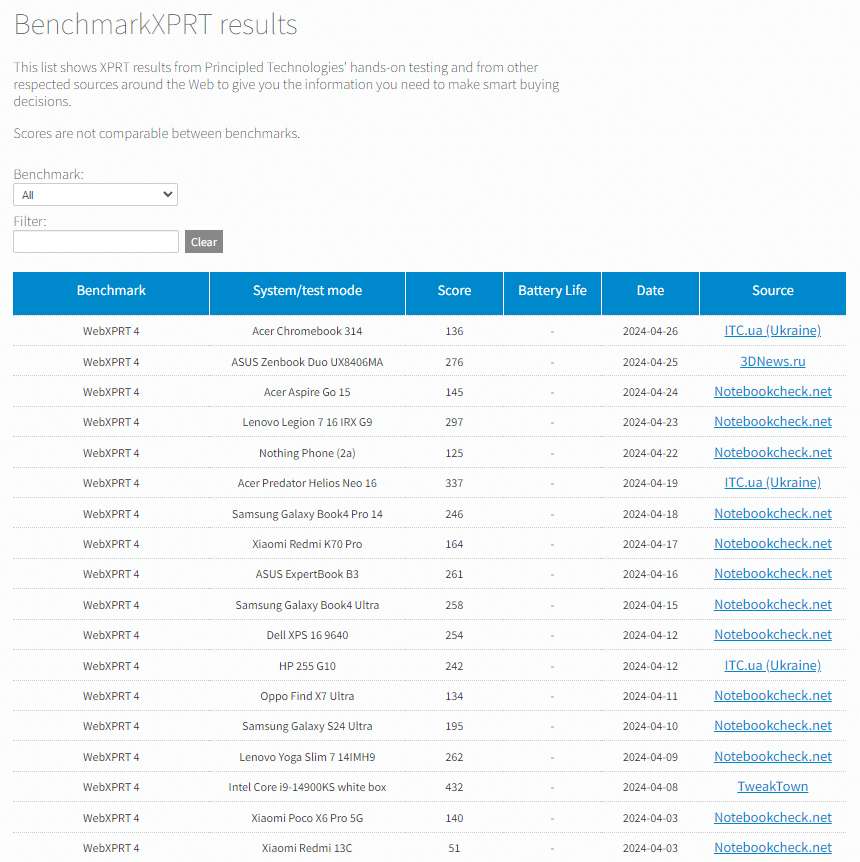

A great place to start looking for device scores is our XPRT results browser, which lets you access our database of more than 3,700 test results—across all the XPRT benchmarks and hundreds of devices—from over 155 sources, including major tech review publications around the world, OEMs, our own Principled Technologies (PT) testing, and independent submissions. For tips on how to use the XPRT results browser, check out this blog post.

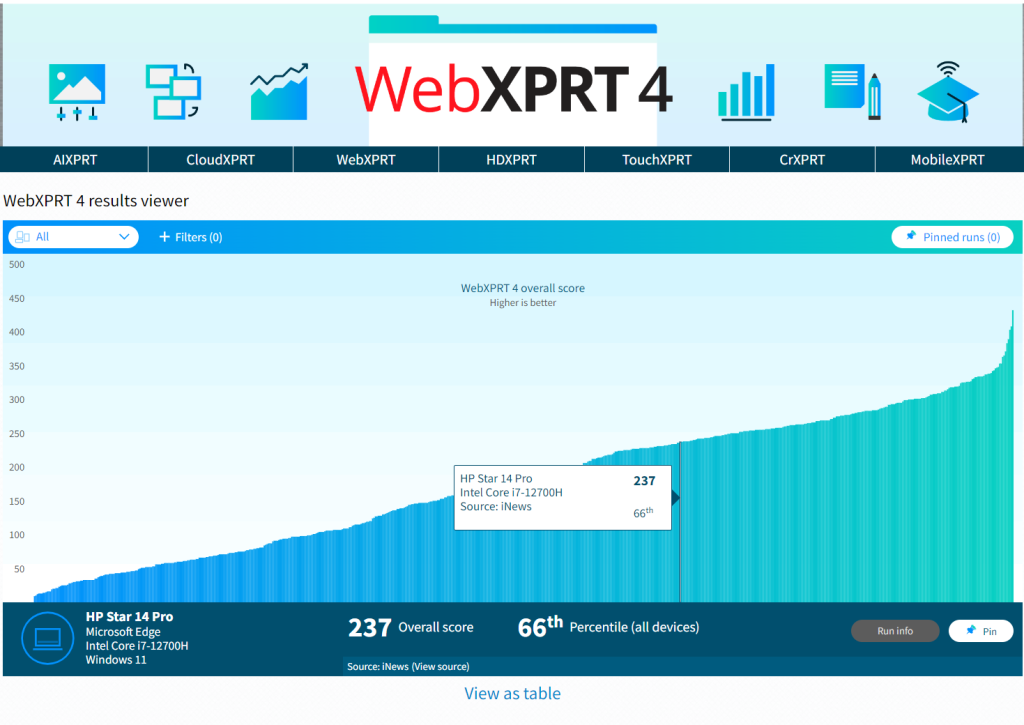

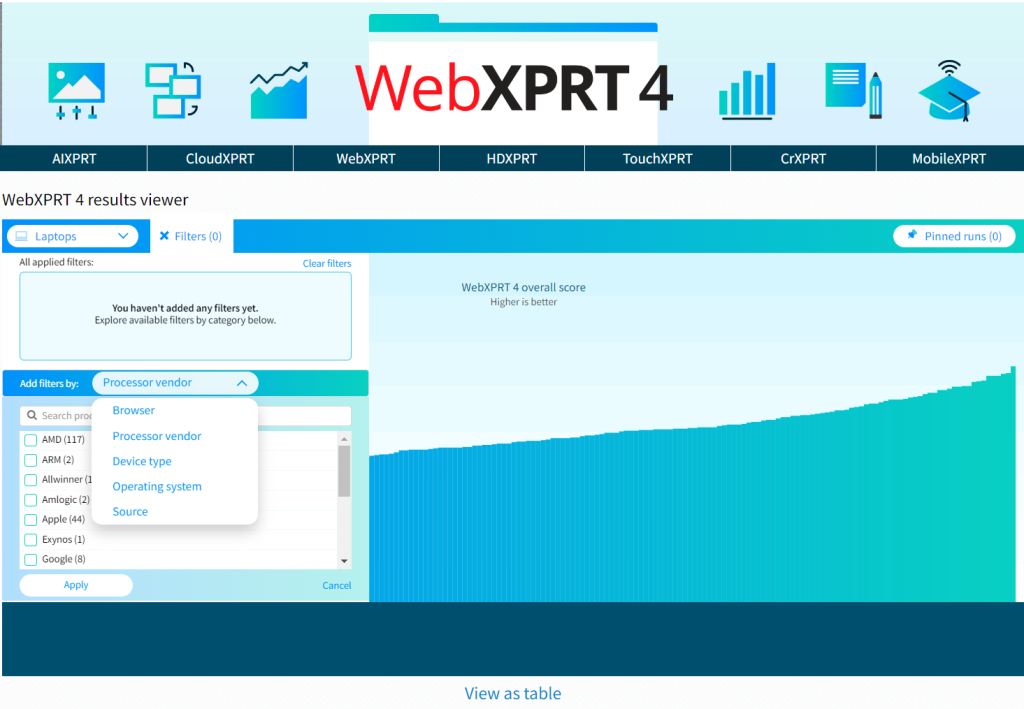

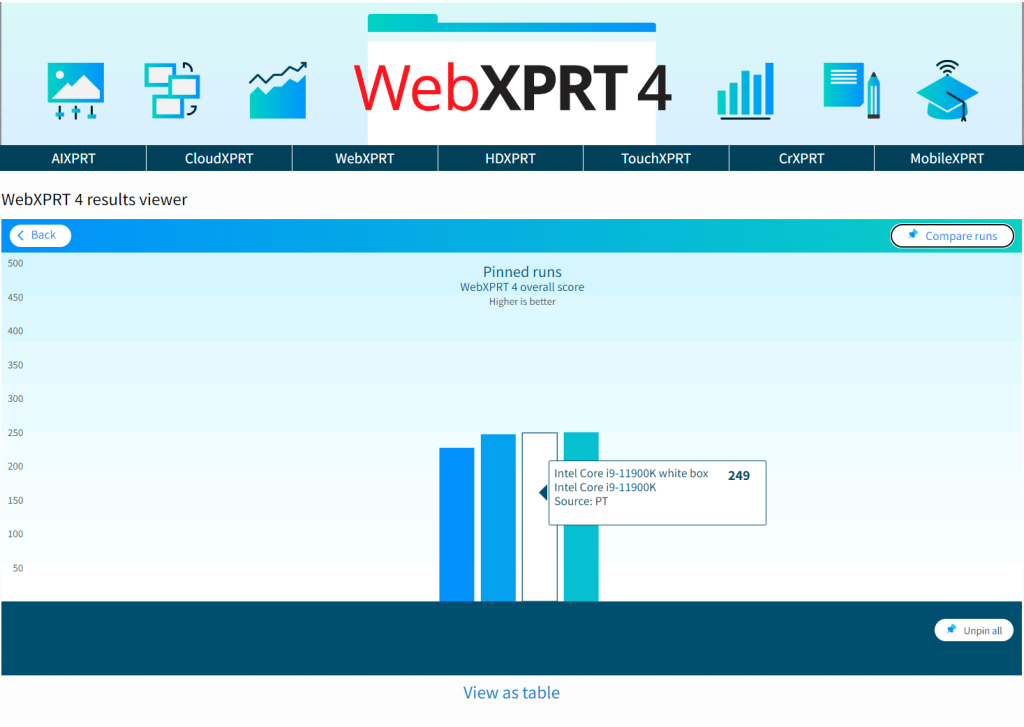

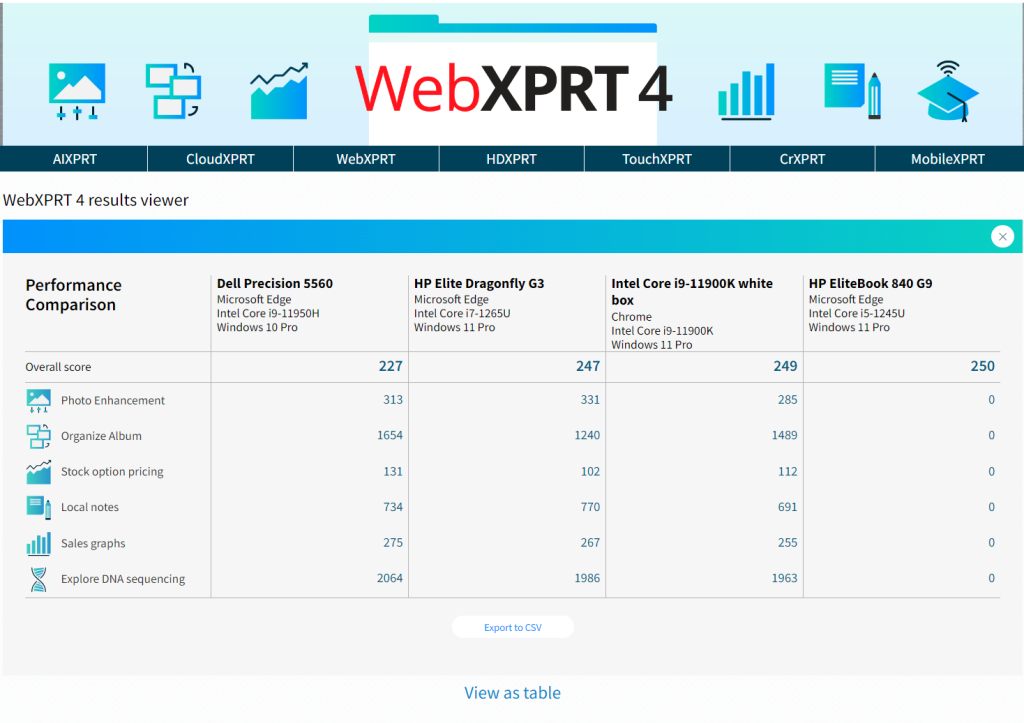

Another way to view information in our results database is the WebXPRT 4 results viewer. The viewer is an information-packed, interactive tool that we created to help people explore the set of almost 800 WebXPRT 4 results we’ve curated and published on our site to date. For detailed instructions on using the WebXPRT 4 results viewer, see this blog post.

If you’re considering a popular device, it’s likely that a recent tech press review includes an XPRT score for it. To find those scores, go to your favorite tech review site and search for “XPRT,” or enter the name of the device and the appropriate XPRT (e.g., “iPhone” and “WebXPRT”) in a search engine. Here are a few recent tech reviews that used the XPRTs to evaluate popular devices:

- GSMArena used WebXPRT to assess the performance of the ASUS Vivobook S15 Copilot+ PC.

- Notebookcheck used WebXPRT in reviews of the Apple iPhone 16 Plus, the Google Pixel 9, the HP OmniBook Ultra 14, the Lenovo Yoga Slim 7 15, and the Samsung Galaxy Book4 Edge 16.

- PCWorld used CrXPRT to evaluate the Acer Chromebook Spin 714 (2024).

- Tom’s Guide used WebXPRT in a review of the Lenovo Flex 5i Chromebook Plus.

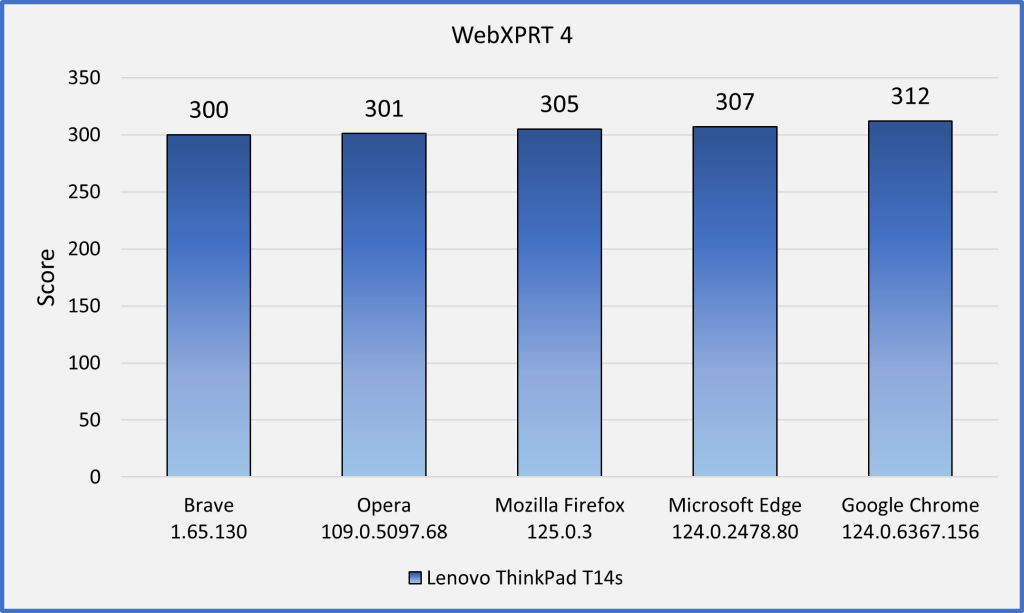

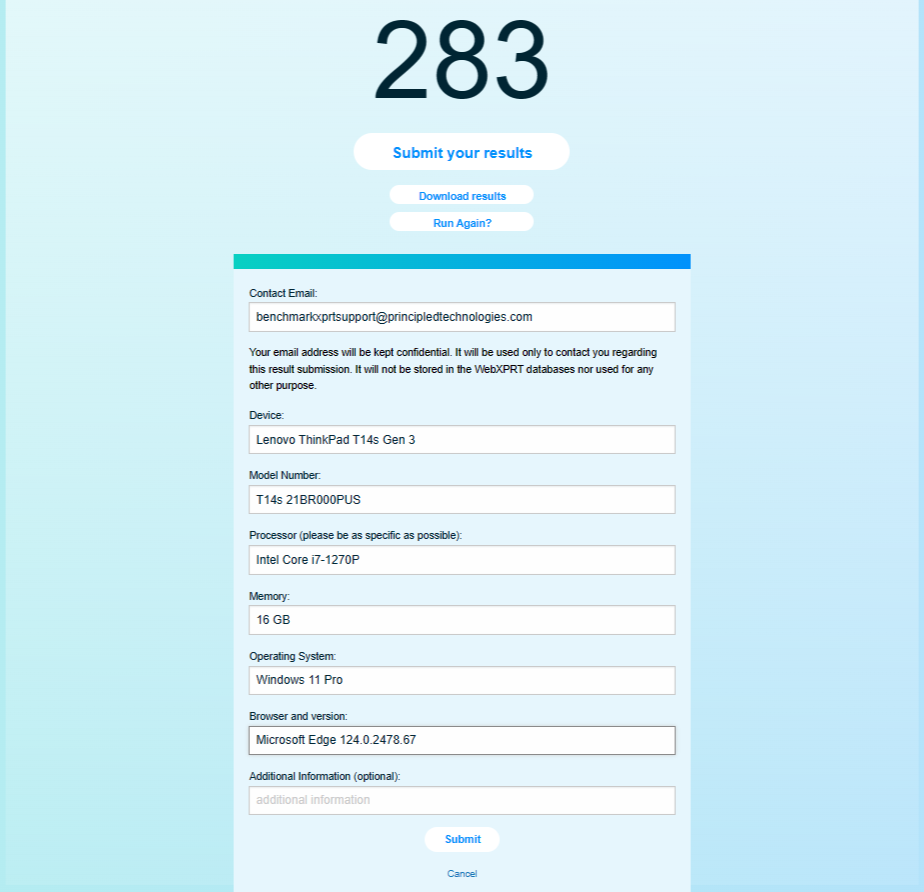

In addition to XPRT-related resources in the tech press, here at PT we frequently publish reports that evaluate the performance of hot new consumer devices, and many of those reports include WebXPRT scores. For example, check out the results from our extensive testing of a Dell Latitude 7450 AI PC or our in-depth evaluation of three new Lenovo ThinkPad and ThinkBook laptops.

The XPRTs can help you make better-informed and more confident tech purchases this holiday season. We hope you’ll find the data you need on our site or in an XPRT-related tech review. If you have any questions about the XPRTs, XPRT scores, or the results database, please feel free to ask!

Justin