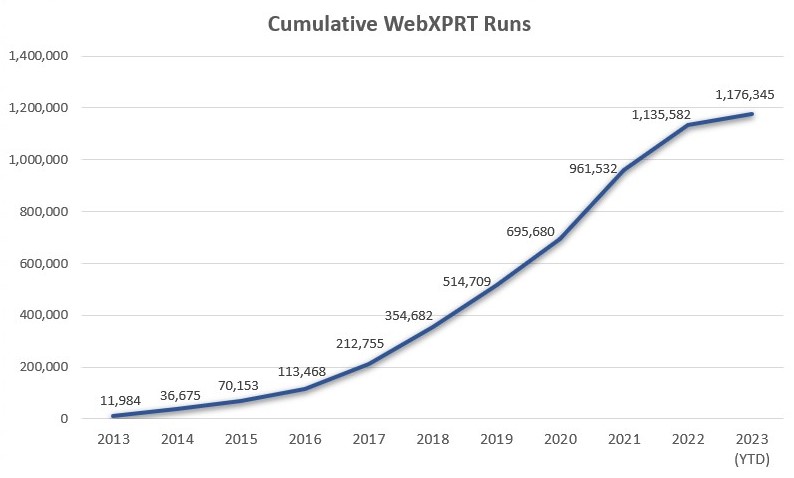

In our last blog post, we reflected on the 10-year anniversary of the WebXPRT launch by looking at the consistent growth in the number of WebXPRT runs over the last decade. Today, we wrap up our focus on WebXPRT’s anniversary by sharing some data about the benchmark’s truly global reach.

We occasionally update the community on some of the reach metrics we track by publishing a new version of the “XPRTs around the world” infographic. The metrics include completed test runs, benchmark downloads, and mentions of the XPRTs in advertisements, articles, and tech reviews. This information gives us insight into how many people are using the XPRT tools, and publishing the infographic helps readers and community members see the impact the XPRTs are having around the world.

WebXPRT is our most widely used benchmark by far and is responsible for much of the XPRTs’ global reach. Since February 2013, users have run WebXPRT more than 1,176,000 times. Those test runs took place in over 924 cities located in 81 countries on six continents. Some interesting new locations for completed WebXPRT runs include Rajarampur, Bangladesh; Al Muharraq, Bahrain; Manila, the Philippines; Skopje, Macedonia; and Ljubljana, Slovenia.

We’re pleased that WebXPRT has proven to be a useful and reliable performance evaluation tool for so many people in so many geographically distant locations. If you’ve ever run WebXPRT in a country that is not highlighted in the “XPRTs around the world” infographic, we’d love to hear about it!

Justin