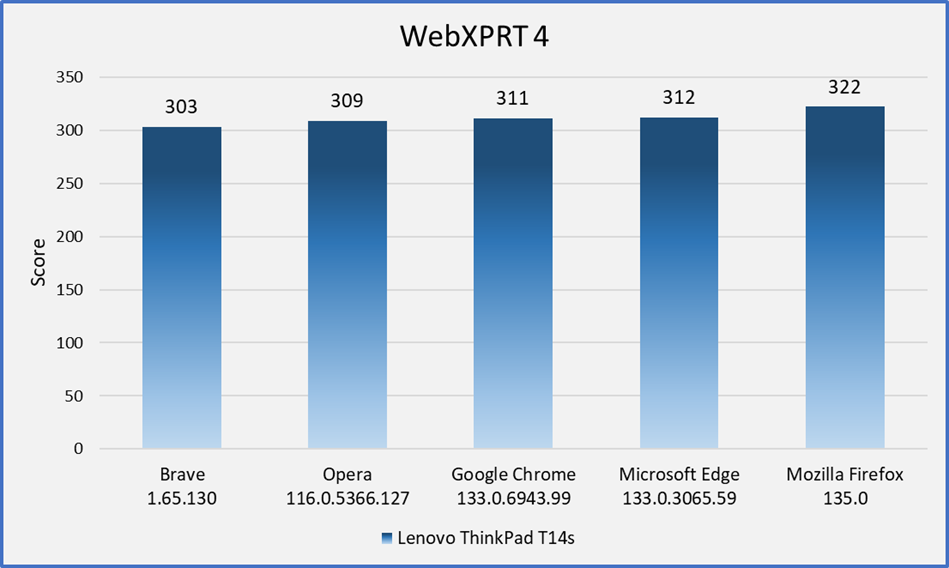

Once or twice per year, we refresh our ongoing series of WebXPRT comparison tests to see if software version updates have reordered the performance rankings of popular web browsers. We published our most recent comparison last June, when we used WebXPRT 4 to compare the performance of five browsers—Brave, Google Chrome, Microsoft Edge, Mozilla Firefox, and Opera—on a Lenovo ThinkPad T14s Gen 3. When assessing performance differences, it’s worth noting that all the browsers—except for Firefox—are built on a Chromium foundation. In the last round of tests, the scores were very tight, with a difference of only four percent between the last-place browser (Brave) and the winner (Chrome). Firefox’s score landed squarely in the middle of the pack.

Recently, we conducted a new set of tests to see how performance scores may have changed. To maintain continuity with our last comparison, we stuck with the same ThinkPad T14s as our reference system. That laptop is still in line with current mid-range laptops, so our comparison scores are likely to fall within the range of scores we would see from a typical user today. The ThinkPad is equipped with an Intel Core i7-1270P processor and 16 GB of RAM, and it’s running Windows 11 Pro, version 23H2 (22631.4890).

Before testing, we installed all current Windows updates, and we updated each of the browsers to the latest available stable version. After the update process was complete, we turned off updates to prevent any interference with test runs. We ran WebXPRT 4 five times on each of the five browsers. In Figure 1 below, each browser’s score is the median of the five test runs.
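If you want to summarize your own runs the same way, a minimal sketch of the median step might look like the following. The run scores in it are placeholder values chosen only for illustration, not results from our testing, and the function name `median_score` is hypothetical.

```python
from statistics import median

# Placeholder overall scores for five runs of one browser.
# These are illustrative values only, NOT measured results.
example_runs = [310, 305, 312, 308, 307]


def median_score(runs):
    """Return the median of a list of per-run overall scores."""
    if not runs:
        raise ValueError("need at least one run score")
    return median(runs)


print(median_score(example_runs))
```

Reporting the median rather than the mean keeps a single unusually high or low run from shifting the reported score.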

In this round of tests, the gap widened a bit between first and last place scores, with a difference of just over six percent between the lowest median score of 303 (Brave) and the highest median score of 322 (Firefox).
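For readers who want to check the arithmetic, here is a short sketch of how the gap between two median scores can be expressed as a percentage. It assumes the lower score is the baseline, and the helper name `percent_gap` is hypothetical.

```python
def percent_gap(lowest, highest):
    """Percentage by which `highest` exceeds `lowest` (lowest is the baseline)."""
    if lowest <= 0:
        raise ValueError("baseline score must be positive")
    return (highest - lowest) / lowest * 100


# Median scores reported above: Brave (303) and Firefox (322).
gap = percent_gap(303, 322)
print(f"{gap:.1f}%")  # prints 6.3%
```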

The distribution of scores indicates that most users would not see a significant performance difference if they switched between the latest versions of these browsers. The one exception may be a switch from the latest version of Brave to the latest version of Firefox. Even then, the quality of your browsing experience will often depend on other factors. The types of things you do on the web (e.g., gaming, media consumption, or multi-tab browsing), the type and number of extensions you’ve installed, and how frequently the browsers issue updates and integrate new technologies can all affect browser performance over time, as can other factors. It’s important to keep such variables in mind when thinking about how browser performance comparison results may translate to your everyday web experience.

Have you tried using WebXPRT 4 in your own browser performance comparison? If so, we’d love to hear about it! Also, please let us know if there are other types of WebXPRT comparisons you’d like to see!

Justin