Real-world testing. Real-world value.

Explore some of the many workloads we can use to prove your solution’s benefits.

3DMark

Acoustic testing

Aerospike Database

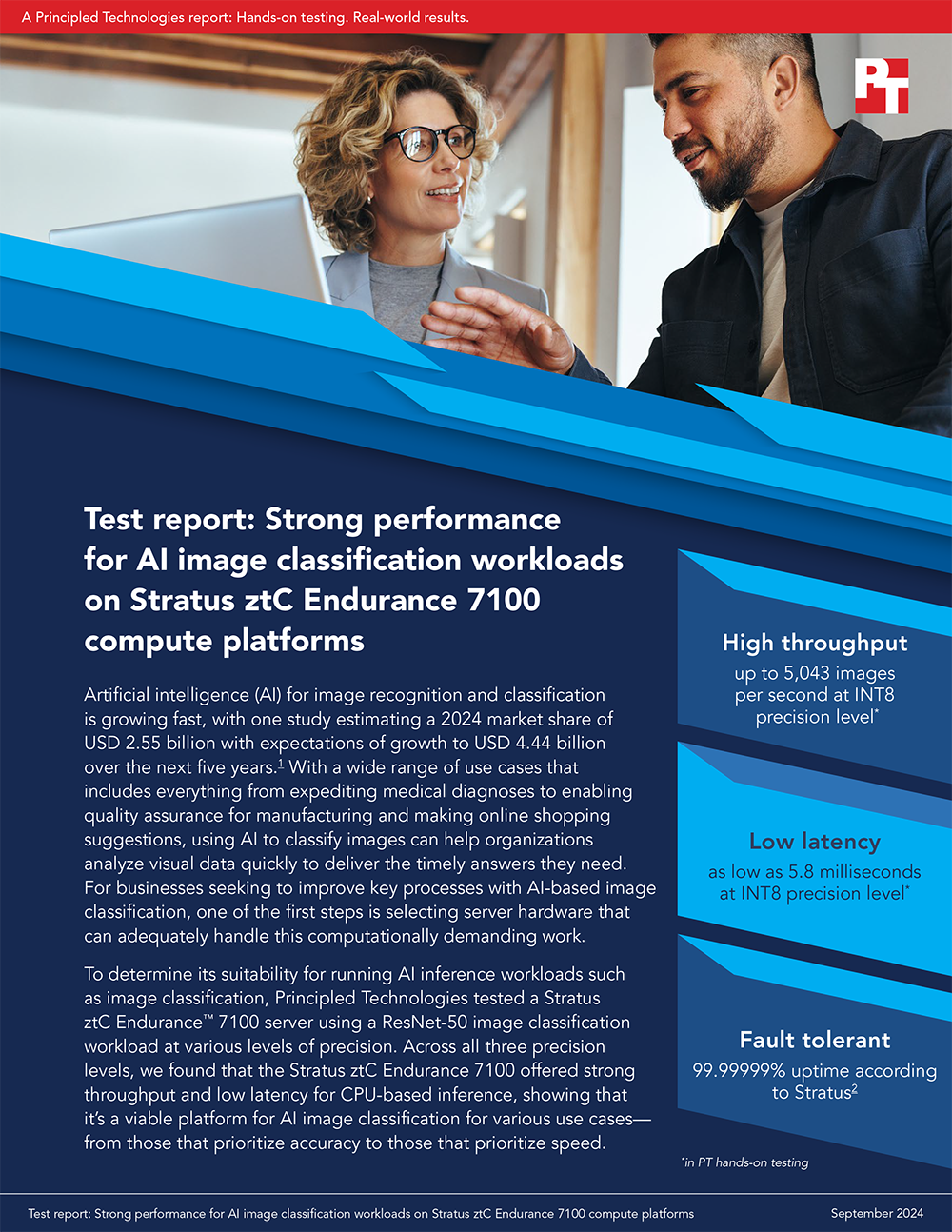

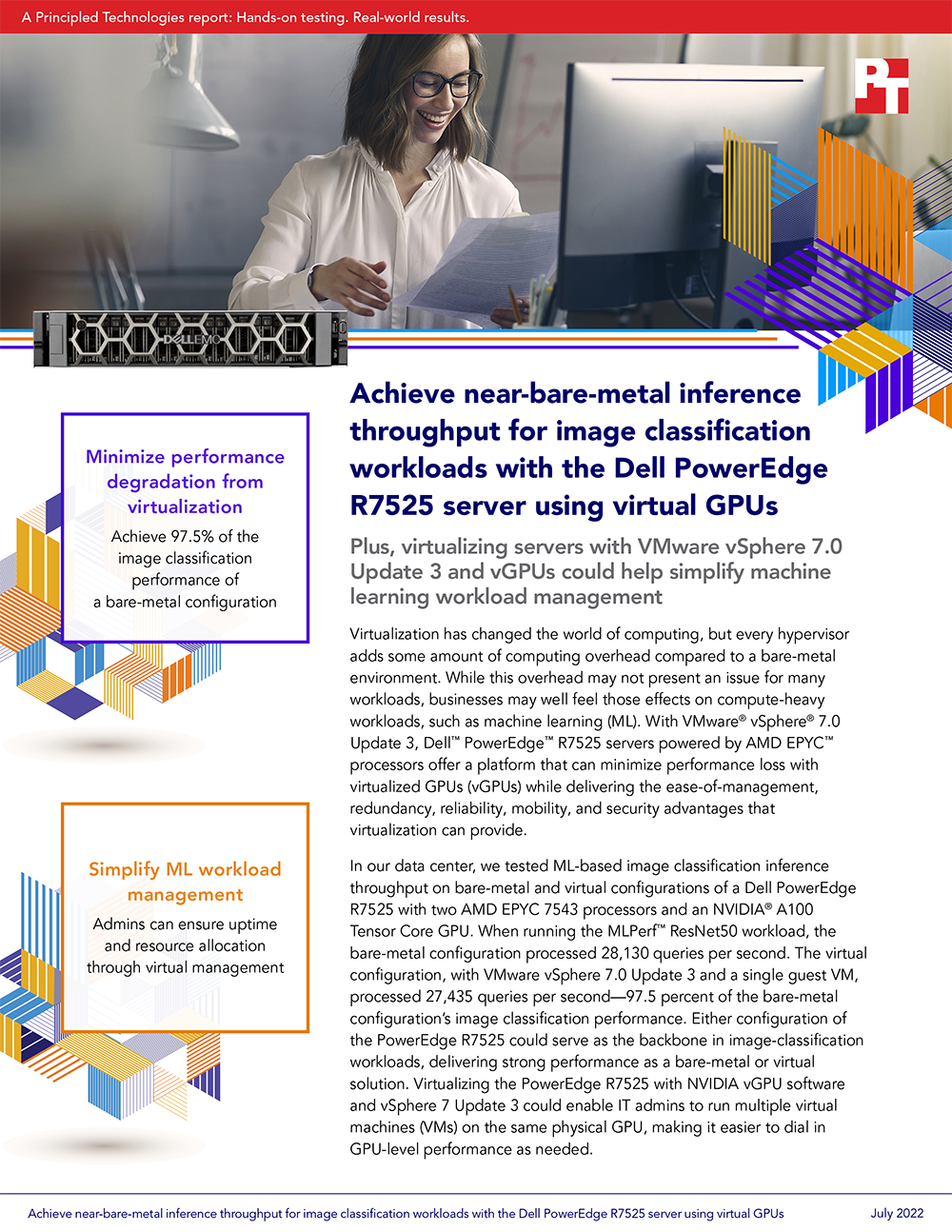

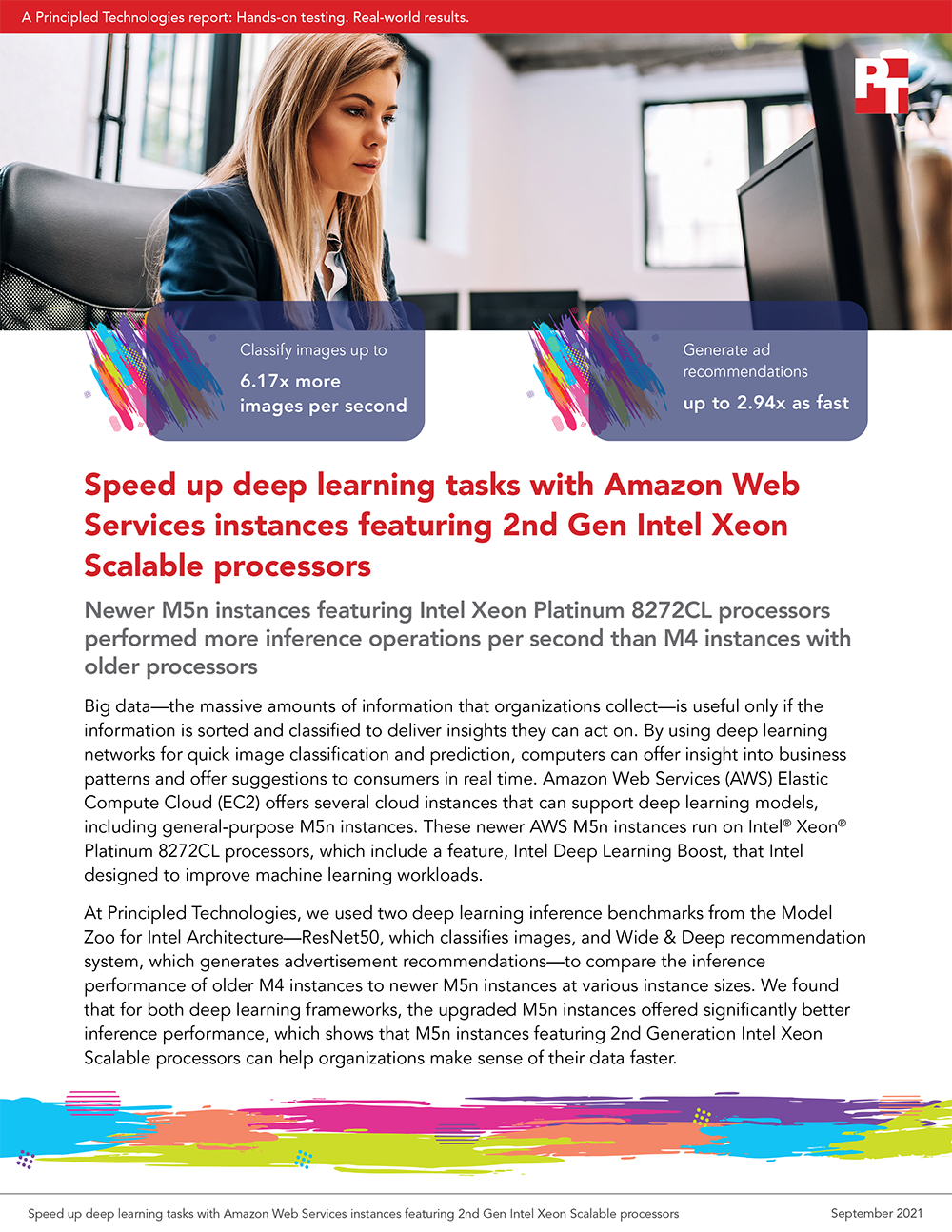

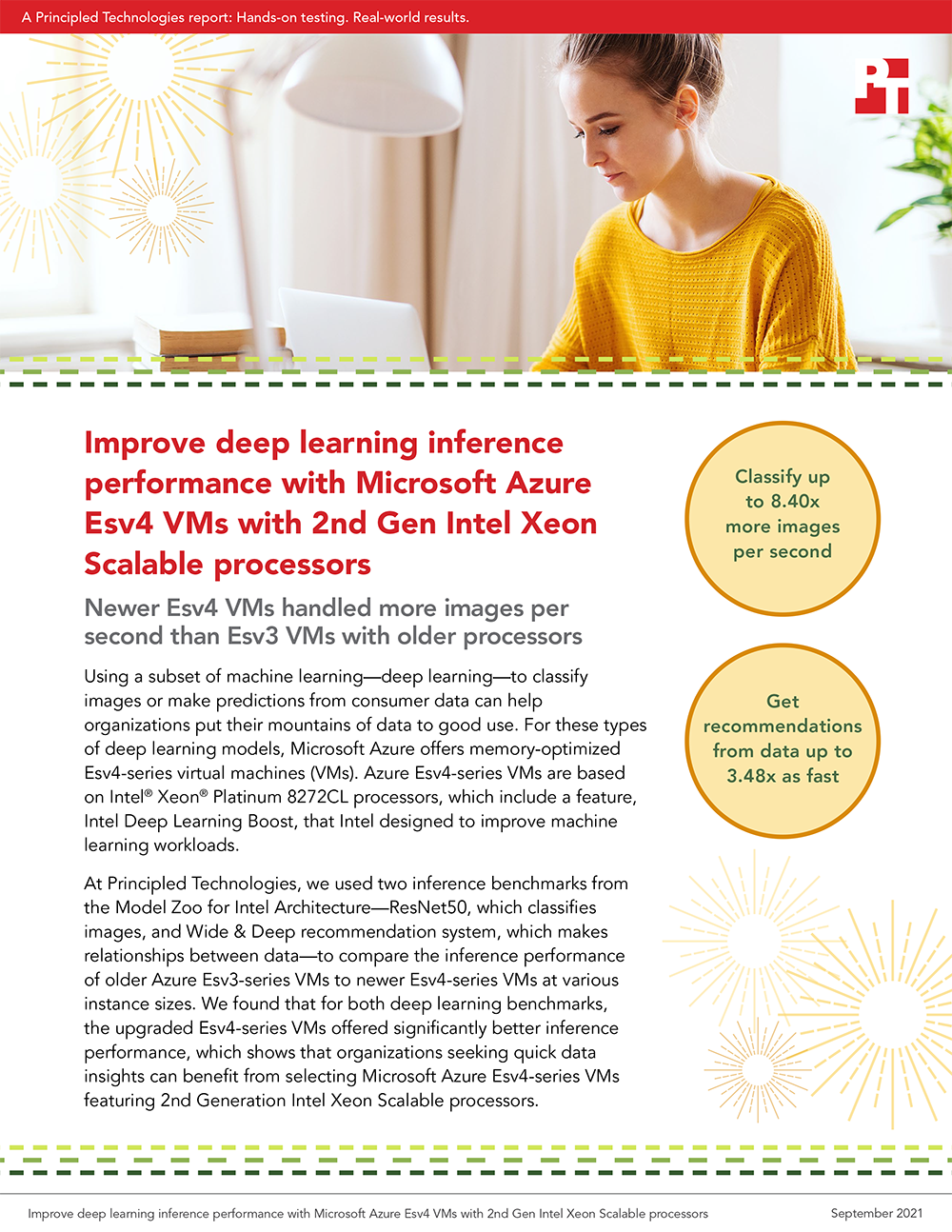

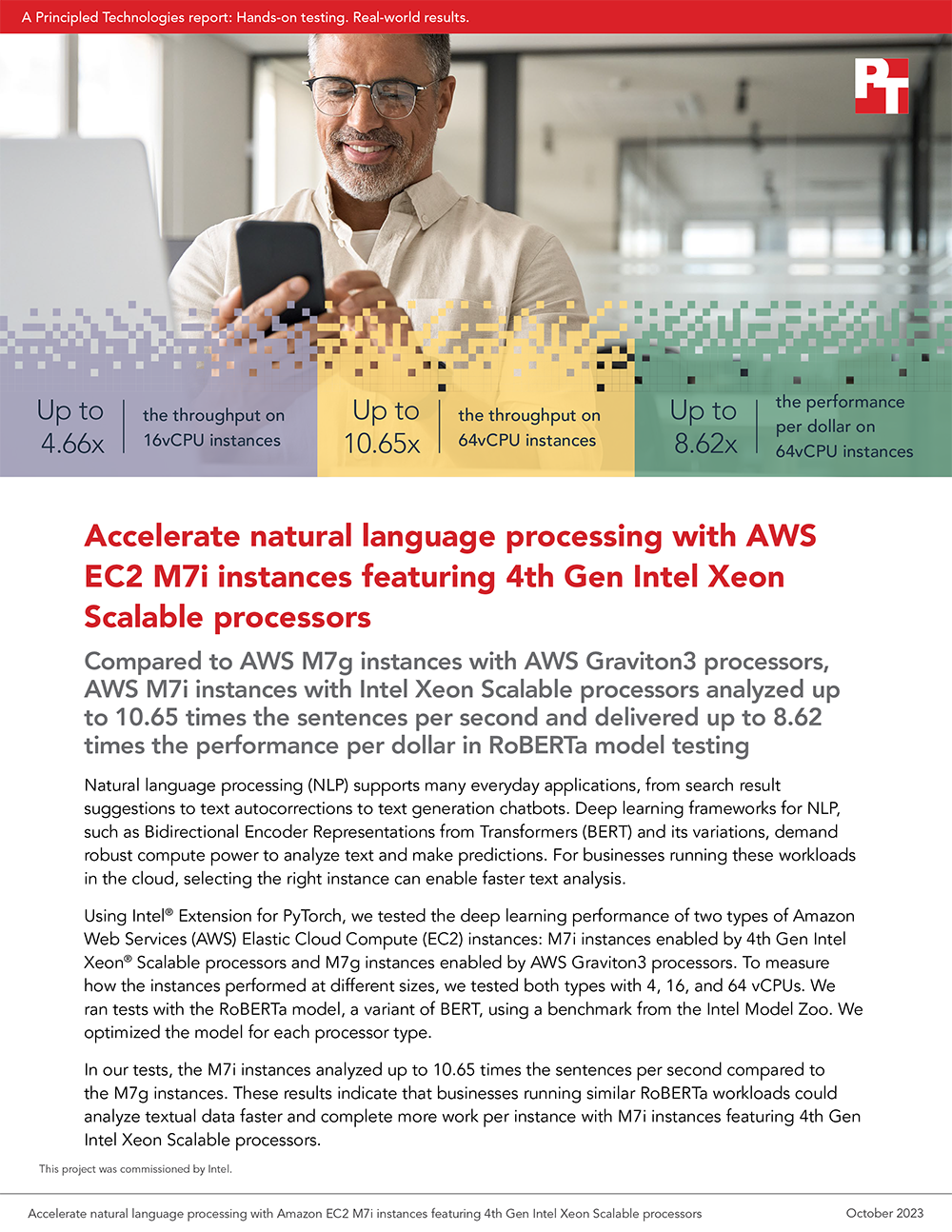

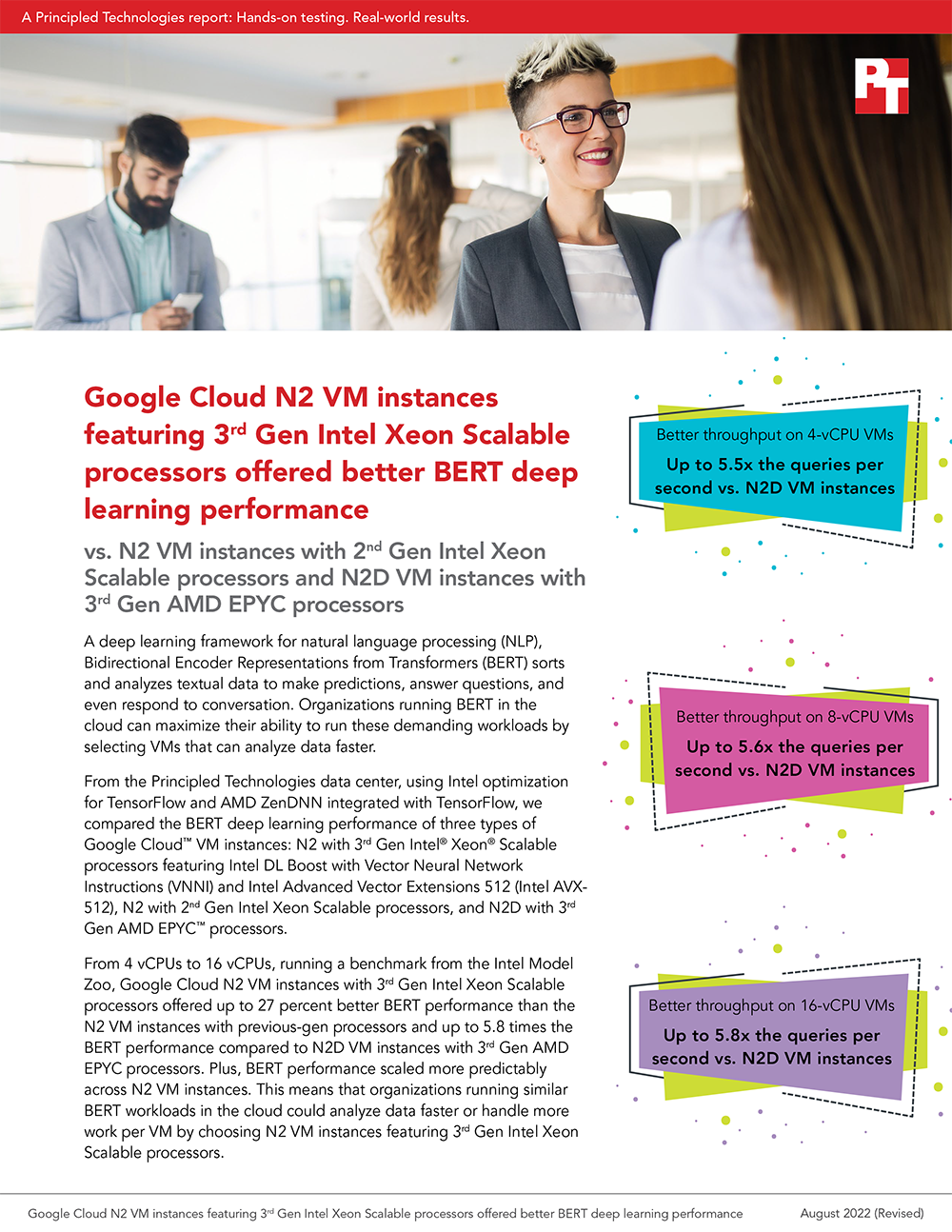

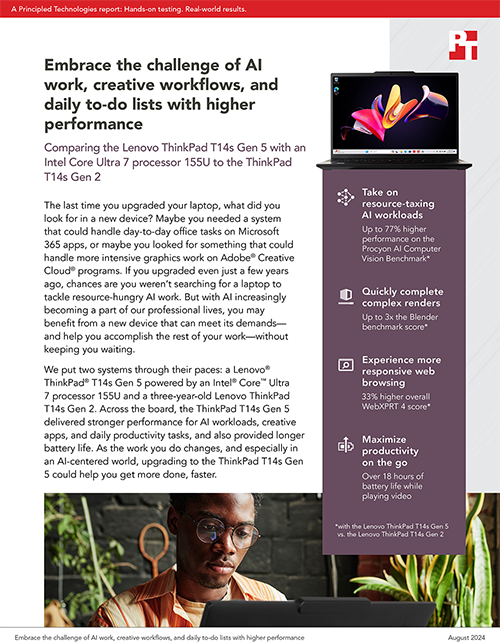

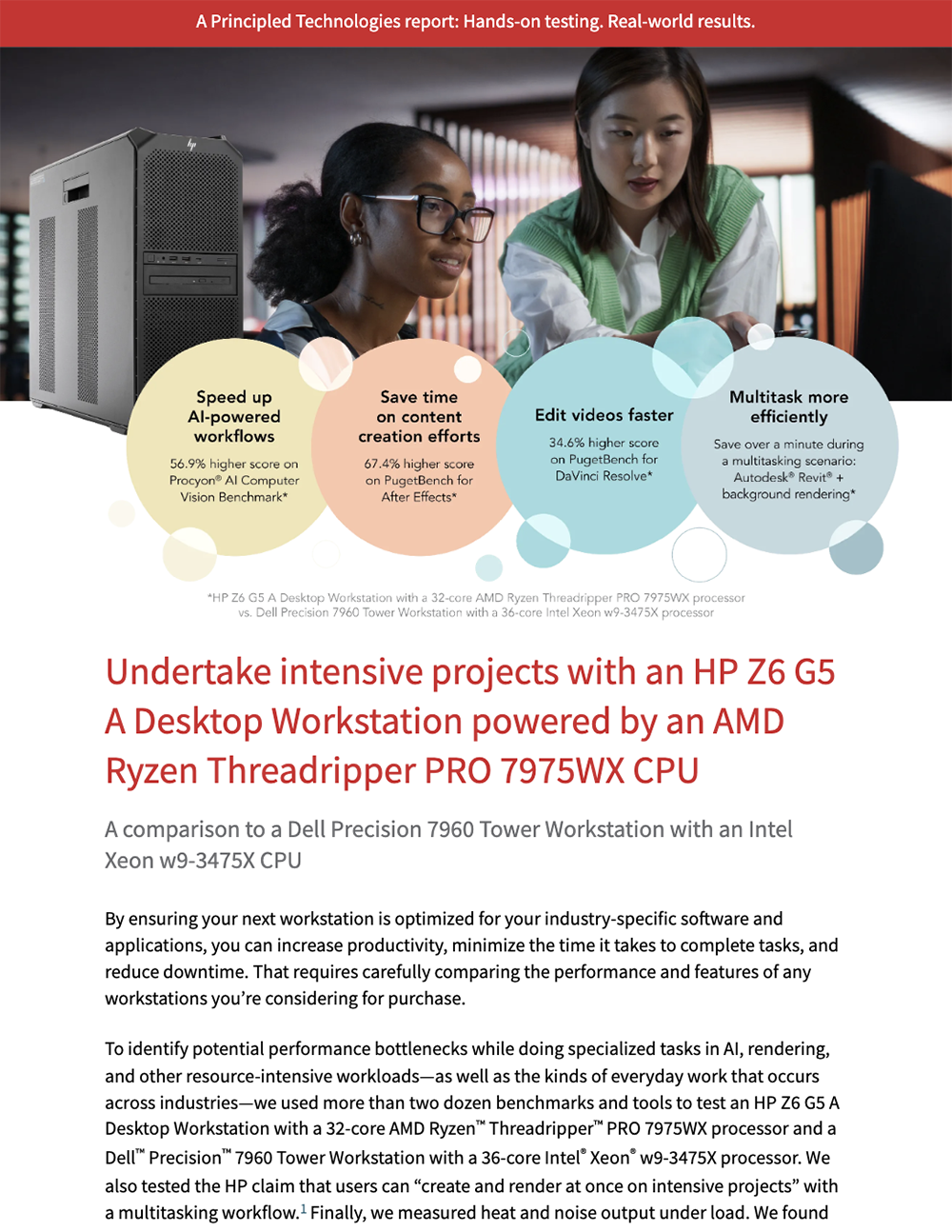

AI/ML testing: Image classification

AI/ML testing: Image segmentation

AI/ML testing: Language processing and LLMs

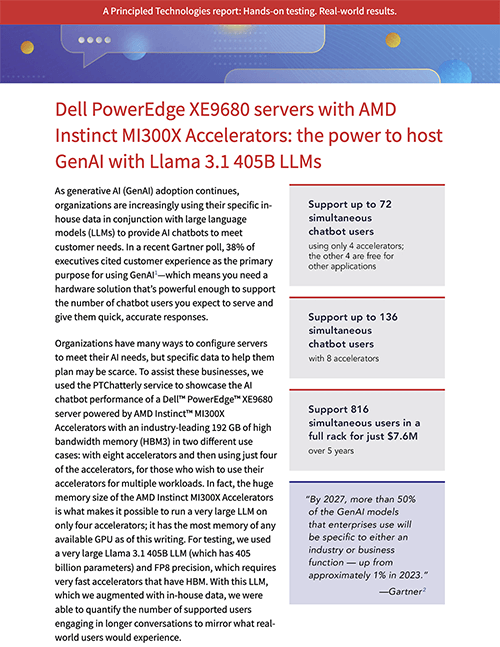

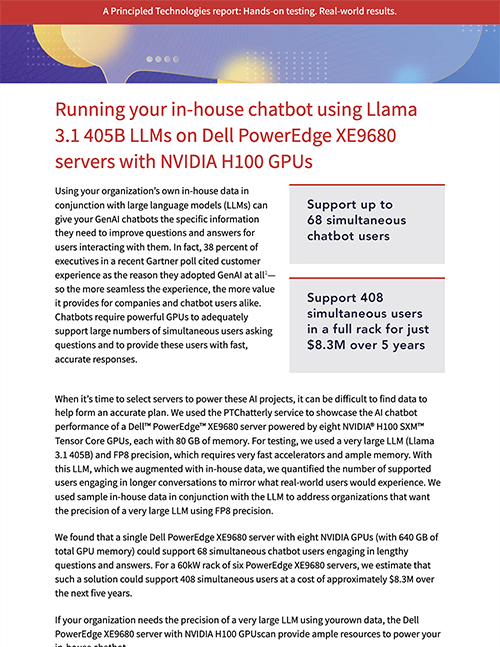

AI/ML testing: LLM testing with PTChatterly

AI/ML testing: Object detection

AI/ML testing: Recommendations

AI/ML testing: Speech recognition

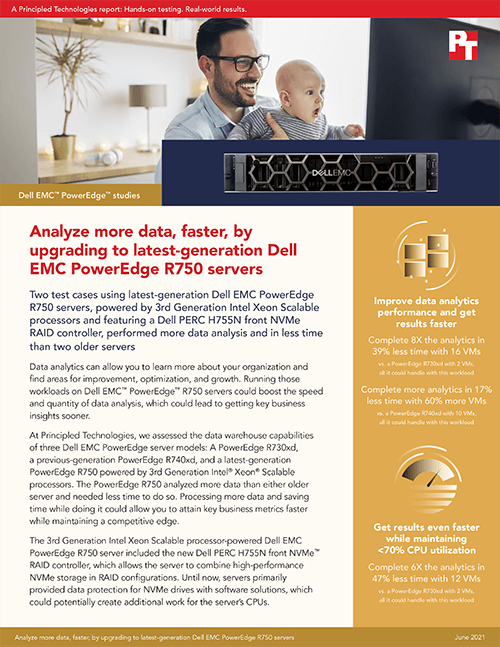

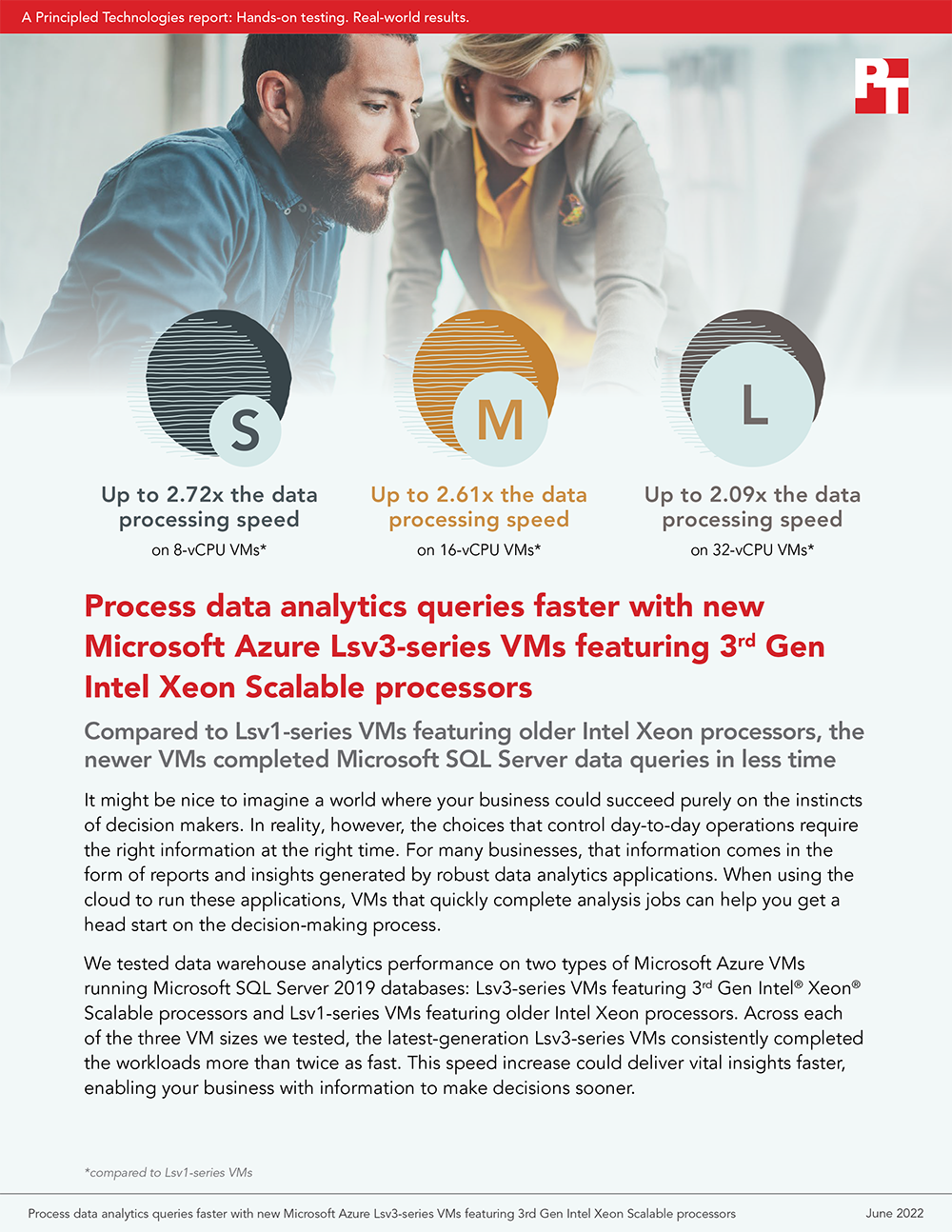

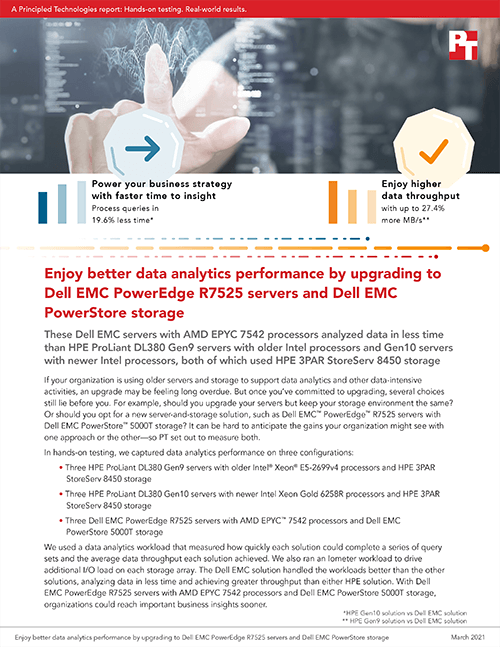

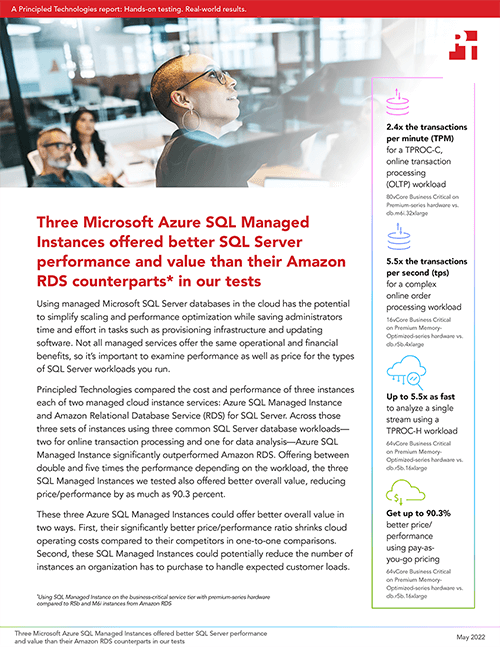

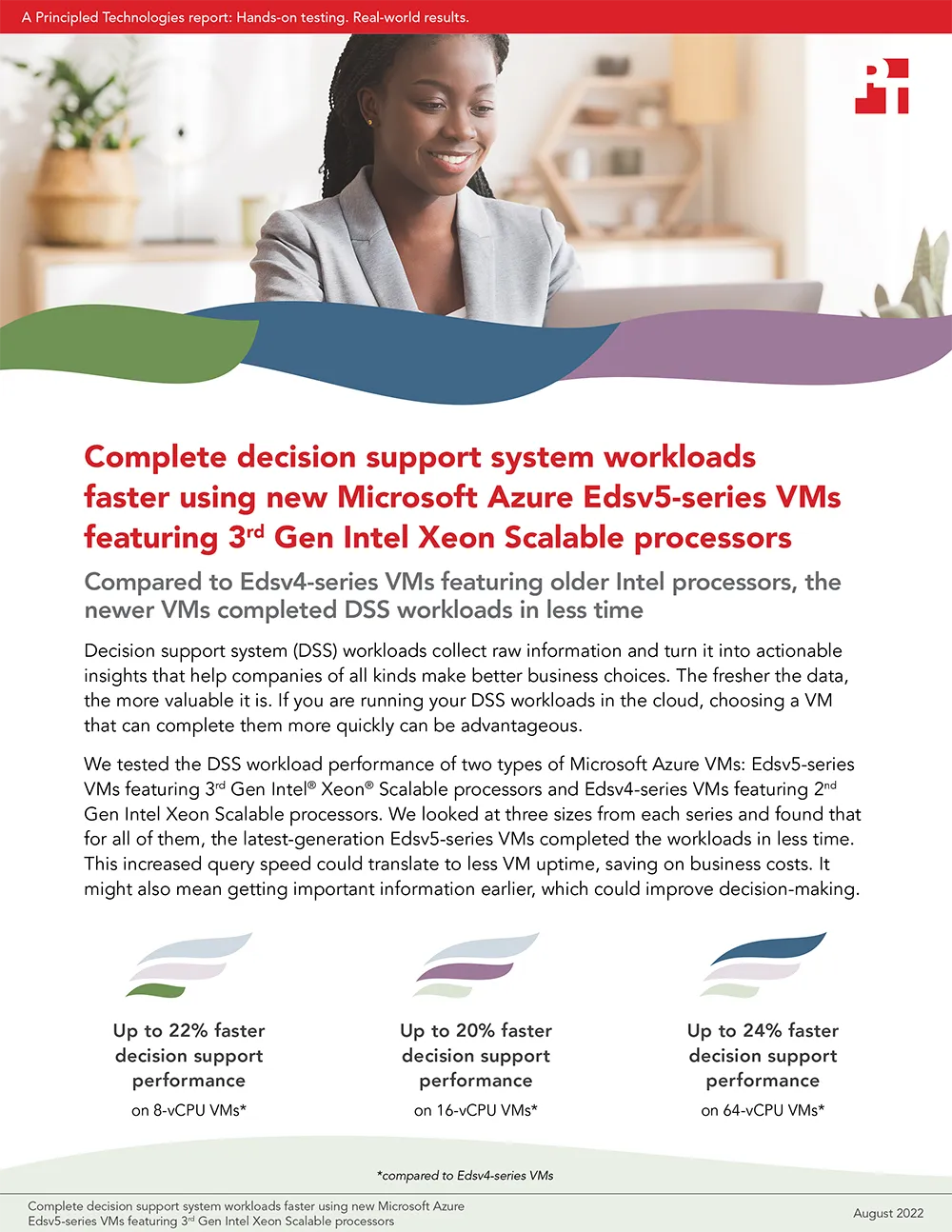

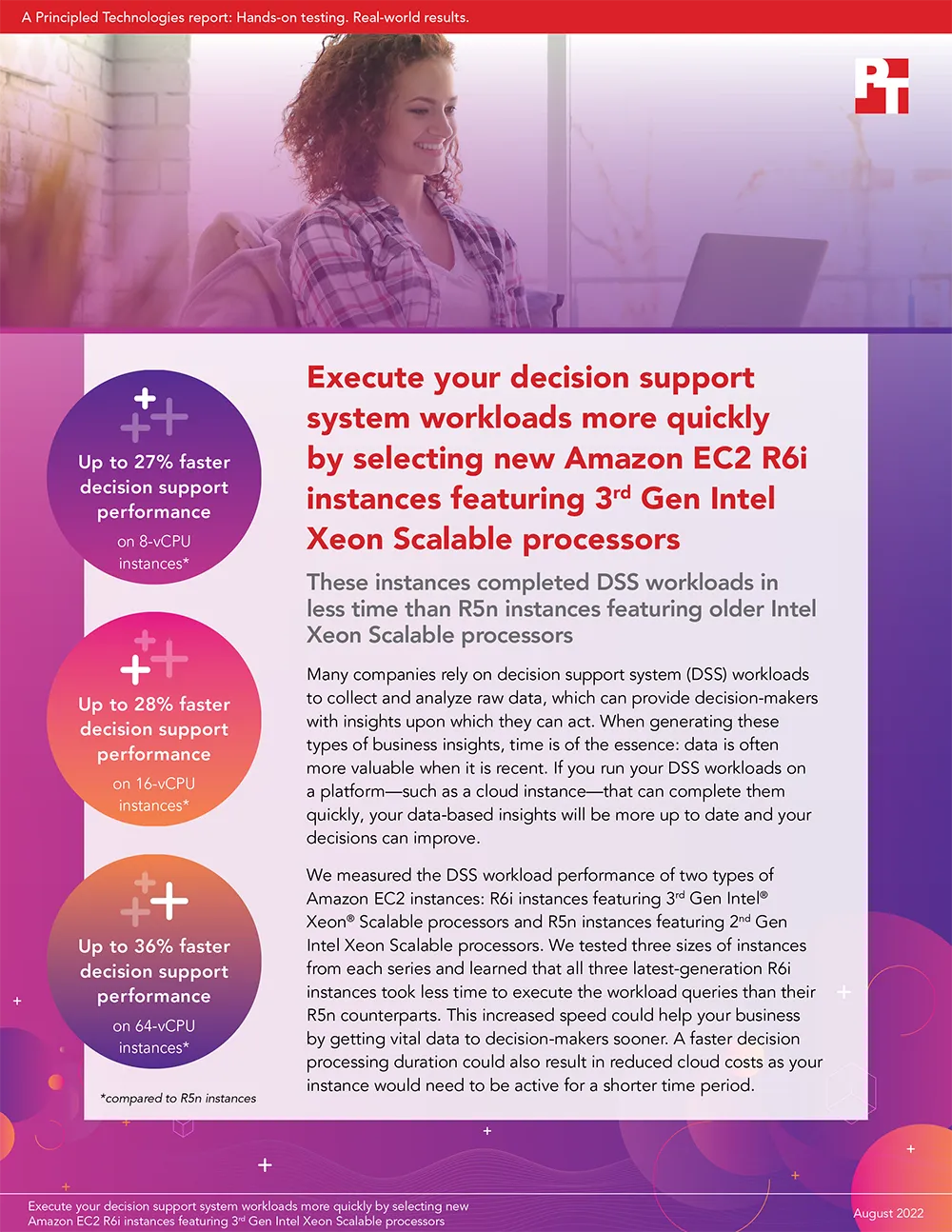

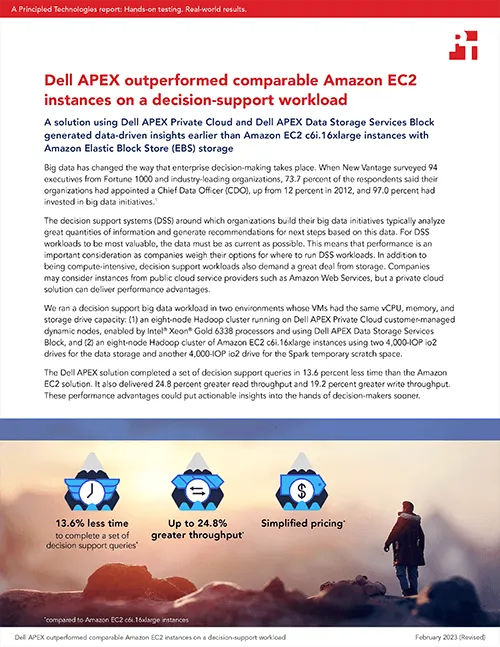

Analytics with TPROC-H

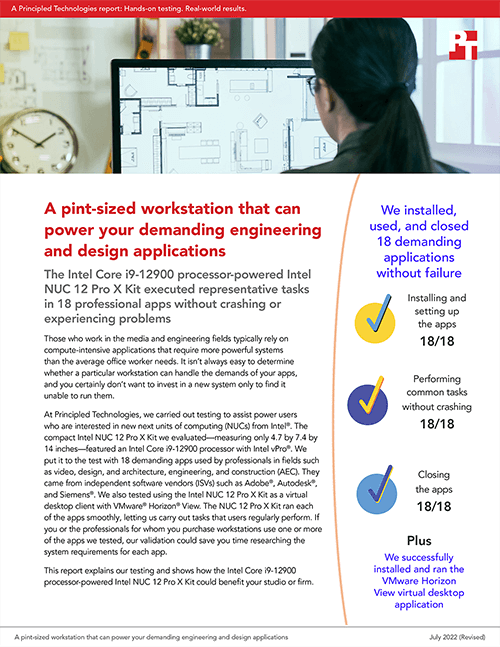

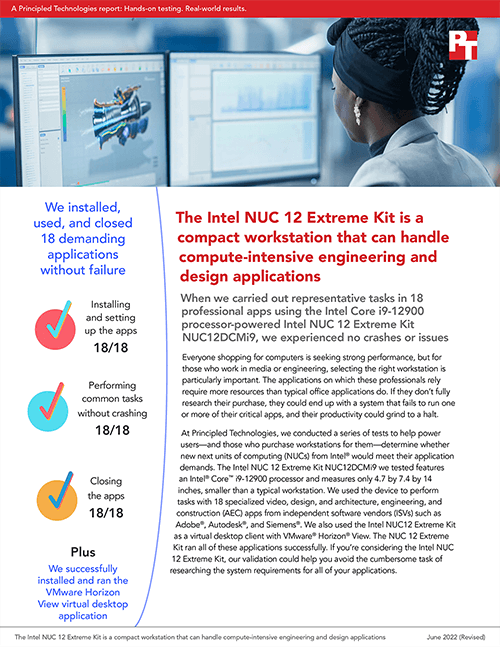

Application verification

Backup and recovery studies (end-user)

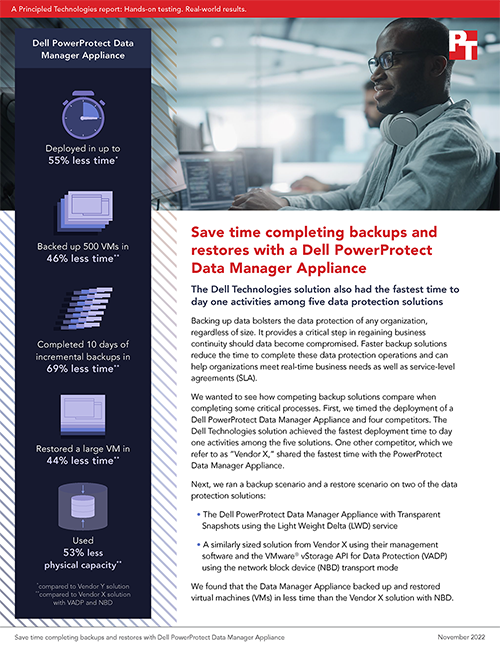

Backup and recovery studies (enterprise)

Basemark GPU

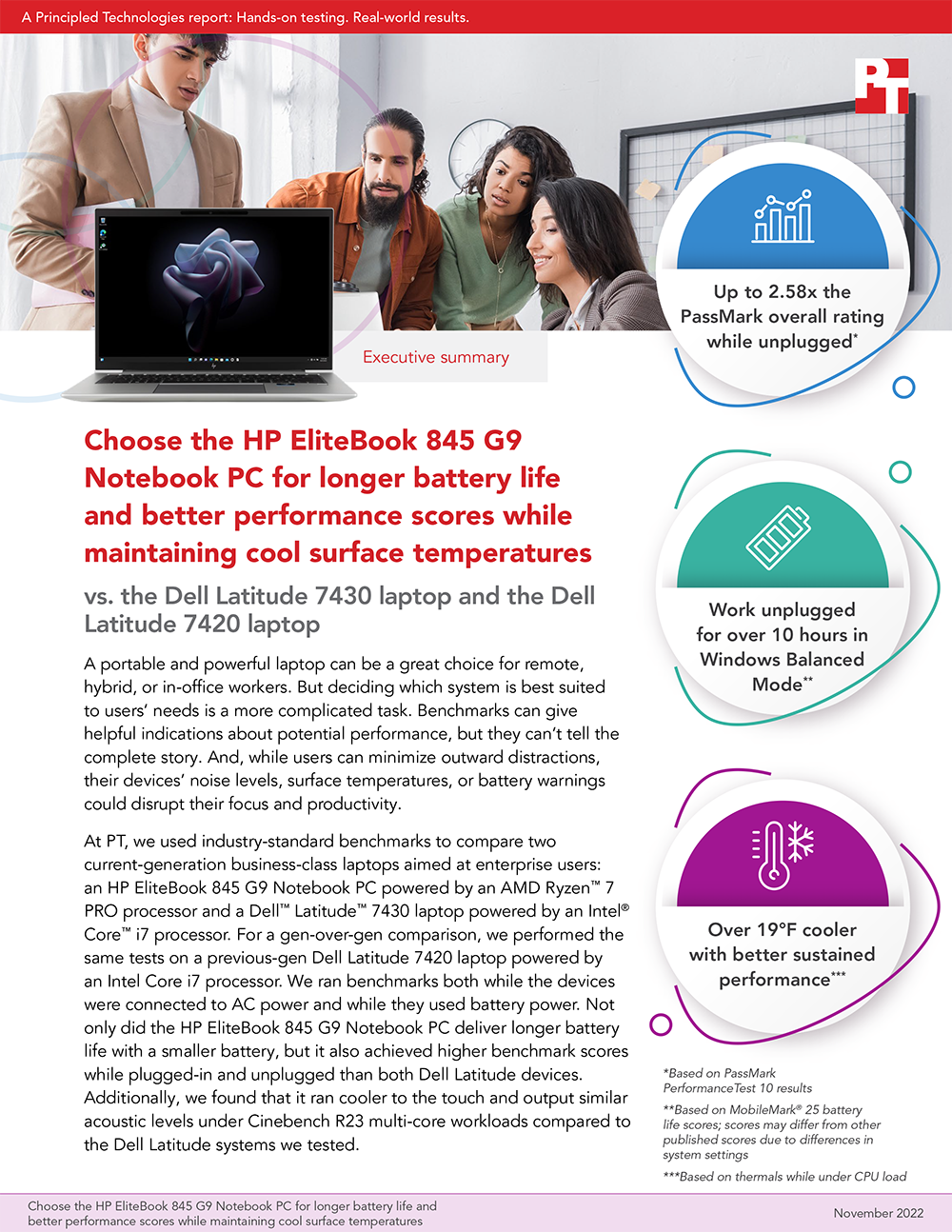

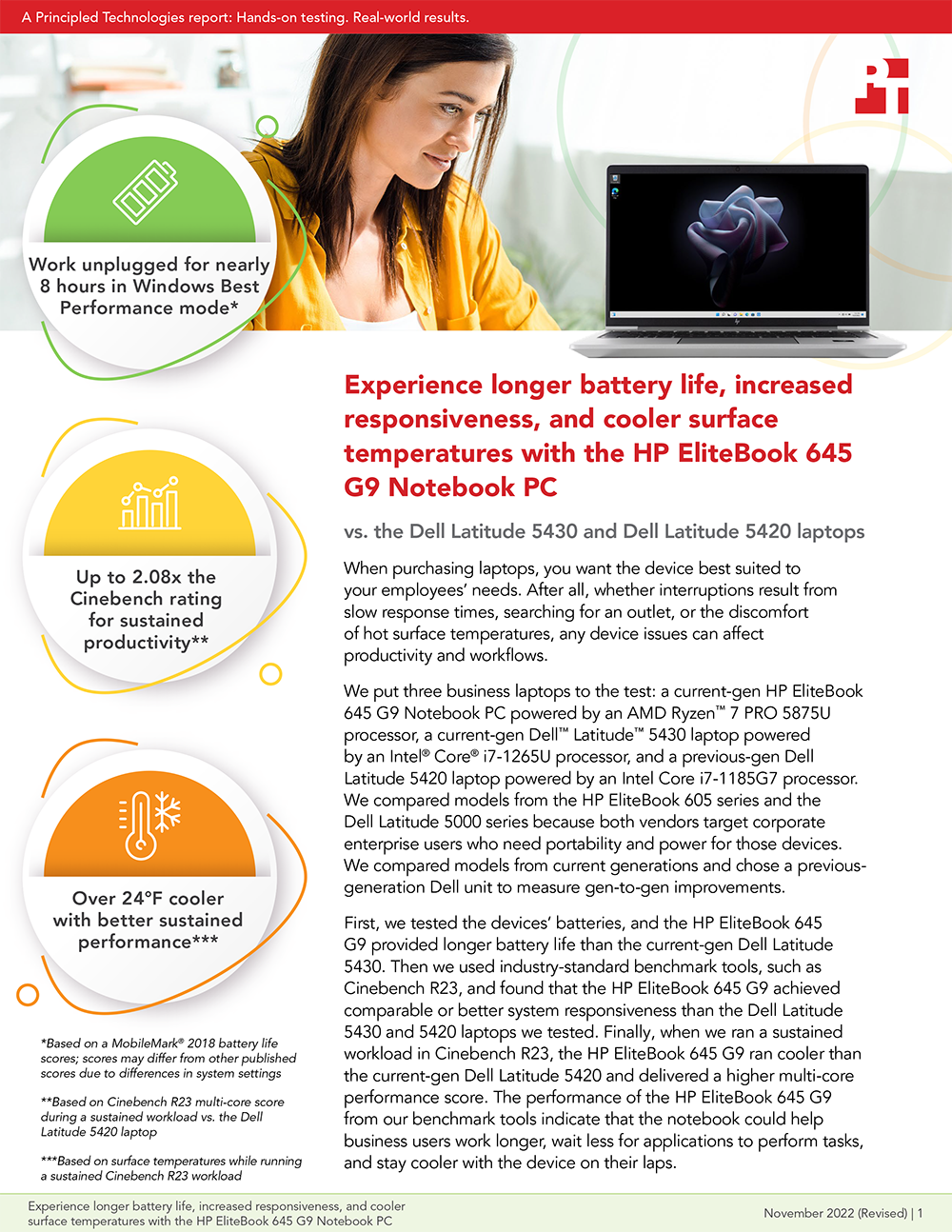

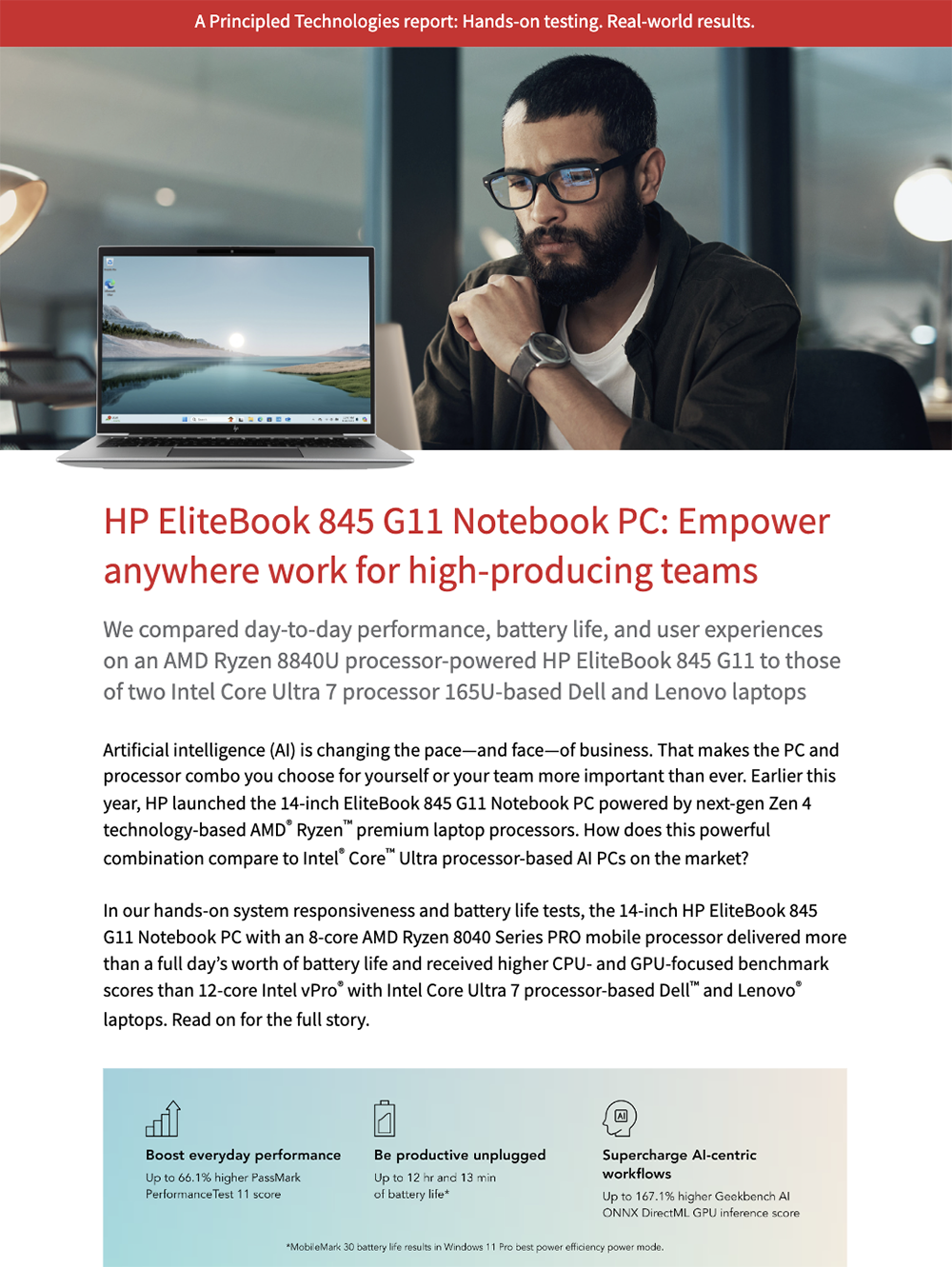

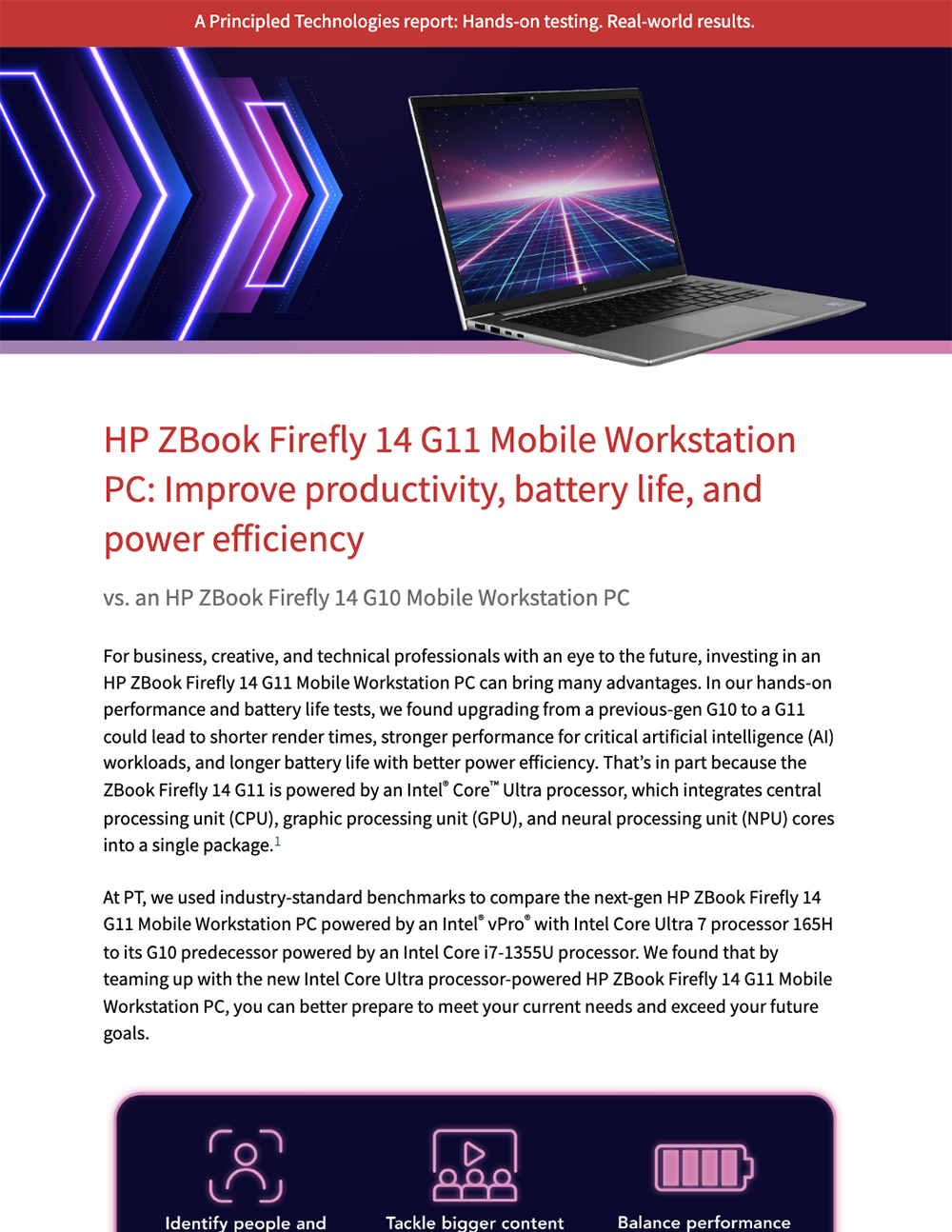

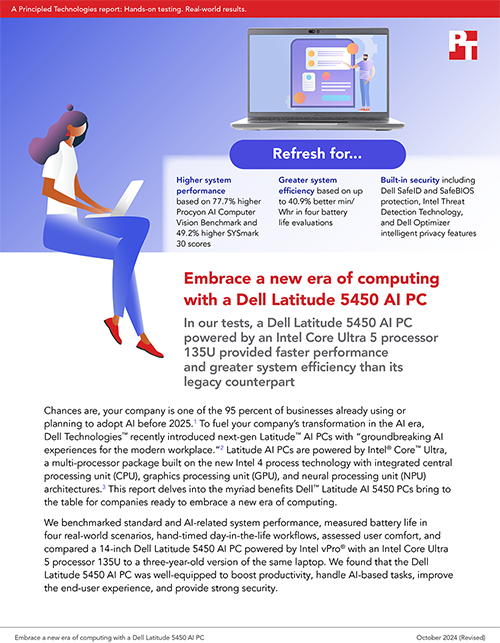

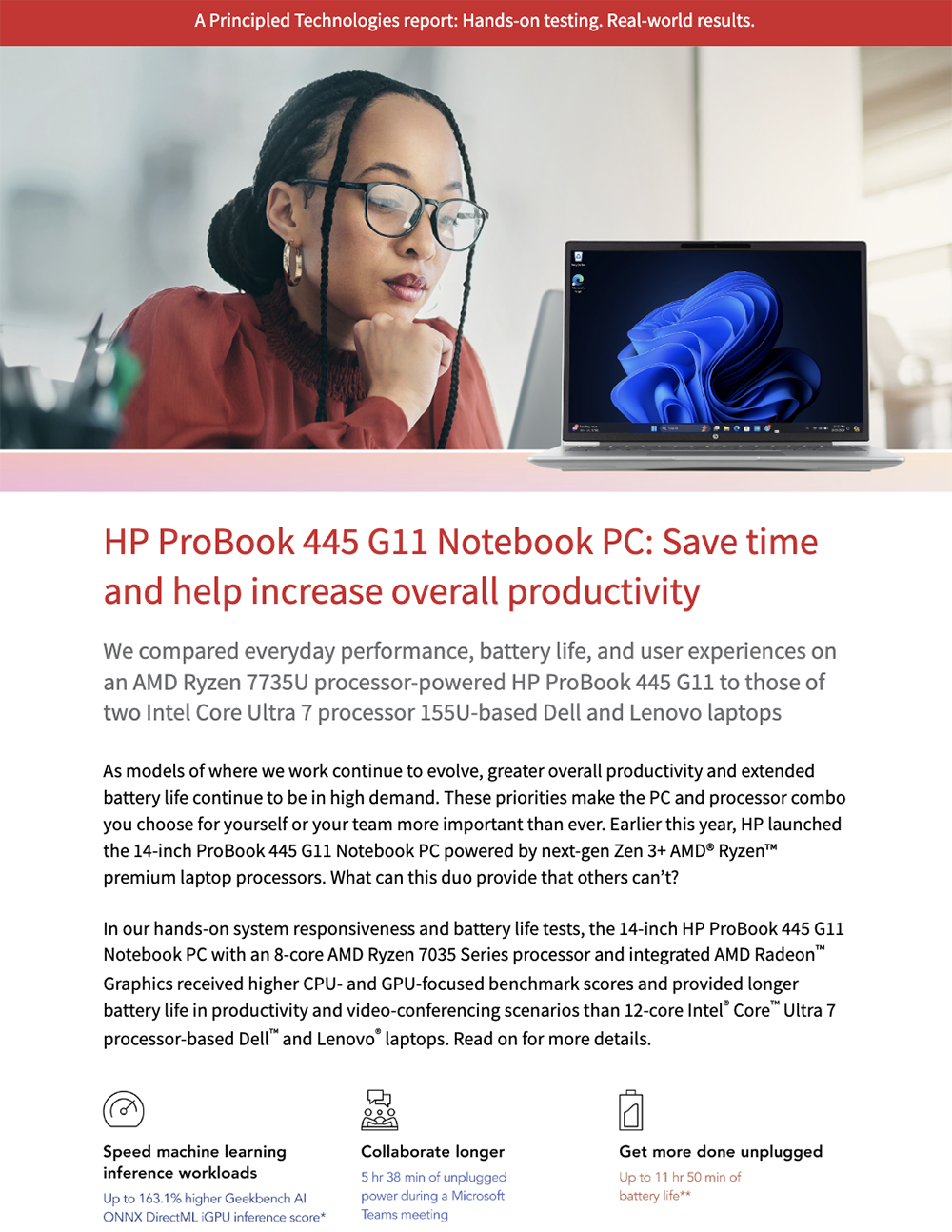

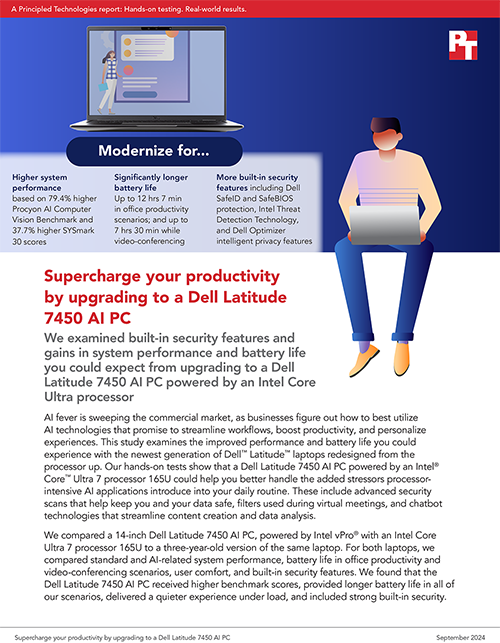

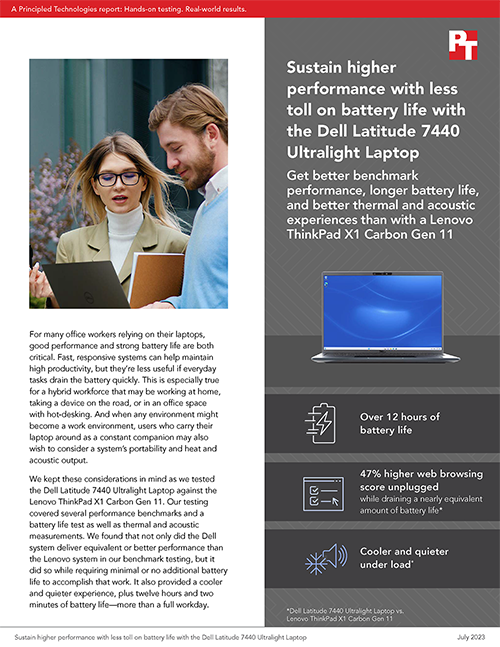

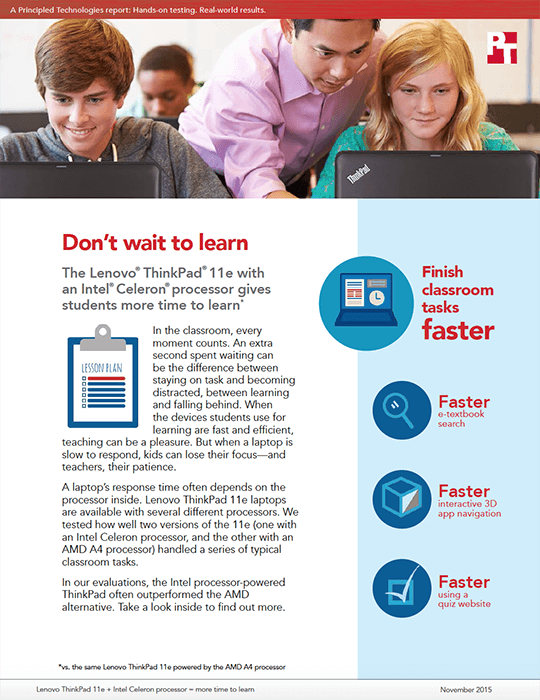

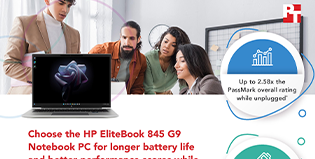

Battery life testing

Bayes (HiBench)

Benchcraft

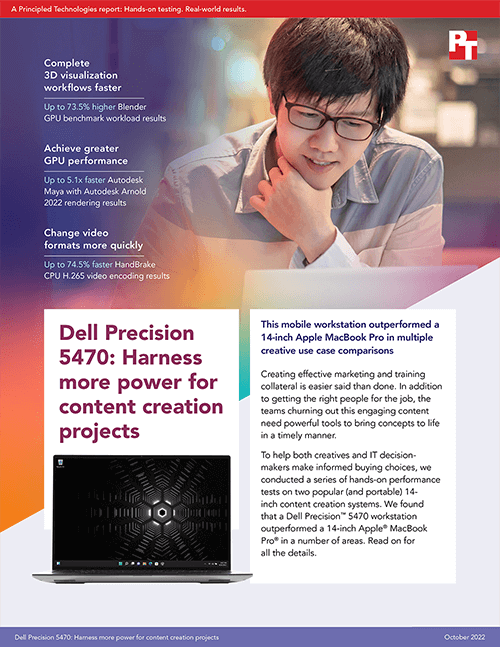

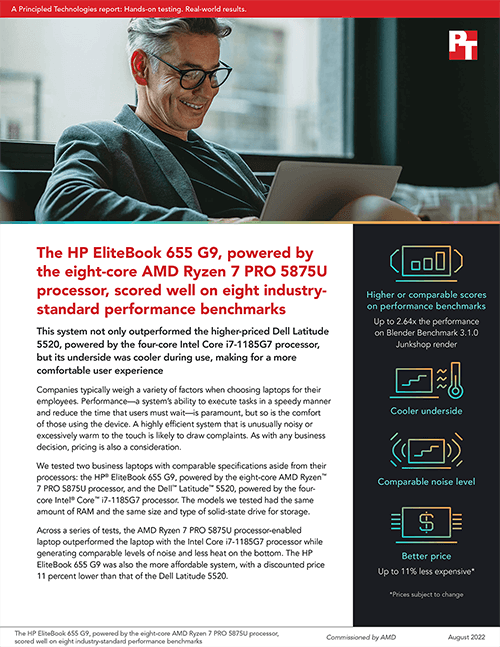

Blender

Cinebench

CrossMark

CrXPRT

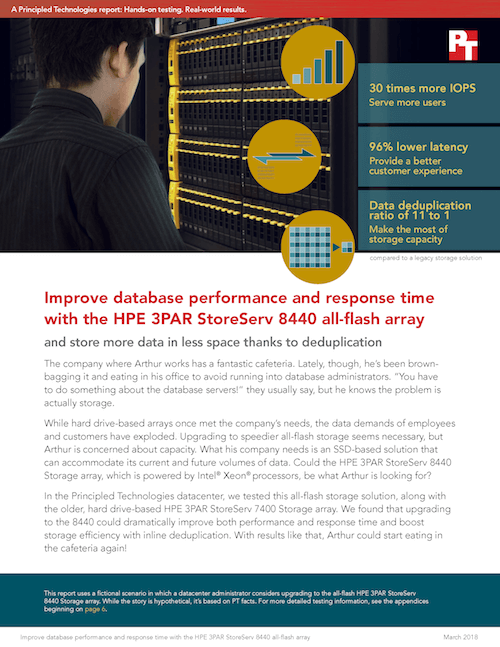

Data reduction testing

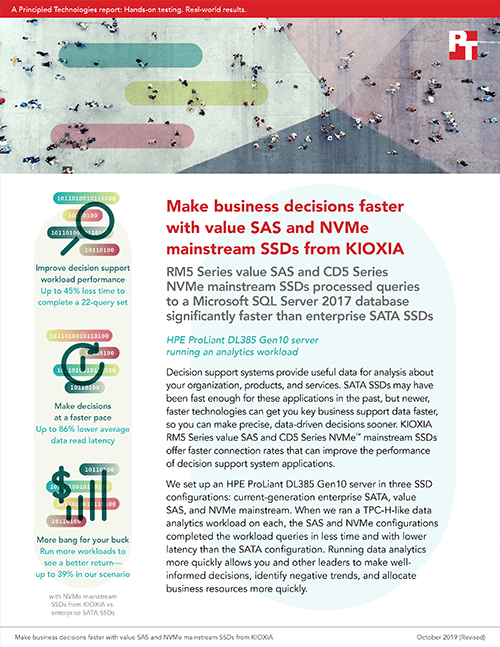

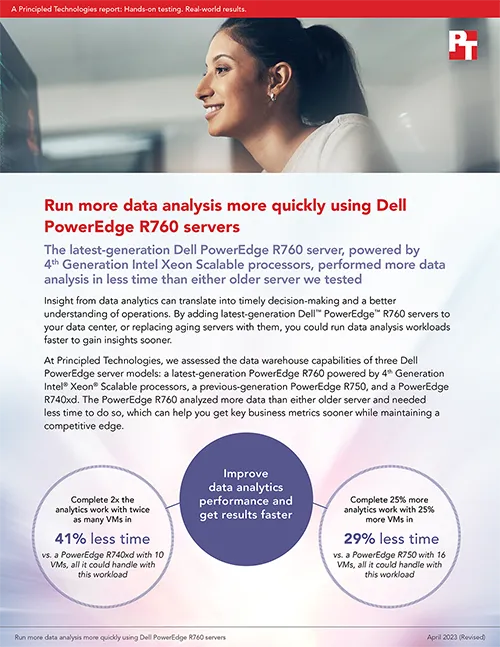

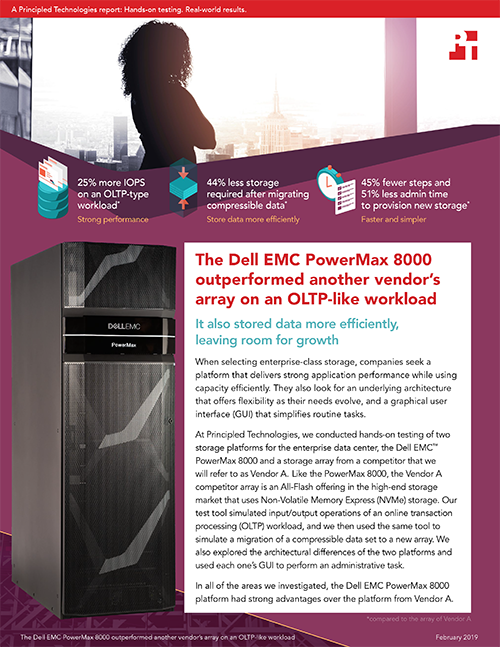

Decision support workload

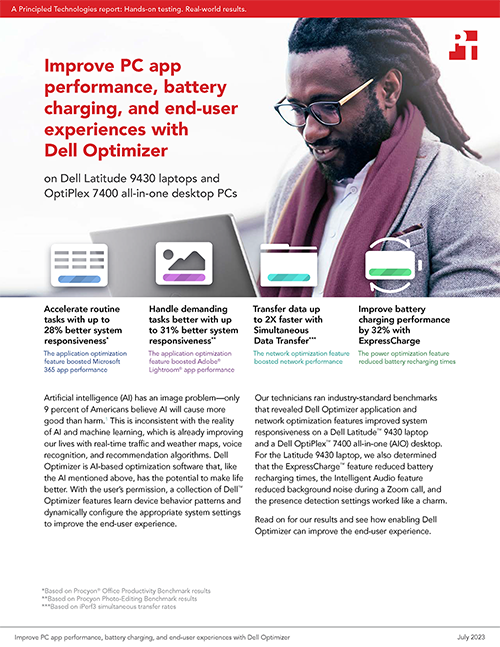

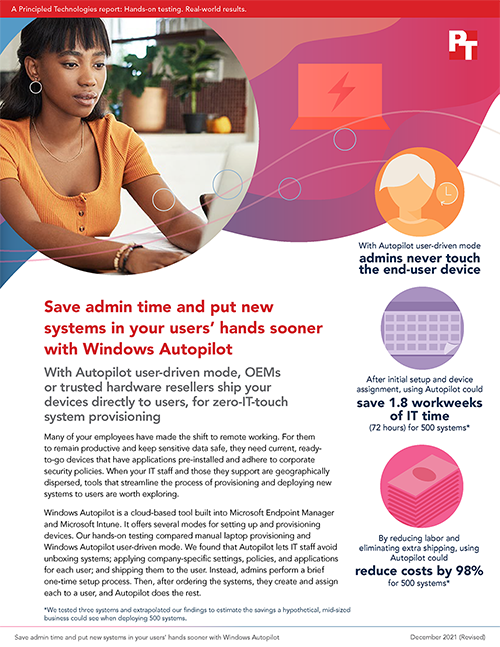

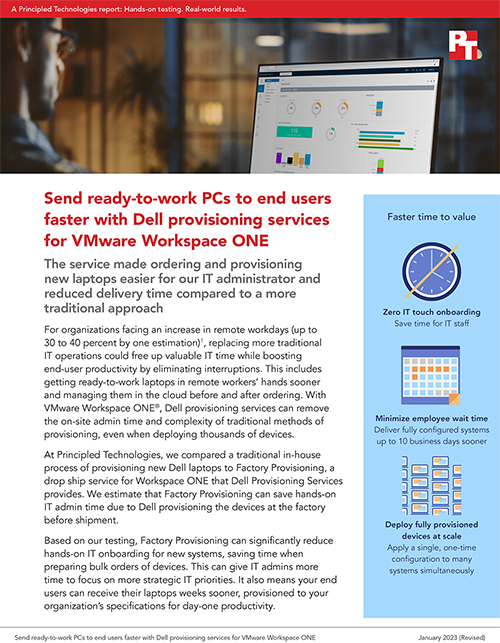

Deployment studies (on end-user devices)

Deployment studies (enterprise)

DISKSPD

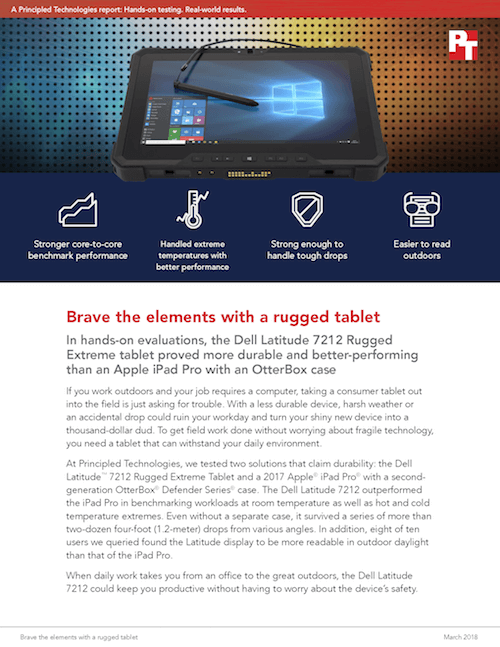

Durability testing (end-user)

Durability testing (enterprise)

Feature comparison

Frametest

Geekbench

HDXPRT

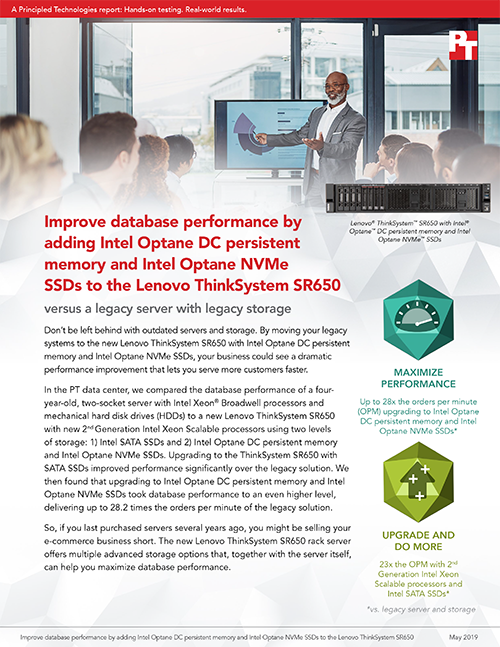

I/O testing with FIO

I/O testing with Iometer

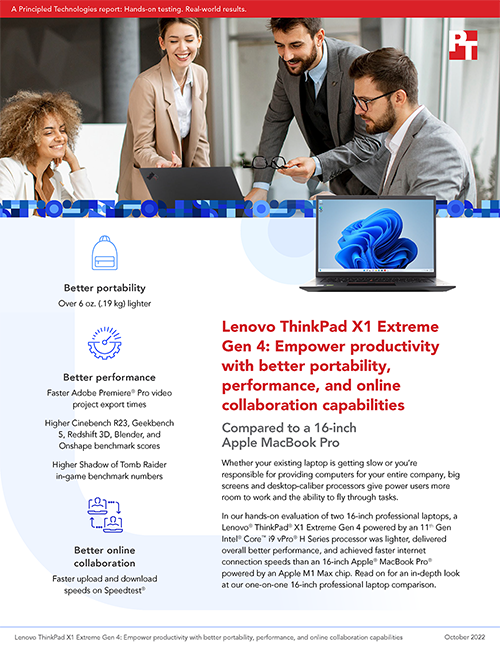

In-game benchmarks

Intel Edge Insights for Industrial

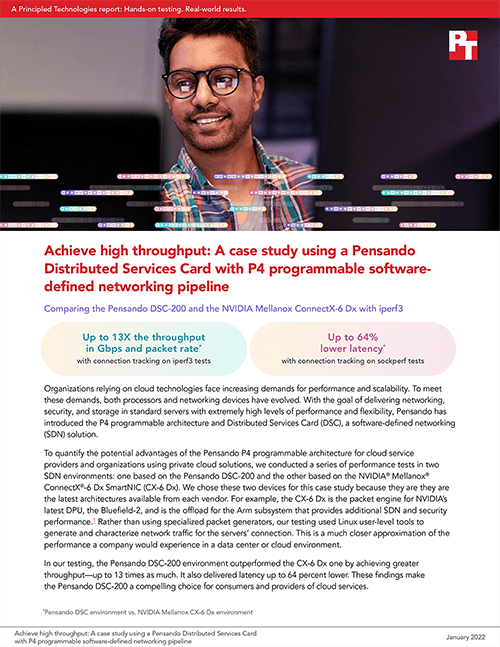

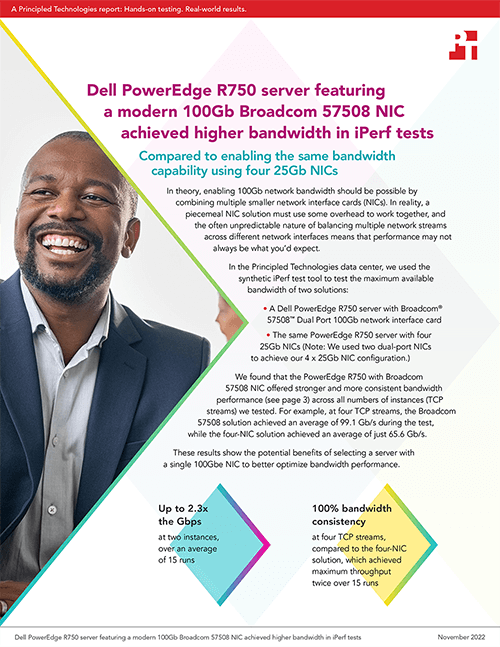

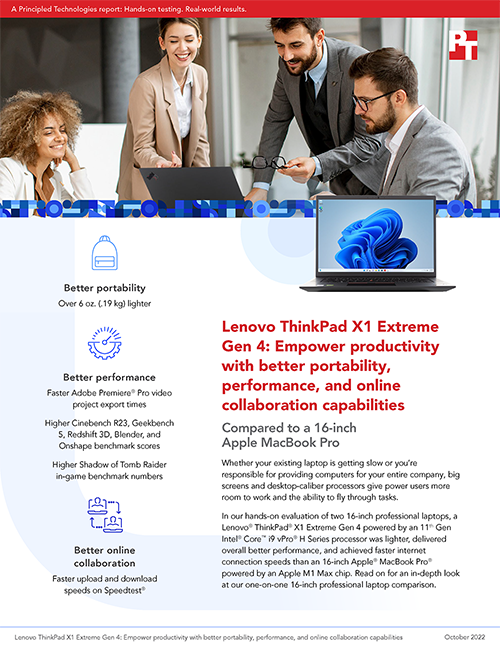

iPerf

JetStream

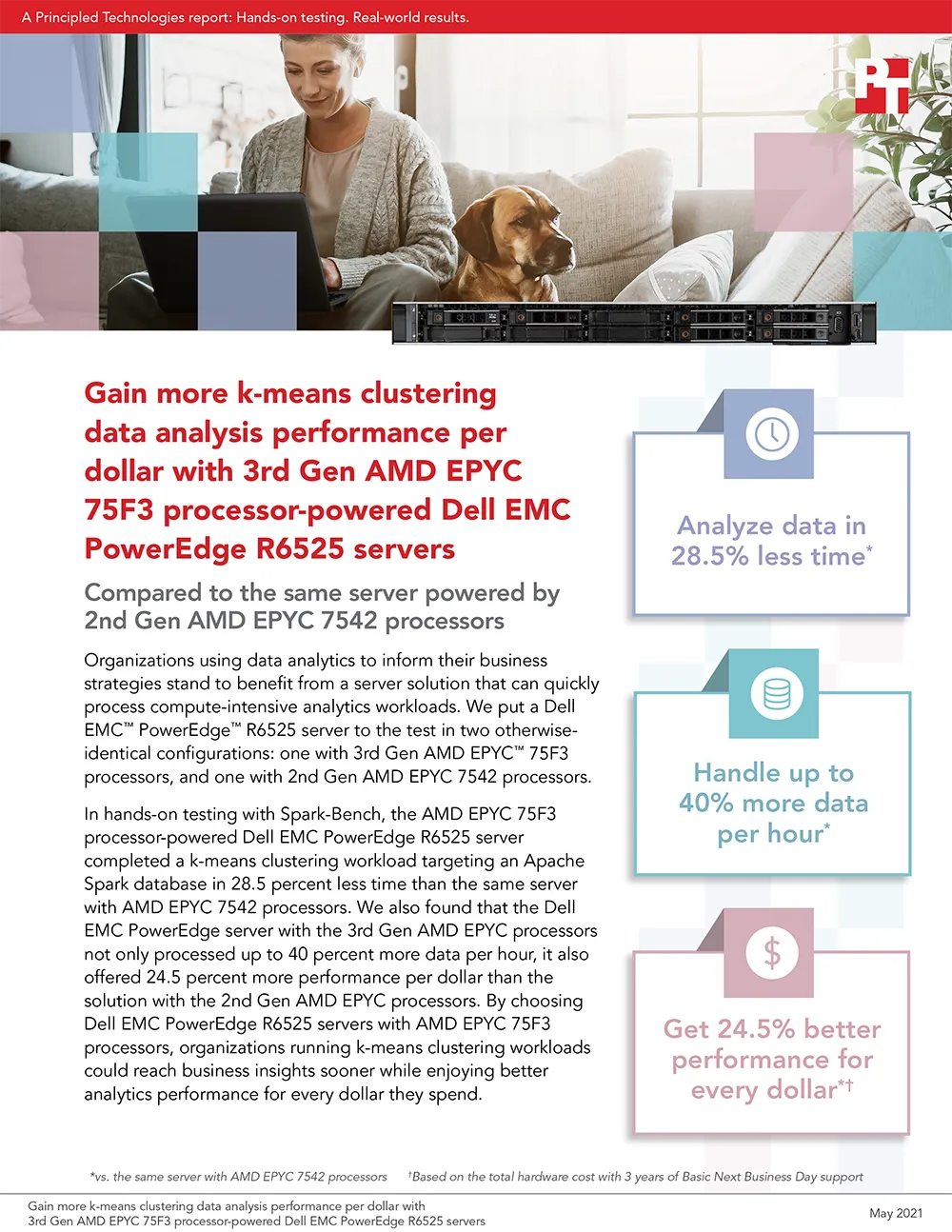

K-means

LAMMPS

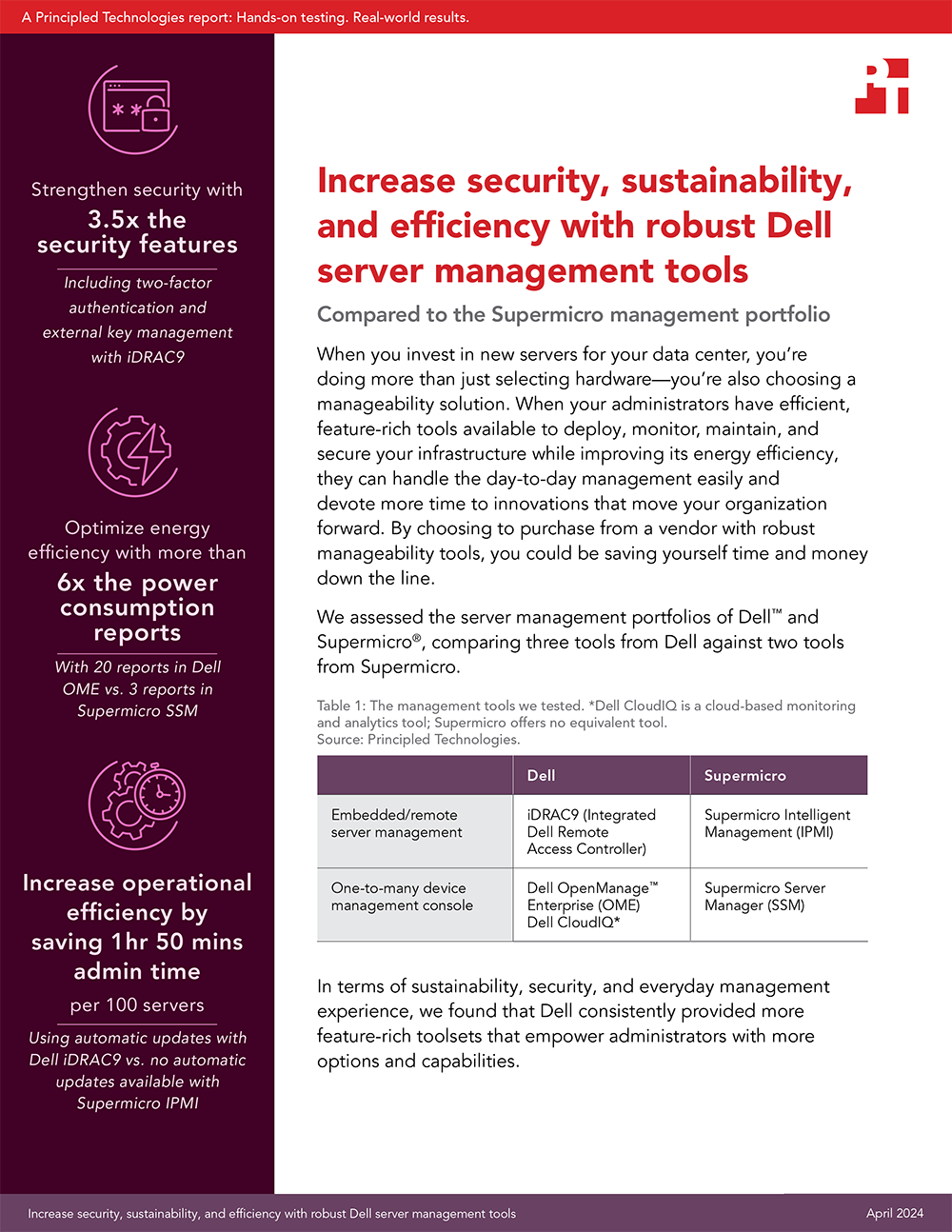

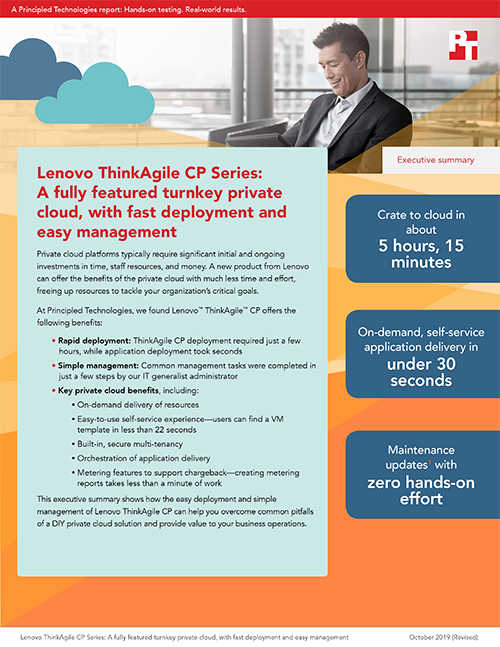

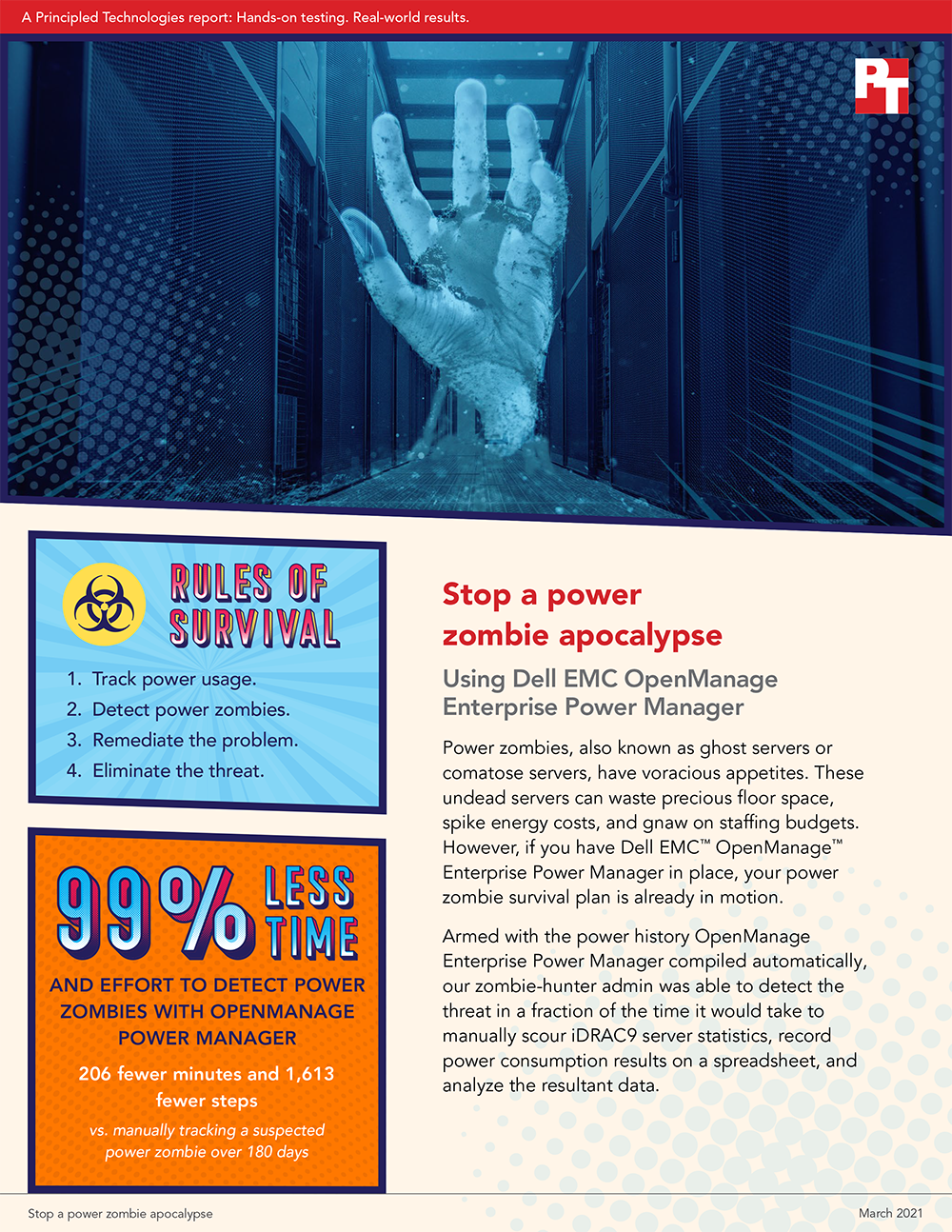

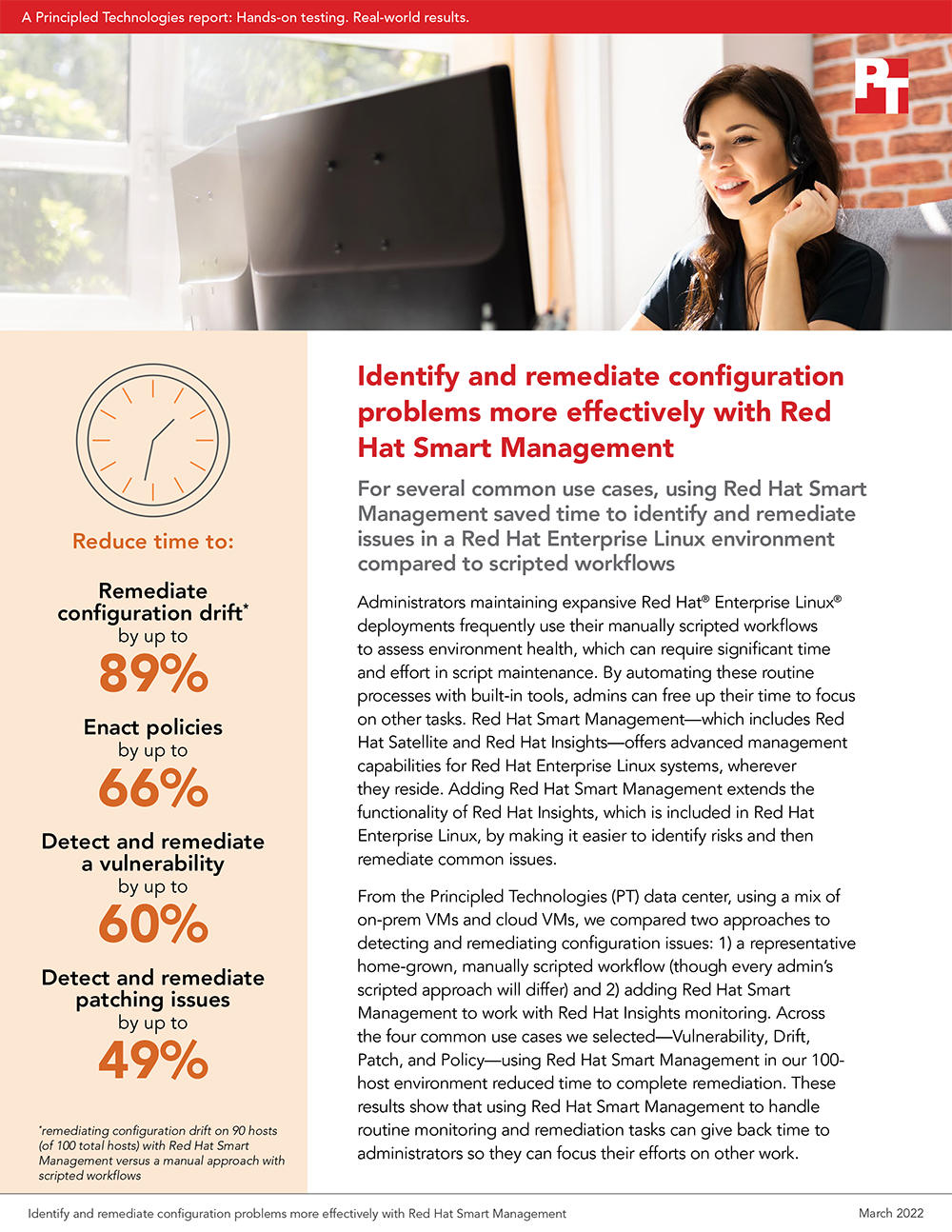

Manageability studies

Maxon Redshift

Microphone quality testing

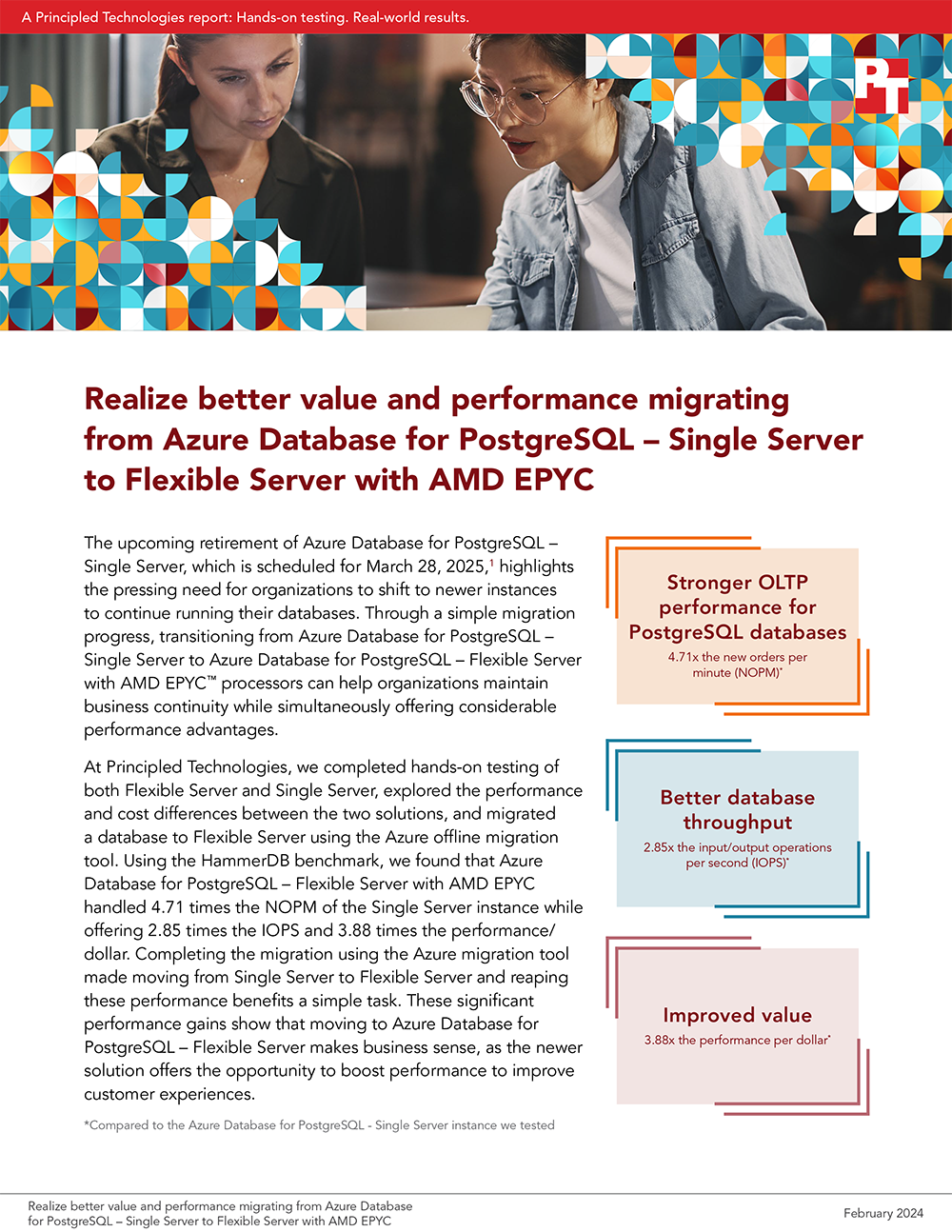

Migration studies

MobileMark

MobileXPRT

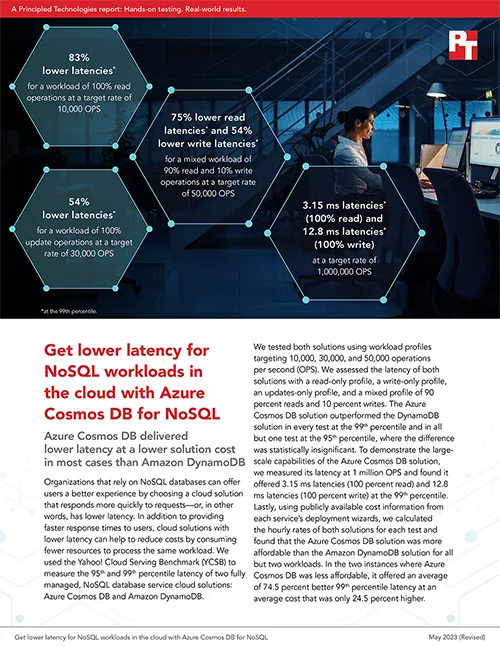

NoSQL with YCSB

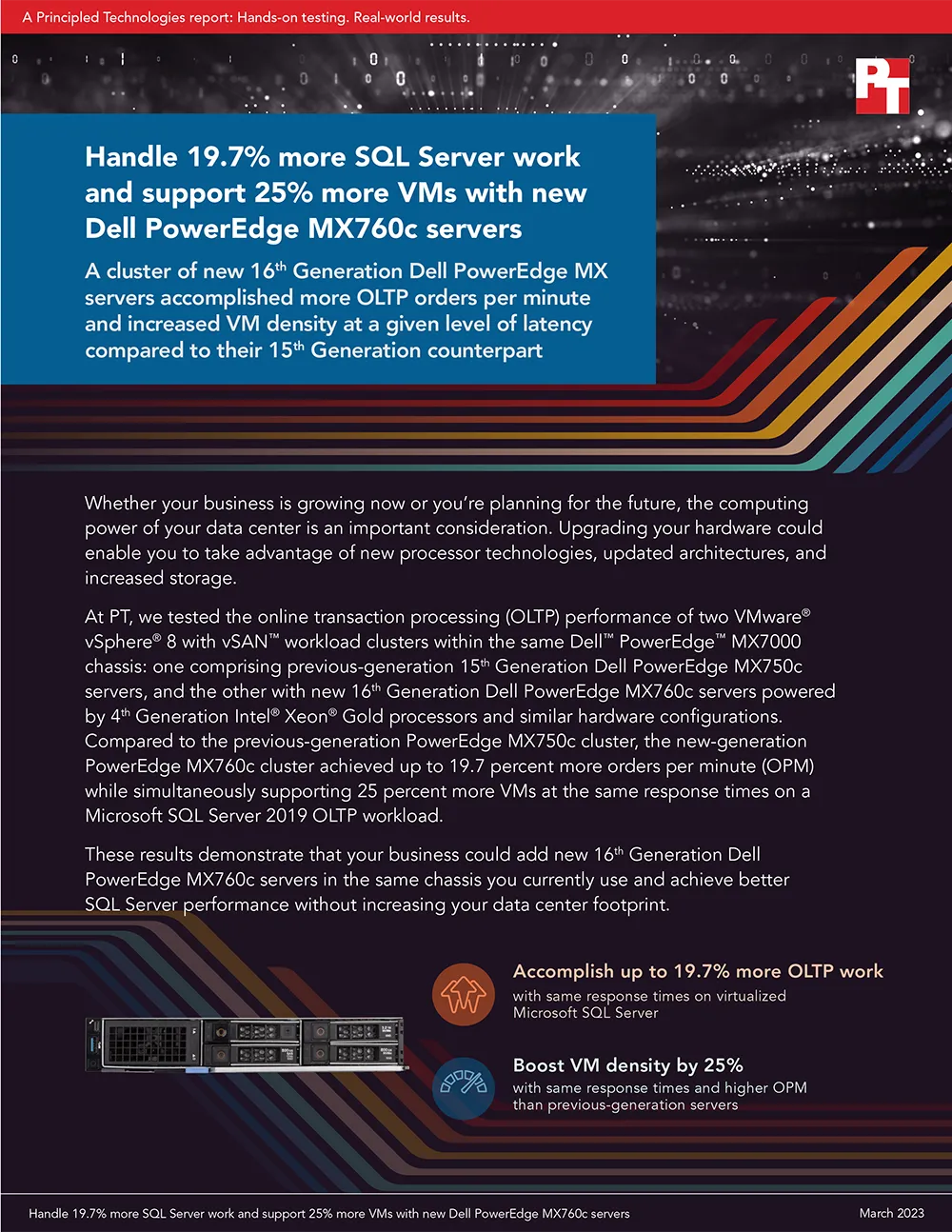

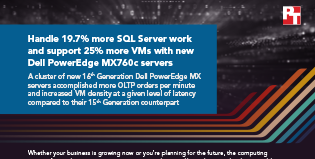

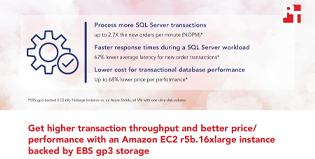

OLTP with DVD Store

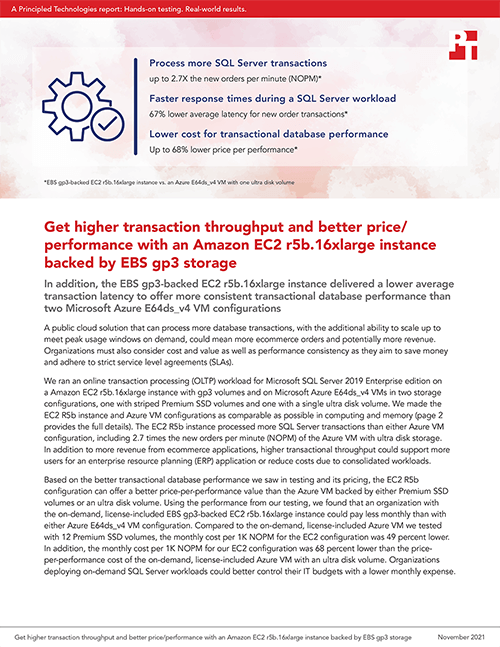

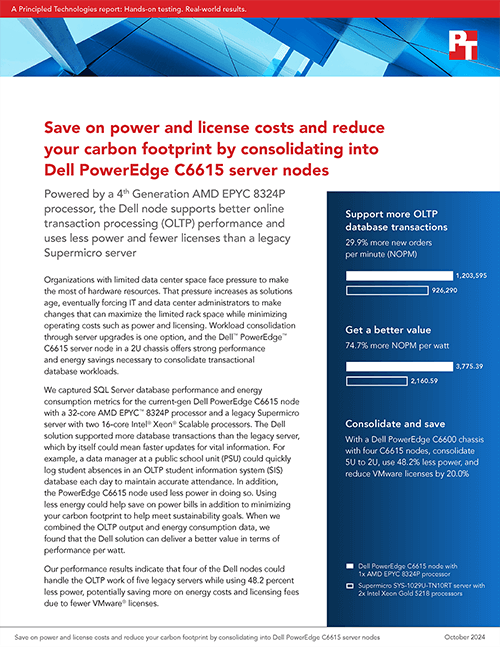

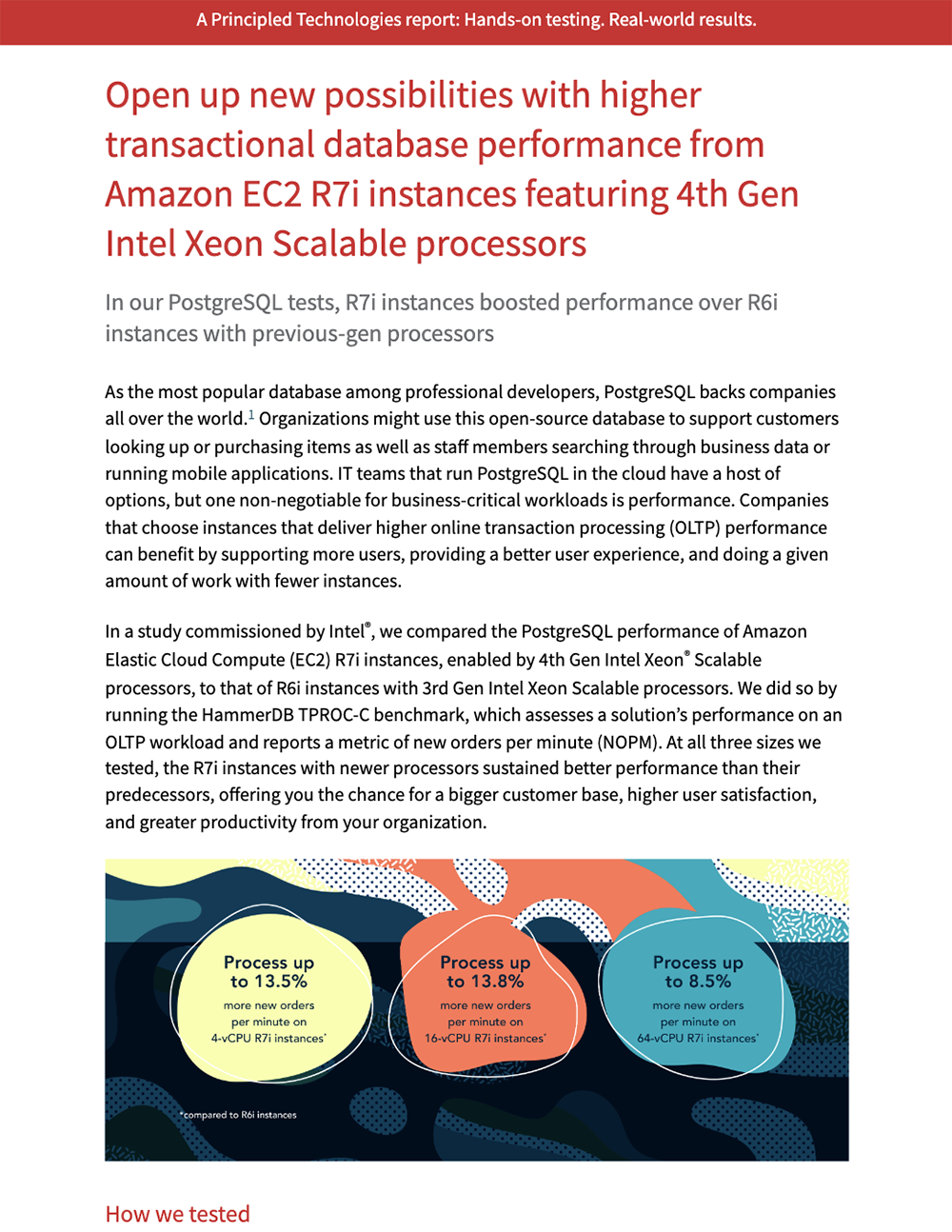

OLTP with TPROC-C

Onshape

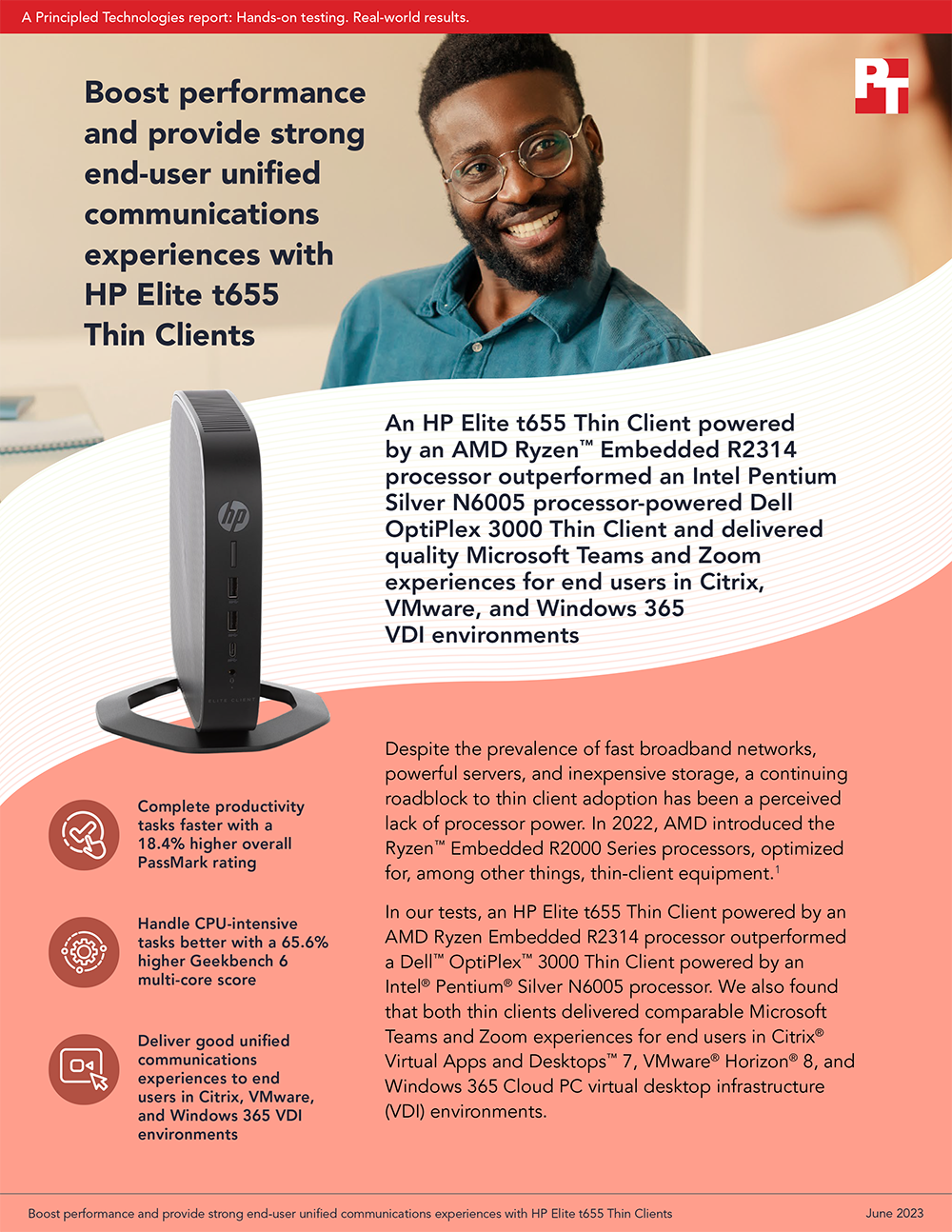

PassMark

PCMark

Power efficiency testing

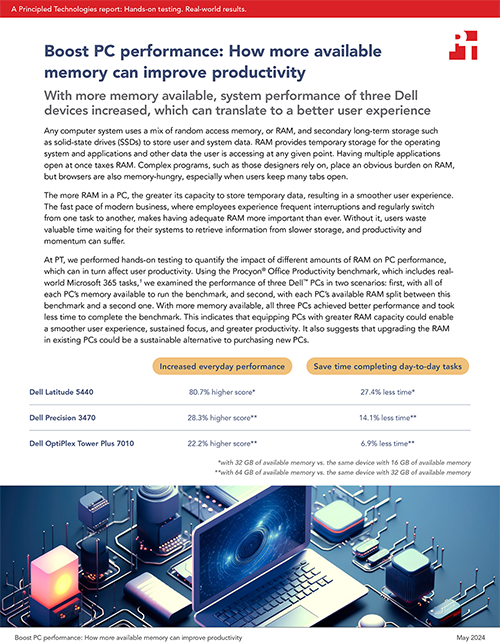

Procyon benchmark suite

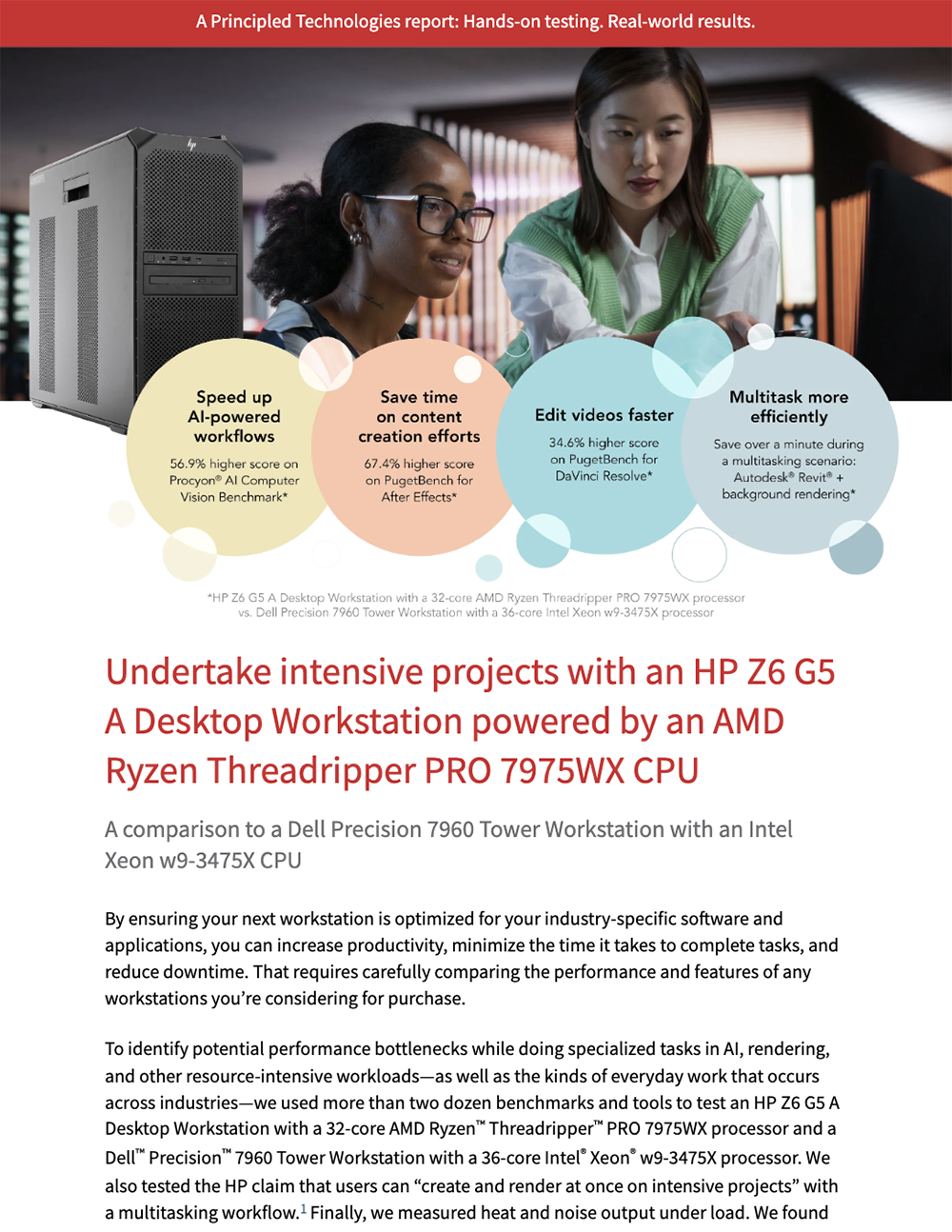

PugetBench

Random Forest

Repairability testing

SLOB

Spark LDA

Spark LR

Speaker loudness & quality testing

SPECapc benchmarks

SPECfp

SPECint

SPECjbb

SPECviewperf

SPECworkstation

Speedometer

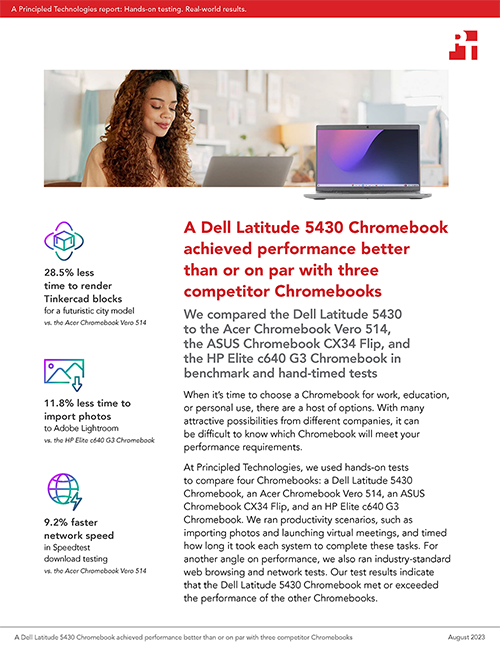

Speedtest

SYSmark

Task-based time testing

TeraSort

Thermal testing (end-user)

Thermal testing (enterprise)

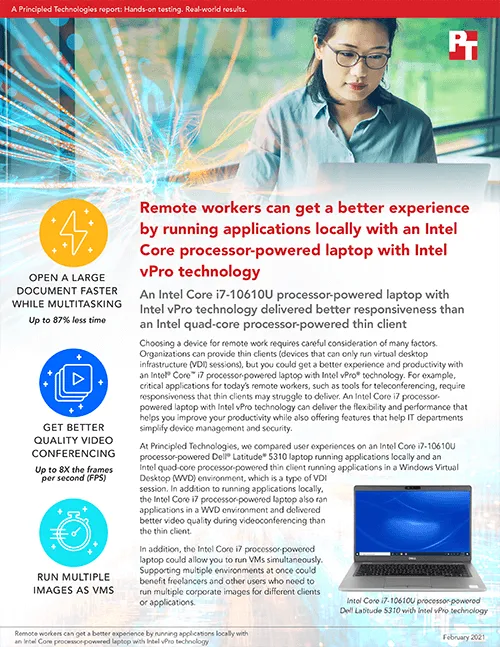

Thin client testing

TouchXPRT

Unigine benchmarks

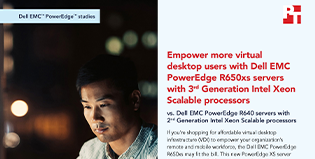

VM and container density testing

VMFleet

Vdbench and HCIBench

VDI testing with Login Enterprise

Video AI

Video encoding

Weathervane

WebXPRT

WordCount

WordPress

Principled Technologies is more than a name: Those two words power all we do. Our principles are our north star, determining the way we work with you, treat our staff, and run our business. And in every area, technologies drive our business, inspire us to innovate, and remind us that new approaches are always possible.