People choose a default web browser for several reasons. Speed is sometimes the deciding factor, but privacy settings, memory load, ecosystem integration, and web app capabilities can also come into play. Whatever the motivation behind a person's go-to browser, the dominance of software-as-a-service (SaaS) computing means that a new update is always right around the corner. In previous blog posts, we've discussed how browser speed can rise or fall significantly after an update, only to swing back in the other direction shortly thereafter. OS-specific optimizations can also affect performance, as with Microsoft Edge on Windows and Google Chrome on Chrome OS.

Windows 11 began rolling out earlier this month, and tech press outlets such as AnandTech and PCWorld have used WebXPRT 3 to evaluate how the new OS, or specific settings within it, affects browser performance. Our own in-house tests, which we discuss below, show a negligible impact on browser performance after updating our test system from Windows 10 to Windows 11. Note, however, that depending on a system's hardware configuration, the impact might be more significant in certain scenarios. For more information about such scenarios, we encourage you to read the PCWorld article on how the default virtualization-based security (VBS) settings in Windows 11 can affect browser performance in some cases.

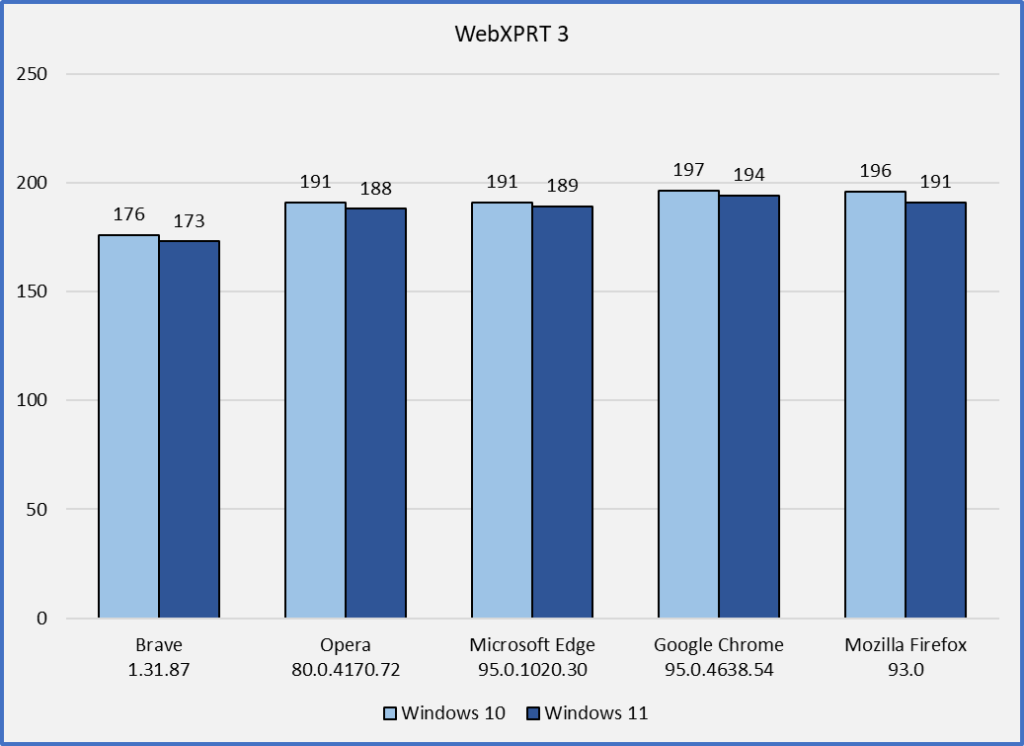

In our comparison tests, we used a Dell XPS 13 7390 with an Intel Core i3-10110U processor and 4 GB of RAM. For the Windows 10 tests, we used a clean Windows 10 Home image updated to version 20H2 (19042.1165). For the Windows 11 tests, we updated the same system to Windows 11 Home version 21H2 (22000.282). On each OS version, we ran WebXPRT 3 three times on the latest versions of five browsers: Brave, Google Chrome, Microsoft Edge, Mozilla Firefox, and Opera. For each browser, the score we report below is the median of the three runs.

In our last round of tests on Windows 10, Firefox was the clear winner. Three of the Chromium-based browsers (Chrome, Edge, and Opera) produced very close scores, and Brave trailed them by about 7 percent. In this round of Windows 10 testing, every browser's score improved slightly, and Google Chrome edged ahead of Firefox.

In our Windows 11 testing, we were interested to find that every browser, without exception, scored slightly lower than it did on Windows 10. However, none of the decreases were statistically significant, and most users performing everyday tasks are unlikely to notice a difference of that size.
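If you'd like to summarize your own runs the same way, taking the median of each browser's runs and then the change between OS versions, here is a minimal Python sketch. The function names are our own illustration, not part of WebXPRT, and the sample scores in the usage note are hypothetical. Keep in mind that a percent change alone doesn't tell you whether a difference is statistically significant.

```python
from statistics import median


def representative_score(runs: list[float]) -> float:
    """Return the median of one browser's WebXPRT 3 scores (e.g., three runs)."""
    if not runs:
        raise ValueError("need at least one run")
    return median(runs)


def percent_change(before: float, after: float) -> float:
    """Percent change from the 'before' score to the 'after' score.

    A negative result means the 'after' score (e.g., Windows 11) is lower.
    """
    if before == 0:
        raise ValueError("'before' score must be nonzero")
    return (after - before) / before * 100.0
```

For example, with hypothetical runs of 205, 215, and 210, `representative_score([205, 215, 210])` returns 210, and `percent_change(210, 205)` returns about -2.4.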

Have you observed any significant differences in WebXPRT 3 scores after upgrading to Windows 11? If so, let us know!

Justin