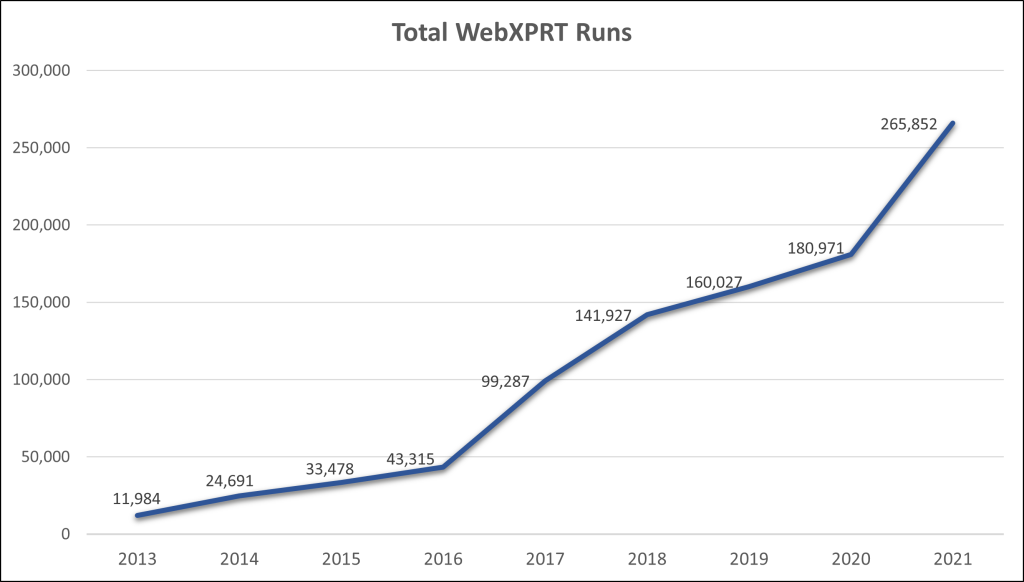

At over 412,000 runs and counting, WebXPRT is our most popular benchmark. Since its first release in 2013, device manufacturers, developers, tech journalists, and consumers have embraced it because it’s easy to run, it runs on almost anything with a web browser, and it evaluates device performance using the types of web-based tasks that people are likely to encounter on a daily basis.

With each new version of WebXPRT, we analyze browser development trends to make sure the test’s underlying web technologies and workload scenarios adequately reflect the ways people are using their browsers to work and play. BenchmarkXPRT Development Community members can play an important part in that process by sending us feedback on existing tests and suggestions for new workloads to include.

For example, when we released WebXPRT 3, we updated the photo workloads with new images and a deep learning task used for image classification. We also added an optical character recognition task in the Encrypt Notes and OCR Scan workload, and combined part of the DNA Sequence Analysis scenario with a writing sample/spell check scenario to simulate online homework in an all-new Online Homework workload.

Consider for a moment what an ideal future version of WebXPRT would look like for you. Are there new web technologies or workload scenarios that you would like to see? Would you be interested in an associated battery life test? Should we include experimental tests? We’re interested in what you have to say, so please feel free to contact us with your thoughts or questions.

If you’re just now learning about WebXPRT, we offer several resources to help you better understand the benchmark and its range of uses. For a general overview of why WebXPRT matters, watch our video titled “What is WebXPRT and why should I care?” To read more about the details of the benchmark’s development and structure, check out the Exploring WebXPRT 3 white paper. To see WebXPRT 2015 and WebXPRT 3 scores from a wide range of processors, visit the WebXPRT 3 Processor Comparison Chart.

We look forward to hearing from you!

Justin