PCWorld recently published the results of a head-to-head browser performance comparison between Google Chrome, Microsoft Edge, Mozilla Firefox, and Opera. As we’ve noted about similar comparisons, no single browser was the fastest in every test. Browser speed sounds like a straightforward metric, but the reality is complex.

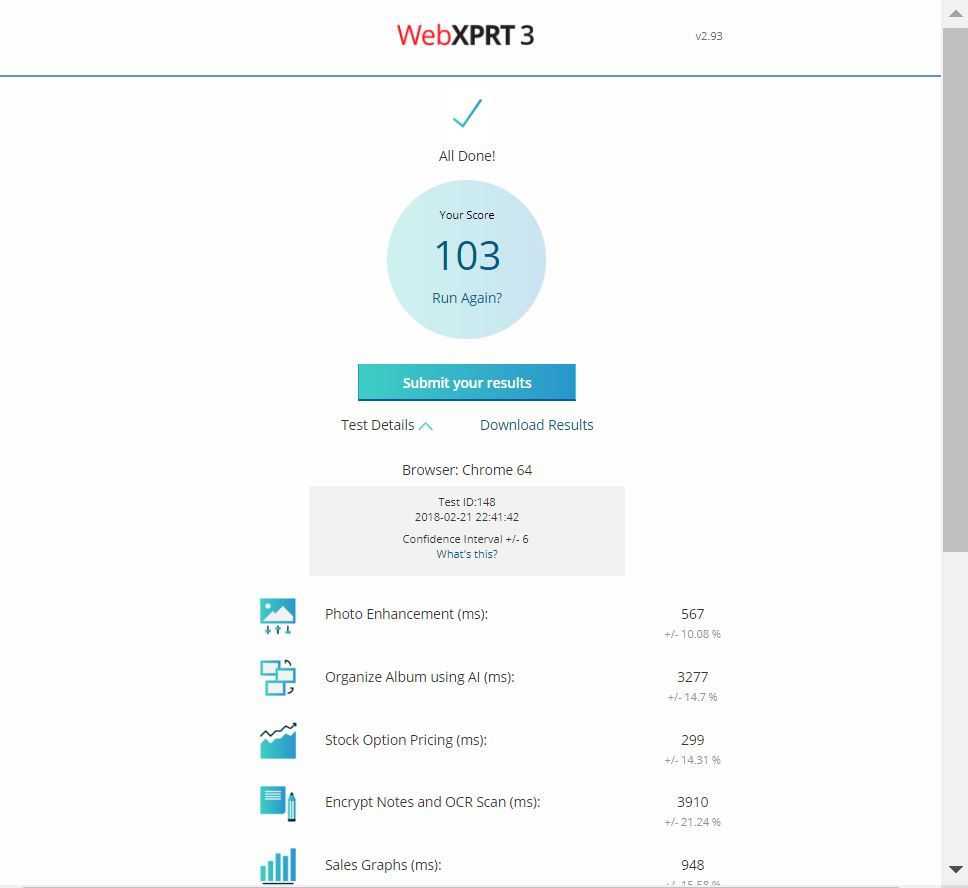

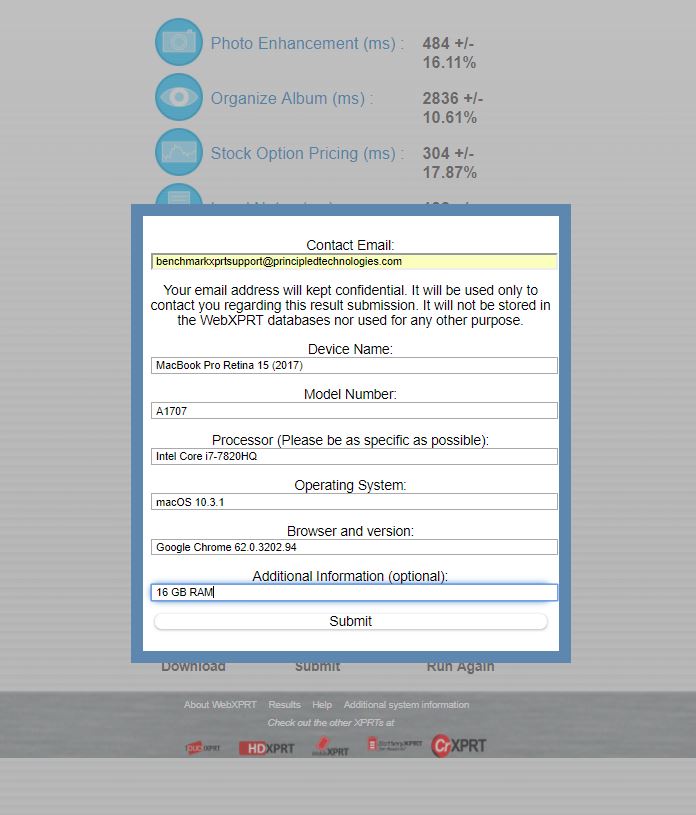

For the comparison, PCWorld used three JavaScript-centric test suites (JetStream, SunSpider, and Octane), one benchmark that simulates user actions (Speedometer), a few in-house tests of their own design, and one benchmark that simulates real-world web applications (WebXPRT). Edge came out on top in JetStream and SunSpider, Opera won in Octane and WebXPRT, and Chrome had the best results in Speedometer and PCWorld’s custom workloads.

The reason that the benchmarks rank the browsers so differently is that each one has a unique emphasis and tests a specific set of workloads and technologies. Some focus on very low-level JavaScript tasks, some test additional technologies such as HTML5, and some are designed to identify strengths or weakness by stressing devices in unusual ways. These approaches are all valid, and it’s important to understand exactly what a given score represents. Some scores reflect a very broad set of metrics, while others assess a very narrow set of tasks. Some scores help you to understand the performance you can expect from a device in your everyday life, and others measure performance in scenarios that you’re unlikely to encounter. For example, when Eric discussed a similar topic in the past, he said the tests in JetStream 1.1 provided information that “can be very useful for engineers and developers, but may not be as meaningful to the typical user.”

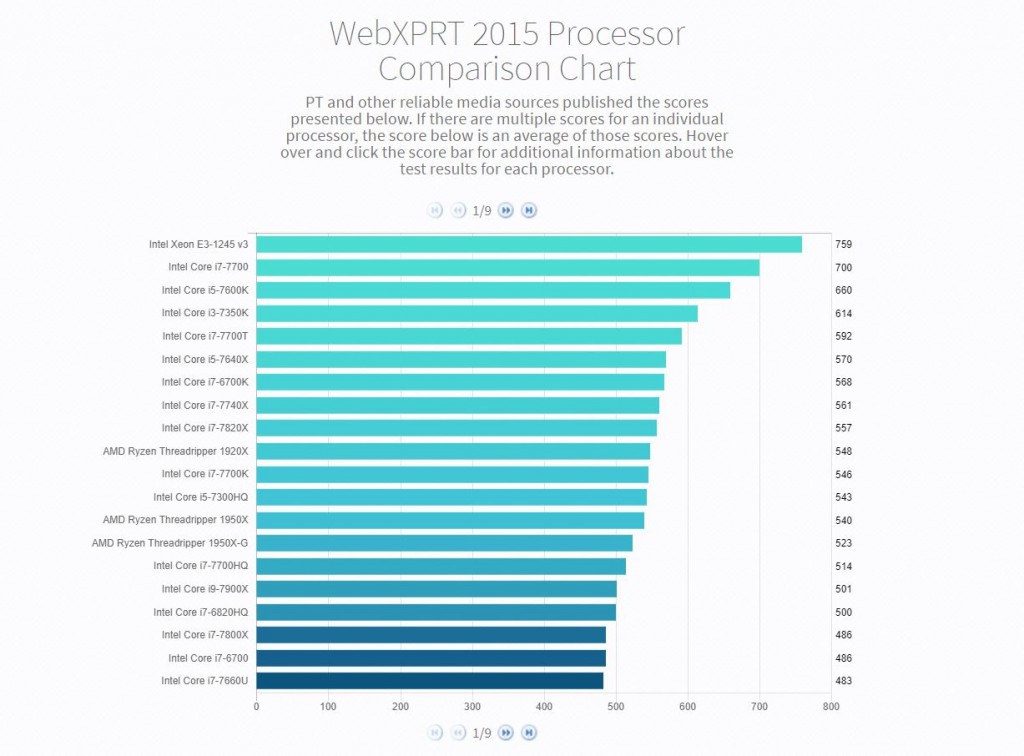

As we do with all the XPRTs, we designed WebXPRT to test how devices handle the types of real-world tasks consumers perform every day. While lab techs, manufacturers, and tech journalists can all glean detailed data from WebXPRT, the test’s real-world focus means that the overall score is relevant to the average consumer. Simply put, a device with a higher WebXPRT score is probably going to feel faster to you during daily use than one with a lower score. In today’s crowded tech marketplace, that piece of information provides a great deal of value to many people.

What are your thoughts on browser testing? We’d love to hear from you.

Justin