Around the beginning of each new year, we like to take the opportunity to look back and summarize the XPRT highlights from the previous year. Readers of our newsletter are familiar with the stats and updates we include each month, but for our blog readers who don’t receive the newsletter, we’ve compiled highlights from 2023 below.

Benchmarks

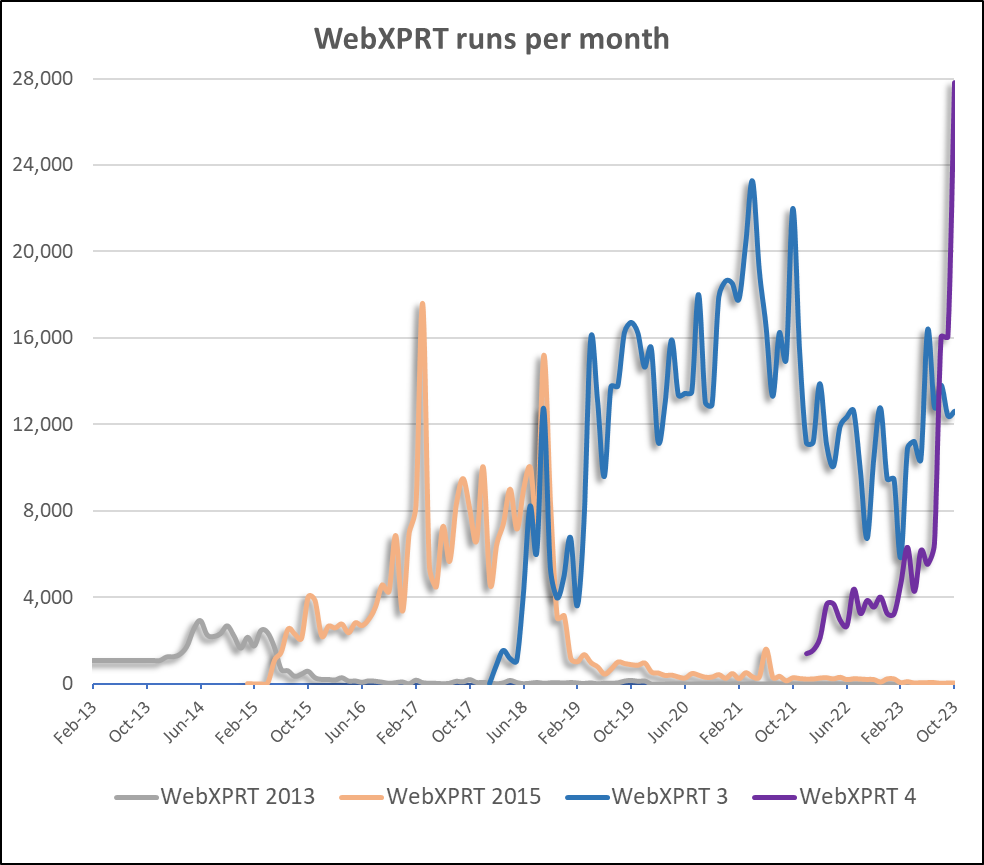

In March, we celebrated the 10-year anniversary of WebXPRT! WebXPRT 4 has now taken the lead as the most commonly used version of WebXPRT, even as the overall number of runs has continued to grow.

XPRTs in the media

Journalists, advertisers, and analysts referenced the XPRTs thousands of times in 2023. It’s always rewarding to know that the XPRTs have proven to be useful and reliable assessment tools for technology publications around the world. Media sites that used the XPRTs in 2023 include 3DNews (Russia), AnandTech, Benchlife.info (China), CHIP.pl (Poland), ComputerBase (Germany), eTeknix, Expert Reviews, Gadgetrip (Japan), Gadgets 360, Gizmodo, Hardware.info, IT168.com (China), ITC.ua (Ukraine), ITWorld (Korea), iXBT.com (Russia), Lyd & Bilde (Norway), Notebookcheck, Onchrome (Germany), PCMag, PCWorld, QQ.com (China), Tech Advisor, TechPowerUp, TechRadar, Tom’s Guide, TweakTown, Yesky.com (China), and ZDNet.

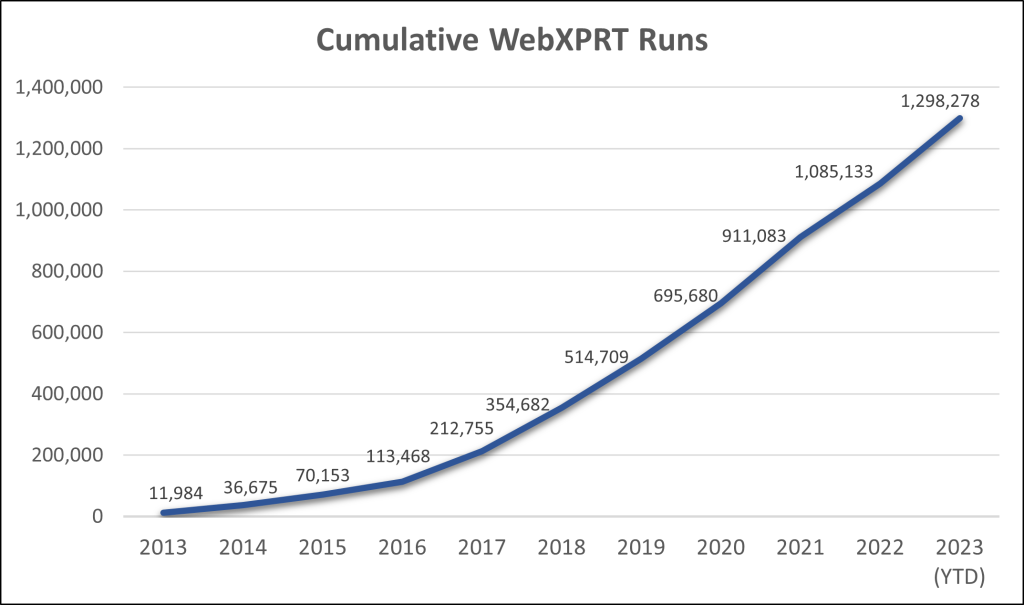

Downloads and confirmed runs

In 2023, we had more than 16,800 benchmark downloads and 296,800 confirmed runs. Users have run our most popular benchmark, WebXPRT, more than 1,376,500 times since its debut in 2013! WebXPRT continues to be a go-to, industry-standard performance benchmark for OEM labs, vendors, and leading tech press outlets around the globe.

Trade shows

In January, Justin attended the 2023 Consumer Electronics Show (CES) in Las Vegas. In March, Mark attended Mobile World Congress (MWC) 2023 in Barcelona. You can view Justin’s recap of CES here and Mark’s thoughts from MWC here.

We’re thankful for everyone who used the XPRTs and sent questions and suggestions throughout 2023. We’re excited to see what’s in store for the XPRTs in 2024!

Justin