It’s been a while since we last discussed the process for submitting WebXPRT results to be considered for publication in the WebXPRT results browser and the WebXPRT Processor Comparison Chart, so we thought we’d offer a refresher.

Unlike sites that publish all results they receive, we hand-select results from internal lab testing, user submissions, and reliable tech media sources. In each case, we evaluate whether the score is consistent with general expectations. For sources outside of our lab, that evaluation includes confirming that there is enough detailed system information to help us determine whether the score makes sense. We do this for every score on the WebXPRT results page and the general XPRT results page. All WebXPRT results we publish automatically appear in the processor comparison chart as well.

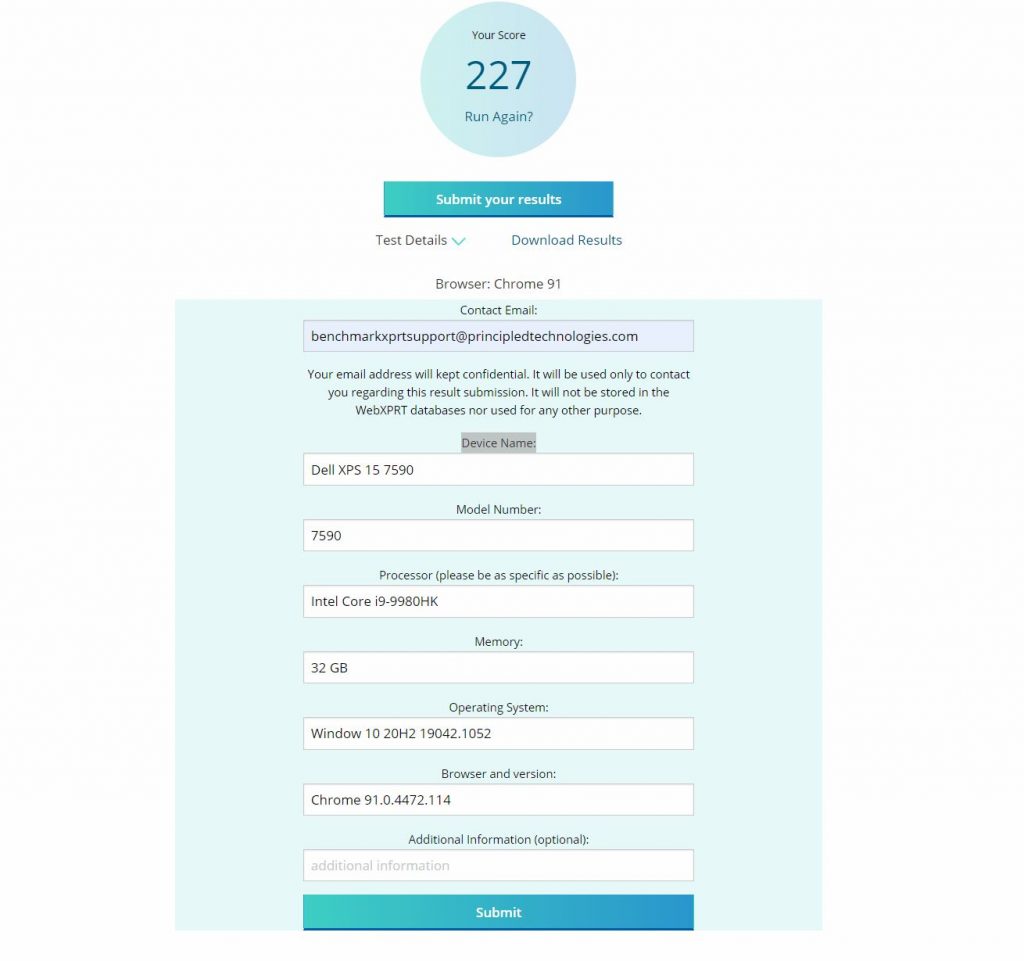

Submitting your score is quick and easy. At the end of the WebXPRT test run, click the Submit your results button below the overall score, complete the short submission form, and click Submit again. The screenshot below shows how the form would look if I submitted a score at the end of a WebXPRT 3 run on my personal system.

After you submit your score, we’ll contact you to confirm how we should display the source. You can choose one of the following:

- Your first and last name

- “Independent tester” (for those who wish to remain anonymous)

- Your company’s name, provided that you have permission to submit the result in their name. To use a company name, we ask that you provide a valid company email address.

We will not publish any additional information about you or your company without your permission.

We look forward to seeing your score submissions, and if you have suggestions for the processor chart or any other aspect of the XPRTs, let us know!

Justin