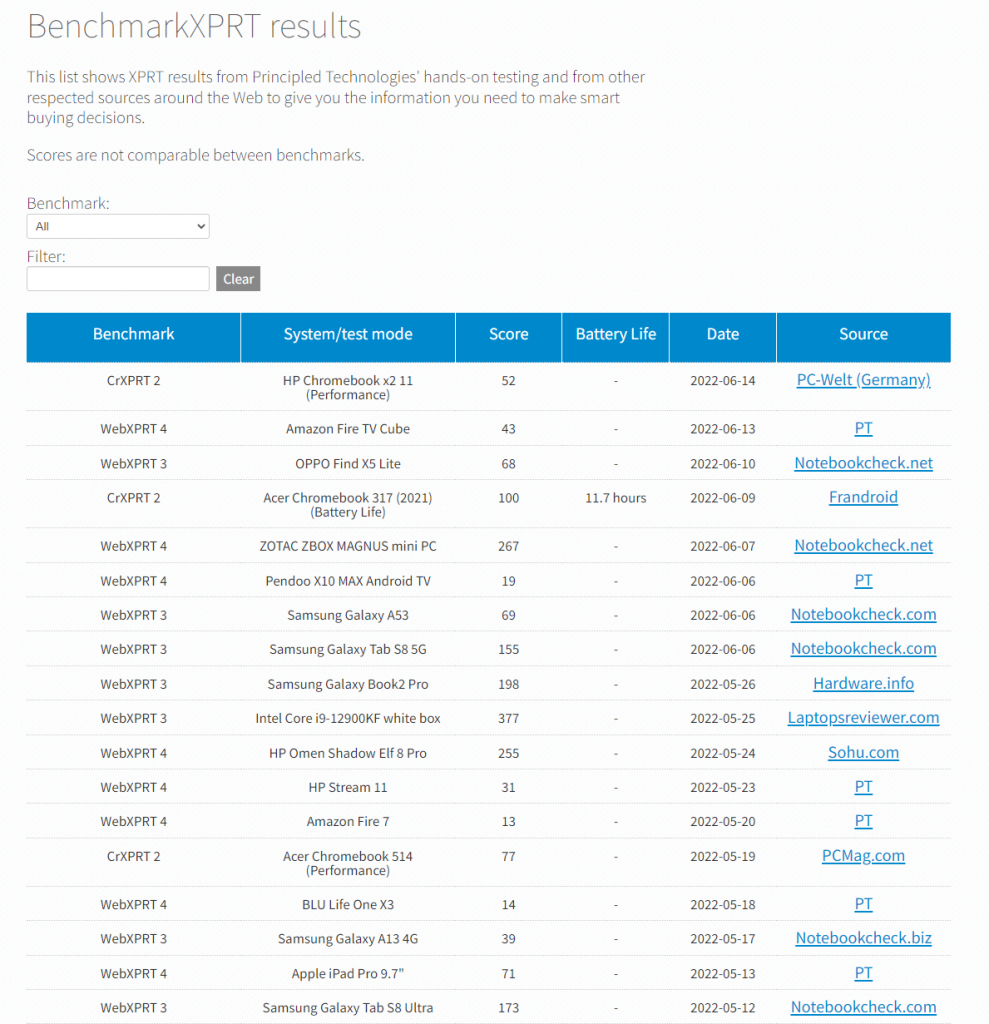

The WebXPRT 5 Preview has been available for only a few weeks, but users have already started submitting test results for us to review for publication in the WebXPRT 5 Preview results viewer. We’re excited to receive those submissions, but we know that some of our readers are new to WebXPRT or have never submitted a test result. In today’s post, we’ll cover the straightforward process of submitting your WebXPRT 5 Preview test results for publication in the viewer.

Unlike sites that automatically publish every submission, we publish only results that meet a set of evaluation criteria. Those results can come from OEM labs, third-party labs, tech media sources, or independent user submissions. What’s important to us is that scores are consistent with general expectations and that each submission from a source outside our labs and data centers includes enough detailed system information for us to determine whether the score makes sense. That said, if your scores differ from what you see in our database, please don’t hesitate to send them to us; we may be able to work it out together.

The actual result submission process is simple. On the end-of-test results page that displays after a test run, click the Submit your results button below the overall score. Then, complete the short submission form that pops up, and click Submit.

When filling in the system information fields in the submission form, please be as specific as possible. Detailed device information helps us assess whether individual scores represent valid test runs.

That’s all there is to it!

Figure 1 below shows the end-of-test results screen and the Submit your results button below the overall score.

Figure 2 below shows how the results submission form would look if I filled in the necessary information and submitted a score at the end of a recent WebXPRT 5 Preview run on one of the systems here in our lab.

After you submit your test result, we’ll review the information. If the test result meets the evaluation criteria, we’ll contact you to confirm how we should display its source in our database. For that purpose, you can choose one of the following:

- Your first and last name

- “Independent tester” (if you wish to remain anonymous)

- Your company’s name, if you have permission to submit the result under that name. In that case, please provide a valid email address at that company.

As always, we will not publish any additional information about you or your company without your permission.

We look forward to seeing your scores! If you have questions about WebXPRT 5 Preview testing or results submission—or you’d like to share feedback on WebXPRT 5—please let us know!

Justin