About a month ago, we posted an update on the CloudXPRT development process. Today, we want to provide more details about the three workloads we plan to offer in the initial preview build:

- In the web-tier microservices workload, a simulated user logs in to a web application that does three things: provides a selection of stock options, performs Monte-Carlo simulations with those stocks, and presents the user with options that may be of interest. The workload reports performance in transactions per second, which testers can use to directly compare IaaS stacks and to evaluate whether any given stack is capable of meeting service-level agreement (SLA) thresholds.

- The machine learning (ML) training workload calculates XGBoost model training time. XGBoost is a gradient-boosting framework that data scientists often use for ML-based regression and classification problems. The purpose of the workload in the context of CloudXPRT is to evaluate how well an IaaS stack enables XGBoost to speed and optimize model training. The workload reports latency and throughput rates. As with the web-tier microservices workload, testers can use this workload’s metrics to compare IaaS stack performance and to evaluate whether any given stack is capable of meeting SLA thresholds.

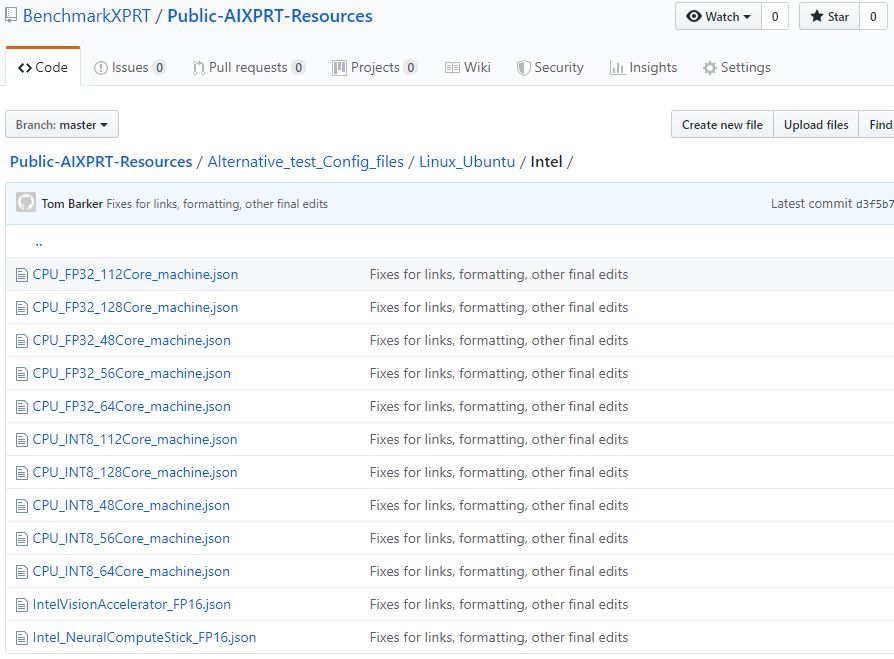

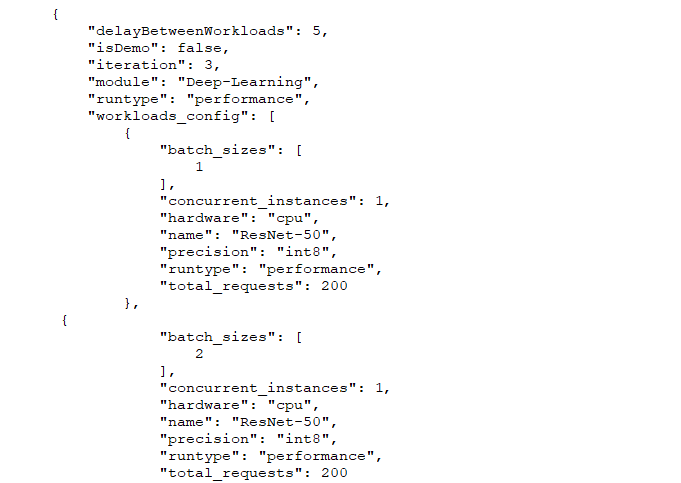

- The AI-themed container scaling workload starts up a container and uses a version of the AIXPRT harness to launch Wide and Deep recommender system inference tasks in the container. Each container represents a fixed amount of work, and as the number of Wide and Deep jobs increases, CloudXPRT launches more containers in parallel to handle the load. The workload reports both the startup time for the containers and the Wide and Deep throughput results. Testers can use this workload to compare container startup time between IaaS stacks; optimize the balance between resource allocation, capacity, and throughput on a given stack; and confirm whether a given stack is suitable for specific SLAs.

We’re continuing to move forward with CloudXPRT development and testing and hope to add more workloads in subsequent builds. Like most organizations, we’ve adjusted our work patterns to adapt to the COVID-19 situation. While this has slowed our progress a bit, we still hope to release the CloudXPRT preview build in April. If anything changes, we’ll let folks know as soon as possible here in the blog.

If you have any thoughts or comments about CloudXPRT workloads, please feel free to contact us.

Justin