The WebXPRT 5 development process is heading into the final stretch, so we’d like to share more information about the workloads you’re likely to see in the WebXPRT 5 Preview release, and when that release may be available. We’re still actively testing candidate builds and studying results from multiple system tests, so some details could change. That said, we’re now close enough to provide a clearer picture of the workload lineup.

Core workloads

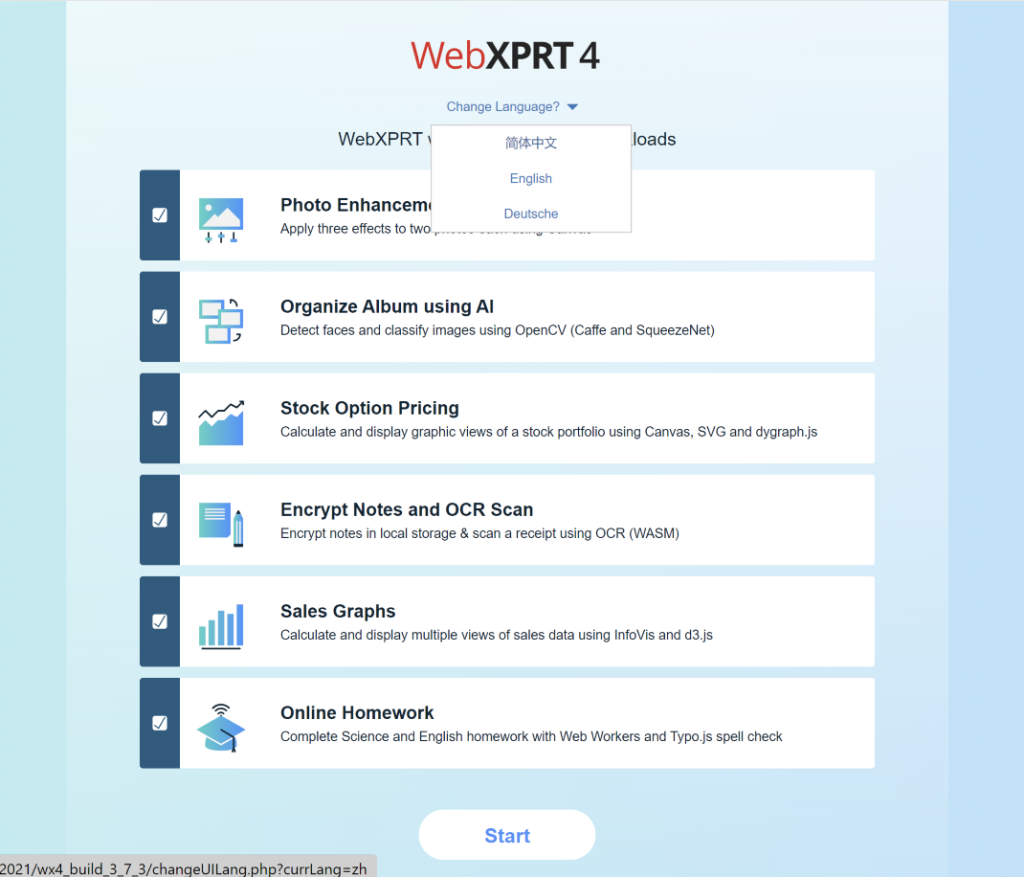

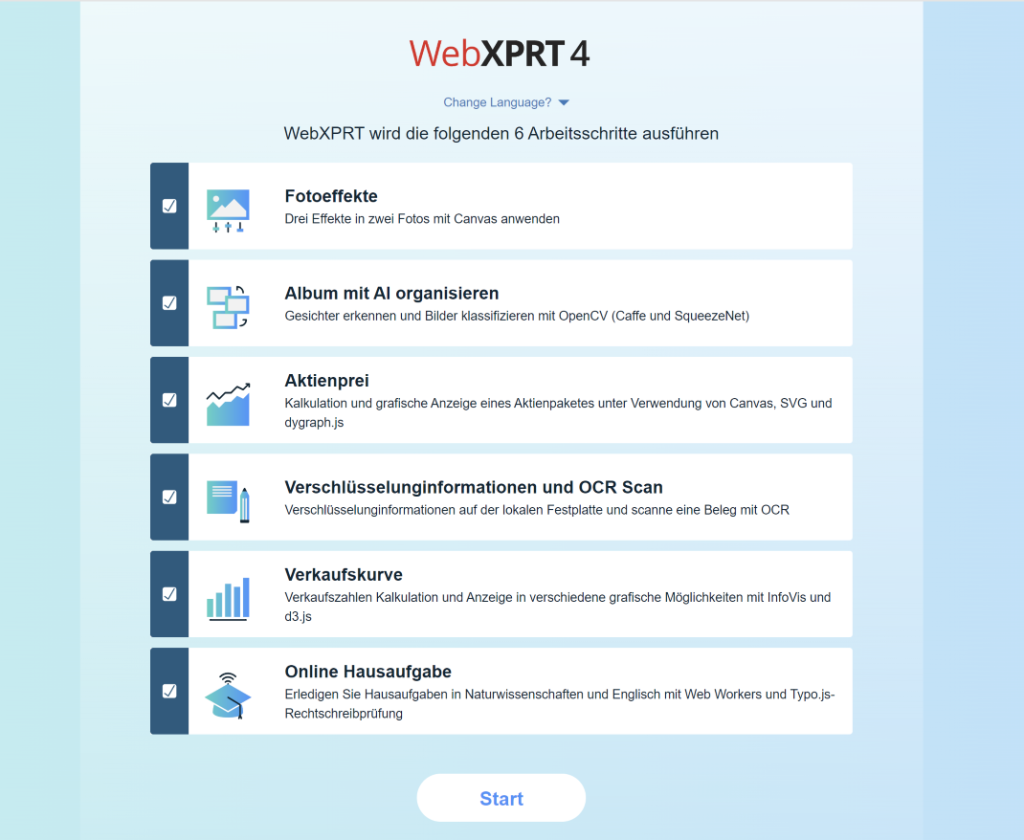

WebXPRT 5 will likely include the following seven workloads:

- Video Background Blur with AI. Blurs the background of a video call using an AI-powered segmentation model.

- Photo Effects. Applies a filter to six photos using the Canvas API.

- Detect Faces with AI. Detects faces and organizes photos in an album using computer vision (OpenCV.js with a Caffe model).

- Image Classification with AI. Labels images in an album using machine learning (OpenCV.js and ML Classify with the SqueezeNet model).

- Document Scan with AI. Scans a document image and converts it to text using ML-based OCR (Wasm with LSTM).

- School Science Project. Processes a DNA sequencing task using regex and string manipulation.

- Homework Spellcheck. Spellchecks a document using Typo.js and Web Workers.
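
As a rough illustration of what regex and string manipulation on DNA data can look like in a browser, here is a minimal TypeScript sketch. It is not taken from the WebXPRT 5 workload itself: the function names (`reverseComplement`, `countMatches`, `gcContent`) and the sample sequence are hypothetical and chosen only for this example.

```typescript
// Hypothetical helpers for illustration only; not WebXPRT code.

// Return the reverse complement of a DNA string (A<->T, C<->G).
// Any character other than A, T, C, or G is mapped to "N".
function reverseComplement(seq: string): string {
  const pairs: Record<string, string> = { A: "T", T: "A", C: "G", G: "C" };
  return seq
    .toUpperCase()
    .split("")
    .reverse()
    .map((base) => pairs[base] || "N")
    .join("");
}

// Count non-overlapping, case-insensitive matches of a regex pattern.
function countMatches(seq: string, pattern: string): number {
  const matches = seq.match(new RegExp(pattern, "gi"));
  return matches ? matches.length : 0;
}

// Fraction of bases in the sequence that are G or C.
function gcContent(seq: string): number {
  if (seq.length === 0) return 0;
  return countMatches(seq, "[GC]") / seq.length;
}

const sample = "ATGCGTTAGGCATG"; // made-up sequence
console.log(reverseComplement(sample));
console.log(countMatches(sample, "ATG"));
console.log(gcContent(sample).toFixed(2));
```

A real benchmark workload would run operations like these over much larger inputs and time them. The sketch only shows the style of string processing involved.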

The sub-scores for each of these tests will contribute to WebXPRT 5’s main overall score. (We’ll discuss scoring in future blog posts.)

Experimental workloads

We’re currently planning to include an experimental workload section, something we’ve long discussed, in WebXPRT 5. Workloads in this section will use cutting-edge browser technologies that may not be compatible with the same broad range of platforms and devices as the technologies in WebXPRT 5’s core workloads. For that reason, we will not include the scores from the experimental section—in the Preview build and future releases—in WebXPRT 5’s main overall score.

In addition, WebXPRT 5’s experimental workloads will be completely optional.

Moving forward, WebXPRT’s experimental workload section will provide users with a straightforward way to learn how well certain browsers or systems handle new browser-based technologies (e.g., new web apps or AI capabilities). We’ll benefit from the ability to offer workloads for large-scale testing and user feedback before committing to including them as core WebXPRT workloads. Because future experimental workloads will run independently of the main test, we can add them without affecting the main WebXPRT score or requiring users to repeat testing to obtain comparable scores. We think it will be a win-win scenario in many respects.

We’re still evaluating whether we can finish the first experimental workload in time to include it in the WebXPRT 5 Preview release, but we will definitely have at least the section and the framework for adding such a workload. When we are confident that an experimental workload is ready to go, we’ll share more information here in the blog, and the framework will already be in place to incorporate it.

Timeline

If all goes well, we hope to publish the WebXPRT 5 Preview very soon, followed by a general release in early 2026. If that timeline changes significantly, we’ll provide an update here in the blog as soon as possible.

What about an “AI score”?

We’re still discussing the concept of a stand-alone WebXPRT 5 “AI score,” and we go back and forth on it. That score would combine WebXPRT’s AI-related sub-scores into a single score for use in AI capability comparisons. Because we’re just now beefing up WebXPRT’s AI capabilities, we’ve decided not to include an AI score for now. We would love your feedback on the concept as we plan WebXPRT’s future. If that’s something that you would be interested in, please let us know!

If you have any questions about the WebXPRT 5 details we’ve shared above, please feel free to ask!

Justin