If you only recently started using the XPRT benchmarks, you may not know about one of the free resources we offer: the XPRT results database. Our results database currently holds more than 3,650 test results from over 150 sources, including global tech press outlets, OEM labs, and independent testers. It serves as a treasure trove of current and historical performance data across all the XPRT benchmarks and hundreds of devices. By comparing these published results with the scores you get when you run the same XPRTs on your own device, you can get a sense of how well your device performs.

We update the results database several times a week, adding selected results from our own internal lab testing, reliable media sources, and end-of-test user submissions. (After you run one of the XPRTs, you can choose to submit the results, but don't worry: submission is opt-in, and your results do not automatically appear in the database.) Before adding a result, we also look at any available system information and evaluate whether the score makes sense and is consistent with general expectations.

There are three primary ways to explore the XPRT results database.

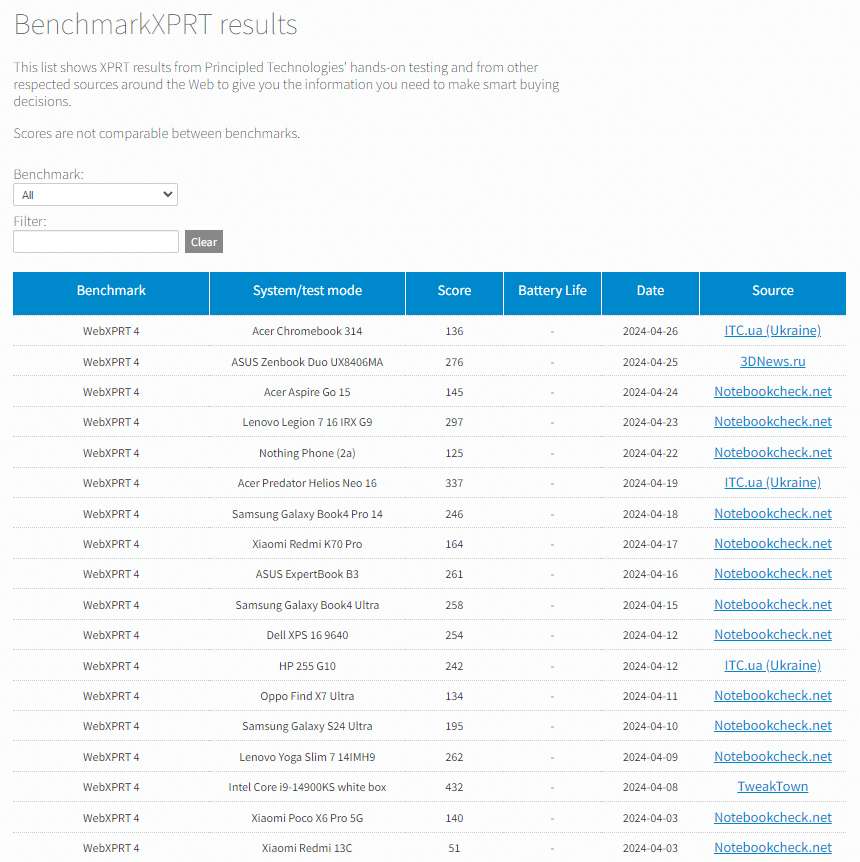

The first is by visiting the main BenchmarkXPRT results browser, which displays result entries for all of the XPRT benchmarks in chronological order (see the screenshot below). You can filter the results by selecting a benchmark from the drop-down menu. You can also type values, such as a vendor name (e.g., Dell) or the name of a tech publication (e.g., PCWorld), into the free-form filter field. For results we've produced in our lab, clicking "PT" in the Source column takes you to a page with additional configuration information for the test system. For sources outside our lab, clicking the source name takes you to the original article or review that contains the result.

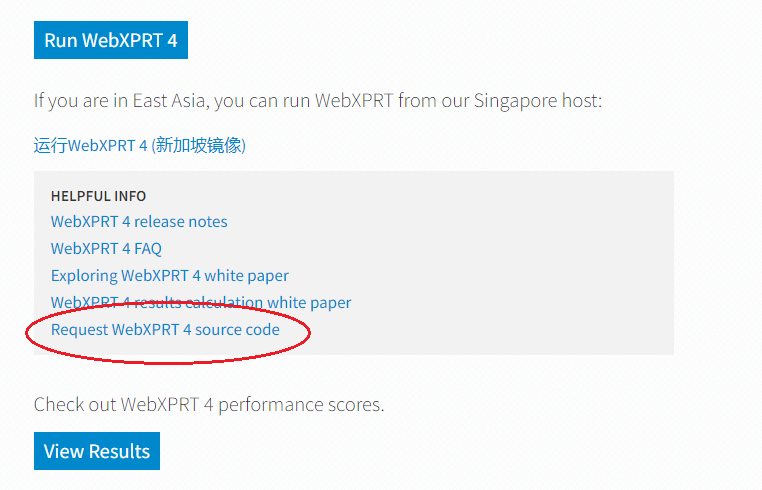

The second way to access our published results is by visiting the results page for an individual XPRT benchmark. Start by going to the site for the benchmark that interests you (e.g., CrXPRT.com) and looking for the blue View Results button. Clicking the button takes you to a page that displays results for only that benchmark. You can use the free-form filter on that page to narrow those results, and you can use the Benchmarks drop-down menu to jump to the results pages for the other XPRTs.

The third way to view our results database is with the WebXPRT 4 results viewer. The viewer is an information-packed, interactive tool for exploring data from the curated set of WebXPRT 4 results we've published on our site. We'll discuss the features of the WebXPRT 4 results viewer in more detail in a future post.

You can use any of these approaches to compare the results of an XPRT on your device with our many published results. We hope you'll take some time to explore the information in our results database and that you find it helpful. If you have ideas for new features or suggestions for improvement, we'd love to hear from you!

Justin