We hope everyone’s 2018 kicked off on a happy note, and you’re starting the year rested, refreshed, and inspired. Here at the XPRTs, we already have a busy slate of activity lined up, and we want to share a quick preview of what community members can expect in the coming months.

Next week, I’ll be travelling to CES 2018 in Las Vegas. CES provides us with a great opportunity to survey emerging tech and industry trends, and I look forward to sharing my thoughts and impressions from the show. If you’re attending this year and would like to meet to discuss any aspect of the XPRTs, let me know.

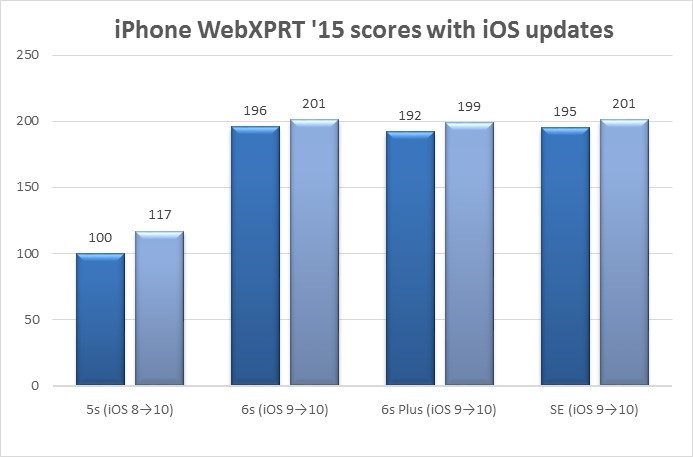

There’s also more WebXPRT news to come. We’re working on several new features for the WebXPRT Processor Comparison Chart that we think will prove useful, and we hope to take the updated chart live very soon. We’re also getting closer to the much-anticipated WebXPRT 3 general release! If you’ve been testing the WebXPRT 3 Community Preview, be sure to send in your feedback soon.

Work on the next version of HDXPRT is progressing as well, and we’ll share more details about UI and workload updates as we get closer to a community preview build.

Last but not least, we’re considering updating TouchXPRT and MobileXPRT later in the year. We look forward to working with the community on improved versions of both benchmarks.

Justin