CloudXPRT is undoubtedly the most complex tool in the XPRT family of benchmarks. To run the cloud-native benchmark’s multiple workloads across different hardware and software platforms, testers need two things: (1) at least a passing familiarity with a wide range of cloud-related toolkits, and (2) an understanding that changing even one test configuration variable can affect test results. While the complexity of CloudXPRT makes it a powerful and flexible tool for measuring application performance on real-world IaaS stacks, it also creates a steep learning curve for new users.

Benchmark setup and configuration can involve a number of complex steps, so the corresponding instructions should be thorough, unambiguous, and easy to follow. For all of the XPRT tools, we strive to publish documentation that provides quick, easy-to-find answers to the questions users might have. Community members have asked us to improve the clarity and readability of the CloudXPRT setup, configuration, and individual workload documentation. In response, we are working to create more, and better, CloudXPRT documentation.

If the benchmark’s complexity intimidates you, know that helping you is one of our highest priorities. In the coming weeks and months, we’ll be evaluating all of our CloudXPRT documentation, particularly from the perspective of new users, and will share more information about the new documentation as it becomes available.

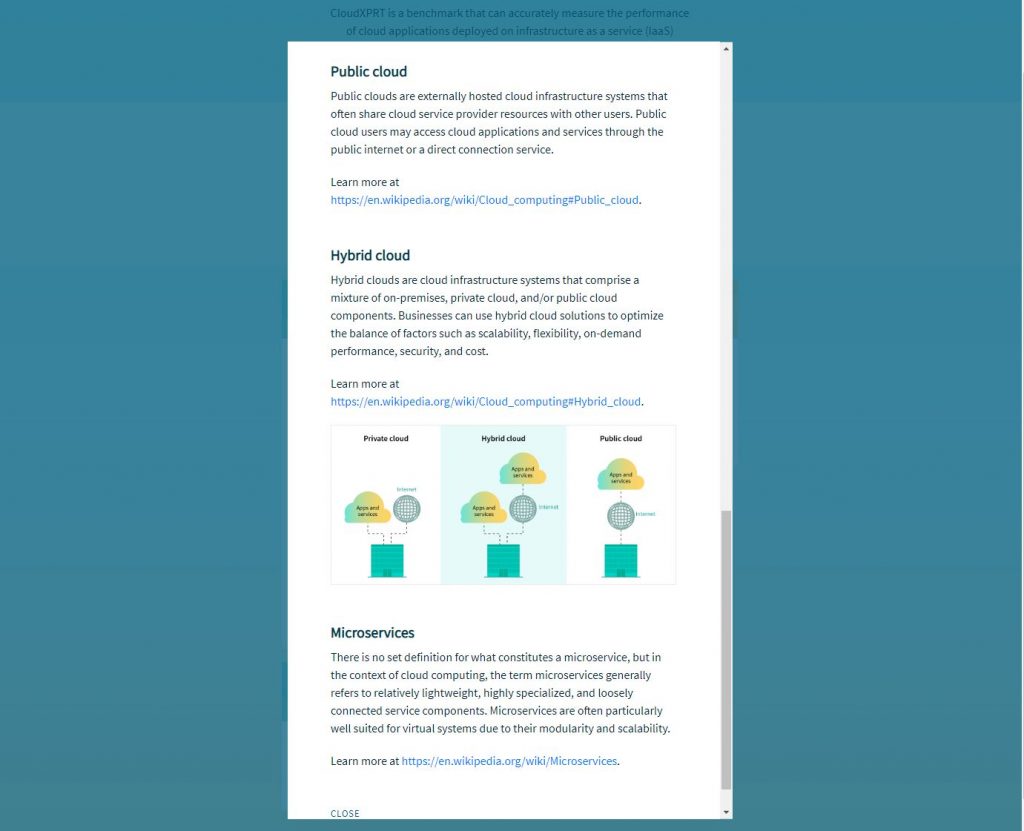

We also want to remind you of some existing CloudXPRT resources. We encourage everyone to check out the Introduction to CloudXPRT and Overview of the CloudXPRT Web Microservices Workload white papers. (Note that we’ll soon be publishing a paper on the benchmark’s data analytics workload.) Also, a couple of weeks ago, we published the CloudXPRT learning tool. We designed it to serve as an information hub for common CloudXPRT topics and questions, and to help tech journalists, OEM lab engineers, and anyone else interested in CloudXPRT find the answers they need as quickly as possible.

Thanks to all who let us know that there was room for improvement in the CloudXPRT documentation. We rely on that kind of feedback and always welcome it. If you have any questions or suggestions regarding CloudXPRT or any of the other XPRTs, please let us know!

Justin