We (Bill and Mark) are on our way home from CES. There were lots of cool things to see, from electric cars to health and fitness wearables to all manner of mobile devices. And more, a whole lot more.

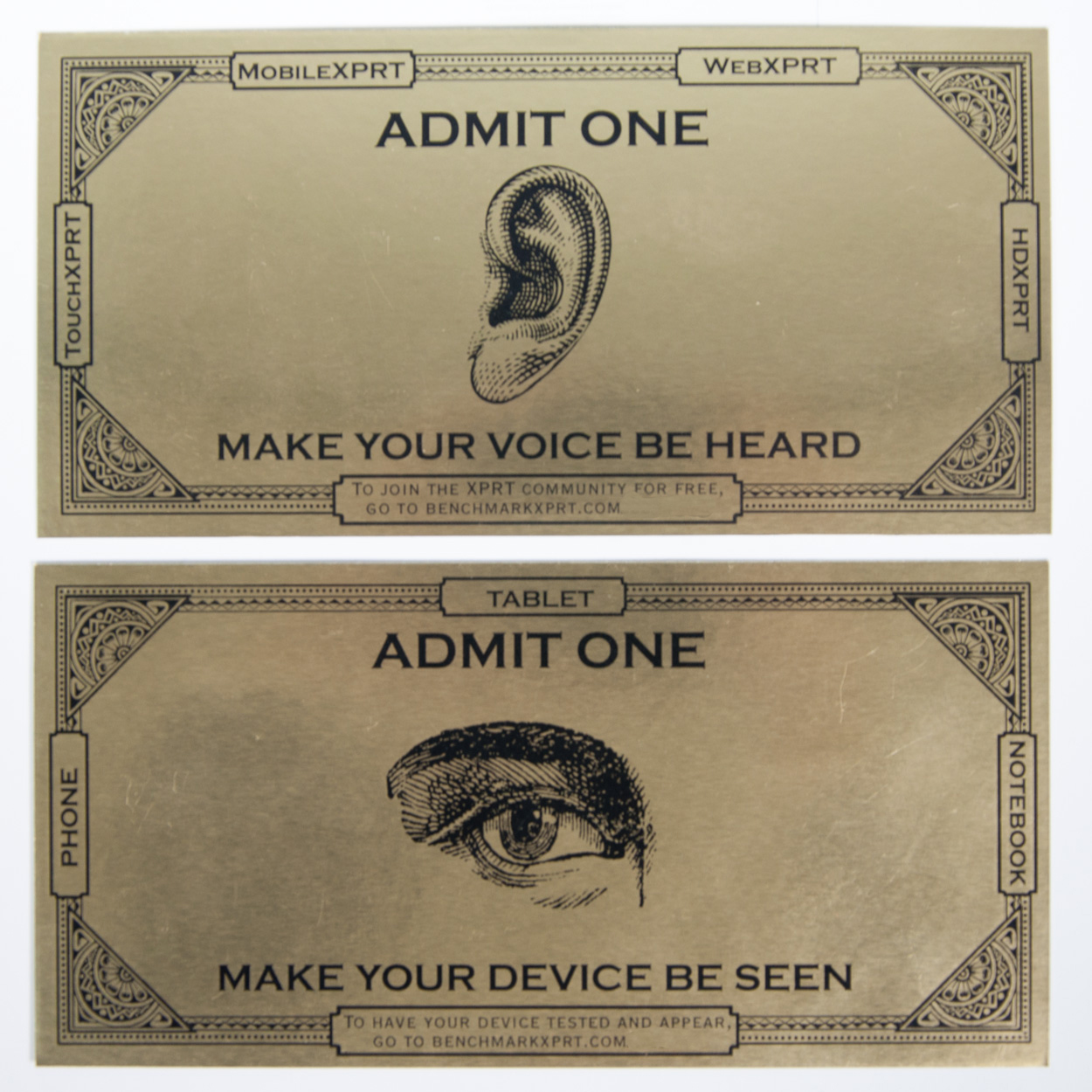

We enjoyed seeing many of those products, but that was not our primary mission at the show. Our main goal was to spread the word about the XPRT benchmarks. We did that by visiting multiple mobile-device members and giving many of them a very special golden ticket. Yes, we’re talking about a physical, Willy Wonka-style golden ticket. The two-sided tickets look really cool.

One side invites folks to be heard by joining the BenchmarkXPRT community. The other offers them the opportunity to have PT test their devices for free with all the applicable XPRT benchmarks. All a vendor has to do to get this free testing is send the device to PT. We hope to get many devices in-house and to publish a great many results on our Web sites.

We wore the new BenchmarkXPRT shirts as we walked the floor.

We will soon be sending one shirt—and one golden ticket—to each member of the community. Please make sure we have your latest mailing address so we can ship those to you.

-Bill & Mark