A couple of months ago, we talked about the senior project we sponsored with North Carolina State University. We asked a small team of students to take a crack at implementing a game that we could use as the basis of a benchmark test.

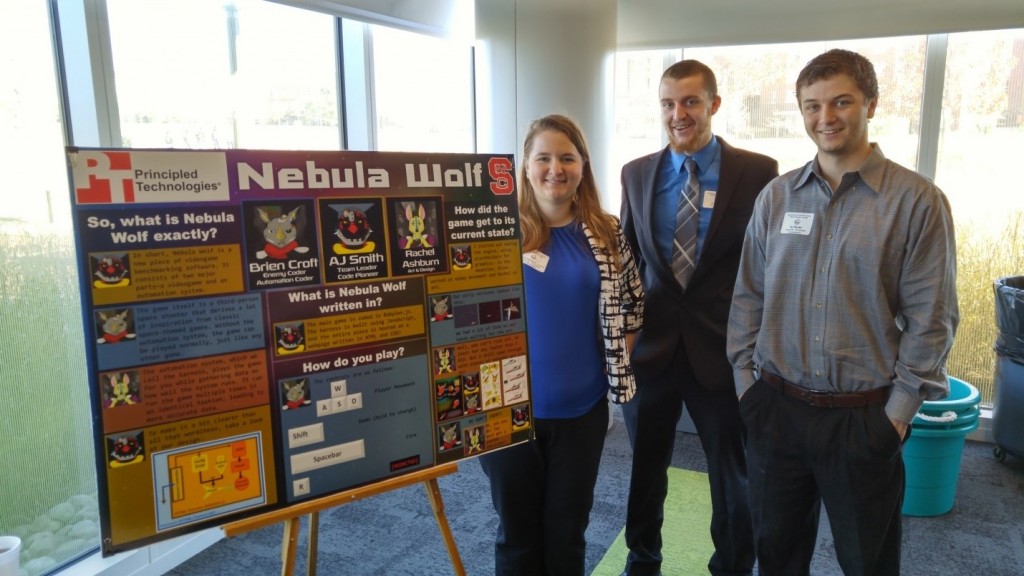

Last Friday, the project culminated with a presentation at the annual Posters and Pies event.

The team gave a great presentation, and I was impressed by how much they accomplished in three months. They implemented a game they called Nebula Wolf (NC State's mascot is a wolf). It's a space-themed rail shooter. You can play the game or click a button to run a script for benchmarking purposes. In the scripted mode, Nebula Wolf unlocks the frame rate so the device can run at full speed.

Over the next couple of weeks, we're going to be testing Nebula Wolf, digging into the code, and getting a deeper understanding of what the team did. We're hoping to make the game available on our website soon.

Tomorrow, AJ, Brien, and Rachel will present one last time, here at PT. It’s been a real pleasure working with them. I wish them all good luck as they finish college and start their careers.

Eric