This year’s CES features a familiar cast of characters: gigantic, super-thin 8K screens; plenty of signage promising the arrival of 5G; robots of all shapes, sizes, and levels of competency; and acres of personal grooming products that you can pair with your phone. In all seriousness, however, one main question keeps coming to mind as I walk the floor: Are we approaching the tipping point where AI truly starts to affect most people in meaningful ways on a daily basis? I think we’re still a couple of years away from ubiquitous AI, but it’s the heartbeat of this year’s show, and it’s going to play a part in almost everything we do in the very near future. AI applications at this year’s show include manufacturing, transportation, energy, medicine, education, photography, communications, farming, grocery shopping, fitness, sports, defense, and entertainment, just to name a few. The AI revolution is just starting, but once it gets going, AI will continually reshape society for decades to come. This year’s show reinforces our decision to explore the roles that the XPRTs, beginning with AIXPRT, can play in the AI revolution.

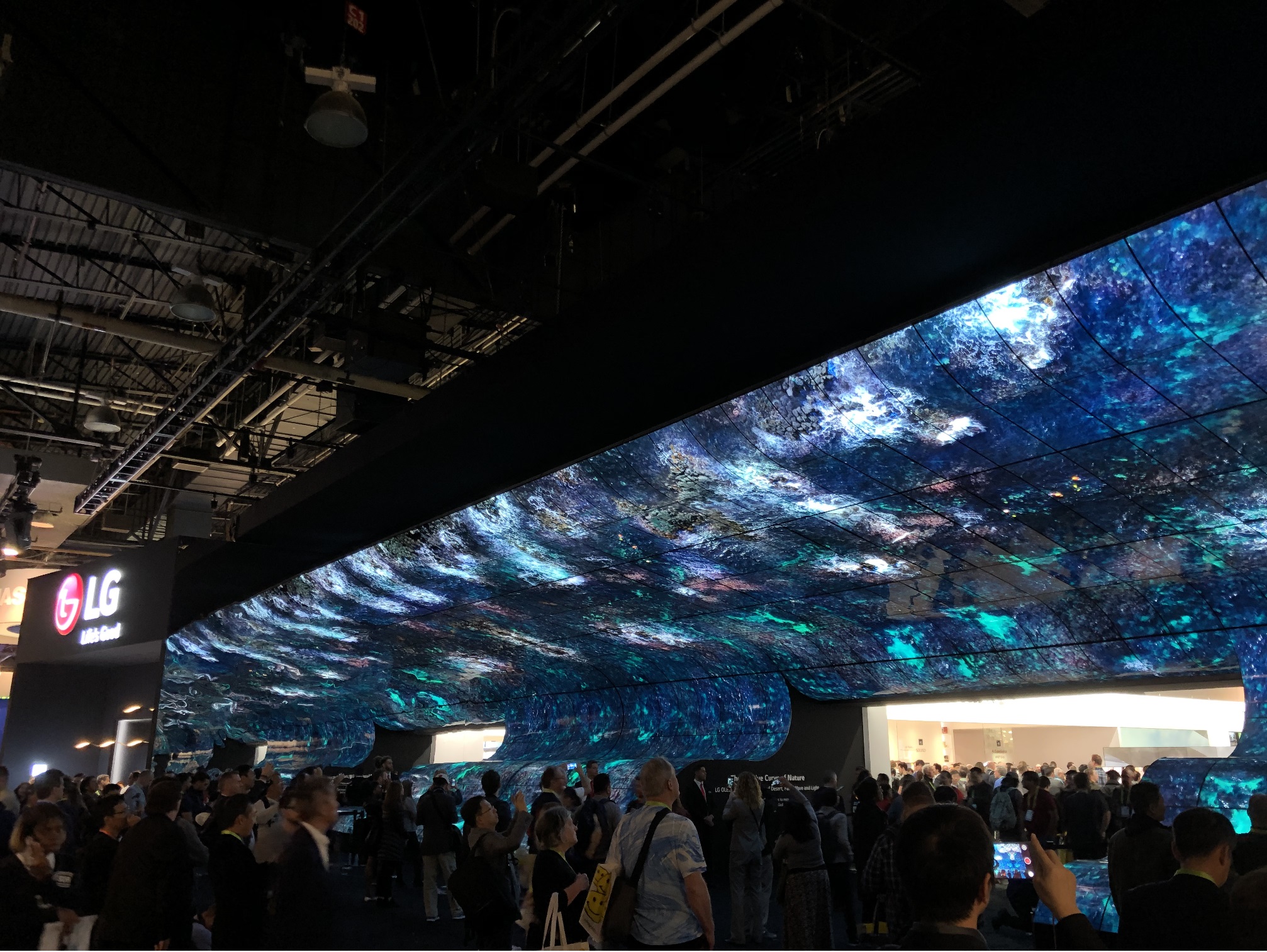

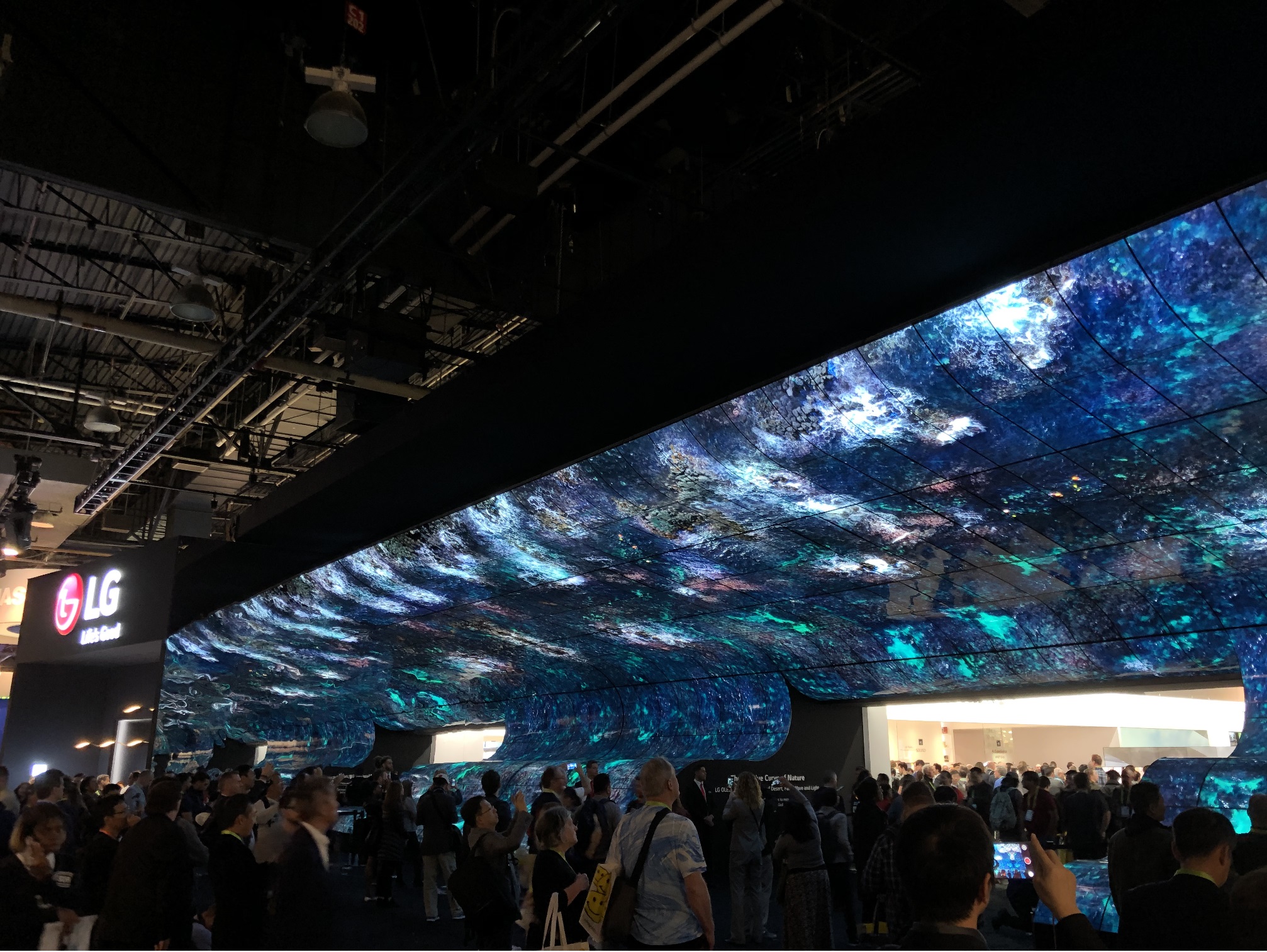

Now for the fun stuff. Here’s a peek at a couple of my favorite displays so far. As is often the case, the most awe-inducing displays at CES are those that overwhelm attendees with light and sound. LG’s enormous curved OLED wall, dubbed the Massive Curve of Nature, was truly something to behold.

Another big draw has been Bell’s Nexus prototype, a hybrid-electric VTOL (vertical takeoff and landing) air taxi. Some journalists can’t resist calling it a flying car, but I refuse to do so, because it has nothing in common with cars apart from the fact that people sit in it and use it to travel from place to place. As Elon Musk once said of an earlier, but similar, concept, “it’s just a helicopter in helicopter’s clothing.” Semantics aside, it’s intriguing to imagine urban environments full of nimble aircraft that are quieter, easier to fly, and more energy efficient than traditional helicopters, especially if they’re paired with autonomous driving technologies.

Finally, quite a few companies are displaying props that put some of the “reality” back into “virtual reality.” Driving and flight simulators with a full range of motion that are small enough to fit in someone’s basement or game room, full-body VR suits that control your temperature and deliver electrical stimulus based on gameplay (yikes!), and portable roller-coaster-like VR rides are just a few of the attractions.

It’s been a fascinating show so far!

Justin