More people around the world are using WebXPRT 4 now than ever before. We're excited by that growth, which also means that many people are visiting our site and learning about the XPRTs for the first time. Because new visitors may not know how the XPRT family of benchmarks differs from other benchmarking efforts, we occasionally like to use the blog to revisit the core values of our open development community and show how those values translate into more free resources for you.

One of our primary values is transparency in all our benchmark development and testing processes. We share information about our progress with XPRT users throughout the development process, and we invite people to contribute ideas and feedback along the way. We also publish both the source code of our benchmarks and detailed information about how they work, unlike benchmarks that use a “black box” model.

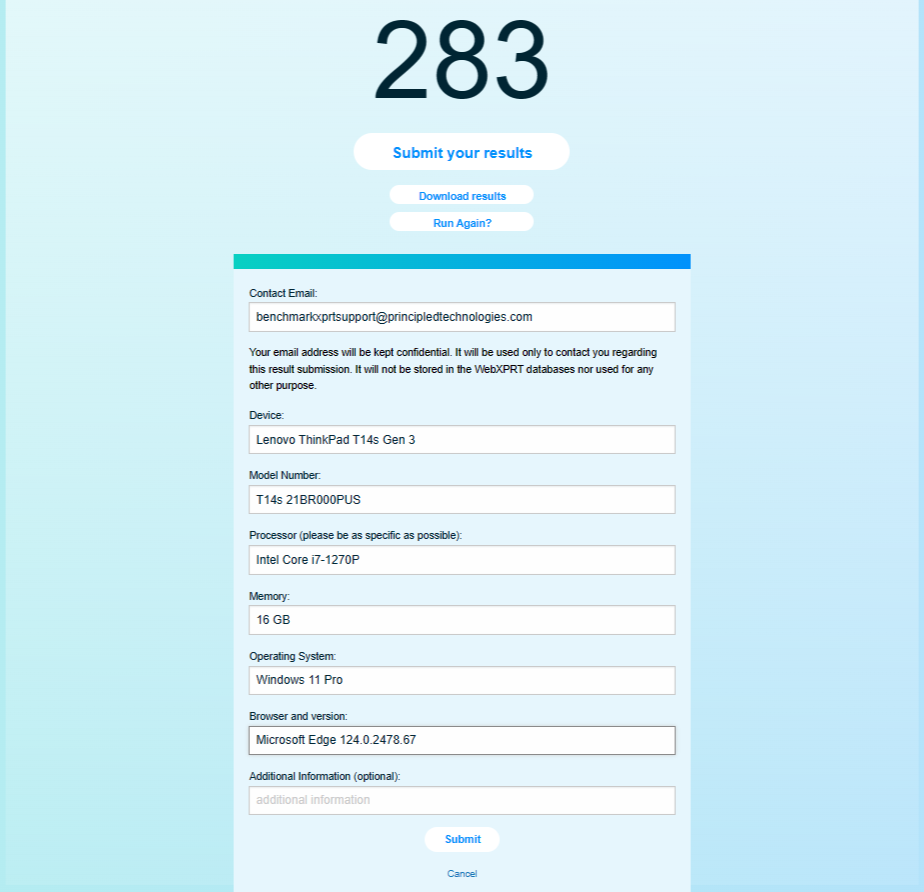

For WebXPRT 4 users who want to know more about the nuts and bolts of the benchmark, we offer several information-packed resources. Today we're focusing on one of them: the WebXPRT 4 results calculation and confidence interval white paper. The white paper explains the WebXPRT 4 confidence interval, how it differs from typical benchmark variability, and the formulas the benchmark uses to calculate the individual workload scenario scores and the overall score shown on the end-of-test results screen. The paper also provides an overview of the statistical methodology that WebXPRT uses to translate raw timings into scores.

To complement the white paper's discussion of the results calculation process, we've provided a results calculation spreadsheet that shows the raw data from a sample test run and reproduces the calculations WebXPRT uses to generate both the workload scores and the overall score.
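The white paper and spreadsheet document WebXPRT's exact formulas, so we won't restate them here. For readers new to the underlying ideas, though, here is a minimal Python sketch of two general techniques: a Student's t-based 95% confidence interval over repeated timings, and a geometric mean that combines several scores into a single number. This is an illustration under stated assumptions, not WebXPRT's implementation. Whether and how WebXPRT 4 uses either technique is not claimed here, and the function names and sample timings are hypothetical.

```python
import math
import statistics

# Two-sided 95% Student's t critical values, keyed by degrees of freedom (n - 1).
T_95 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
        6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262}


def confidence_interval_95(samples):
    """Return (mean, half_width) of a t-based 95% confidence interval.

    Hypothetical helper for illustration only; not WebXPRT's formula.
    """
    n = len(samples)
    if n - 1 not in T_95:
        raise ValueError("this sketch only supports 2 to 10 samples")
    mean = statistics.mean(samples)
    # Standard error of the mean: sample standard deviation / sqrt(n).
    sem = statistics.stdev(samples) / math.sqrt(n)
    return mean, T_95[n - 1] * sem


def combine_scores(scores):
    """Combine per-workload scores with a geometric mean.

    Assumption: shown as one common aggregation method, not necessarily
    the one WebXPRT 4 uses.
    """
    return statistics.geometric_mean(scores)


# Hypothetical timings (ms) from five repeated runs of one workload.
timings = [512.0, 498.0, 505.0, 520.0, 490.0]
mean, half_width = confidence_interval_95(timings)
print(f"mean {mean:.1f} ms, 95% CI +/- {half_width:.1f} ms")
print(combine_scores([100.0, 400.0]))
```

A narrower interval means the repeated runs agreed more closely, which is why a confidence interval is a useful companion to a single reported score. A geometric mean is often chosen for combining scores because a given percentage change in any one input moves the result by the same proportion, regardless of that input's scale.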

In future versions of WebXPRT, we'll likely continue to use the same or very similar statistical methodologies and results calculation formulas that we've documented in the white paper and spreadsheet. That said, if you have suggestions for how we could improve those methods or formulas, in part or in whole, please don't hesitate to contact us. We're interested in hearing your ideas!

The white paper is available on WebXPRT.com and on our XPRT white papers page. If you have any questions about the paper or spreadsheet, WebXPRT, or the XPRTs in general, please let us know.

Justin