A few weeks ago, we discussed how AIXPRT testers can adjust the key variables of batch size, levels of precision, and number of concurrent instances by editing the JSON test configuration file in the AIXPRT/Config directory. In addition to those key variables, there is another variable in the config file called “total_requests” that has a different default setting depending on the AIXPRT test package you choose. This setting can significantly affect a test run, so it’s important for testers to know how it works.

The total_requests variable specifies how many inference requests AIXPRT will send to a network (e.g., ResNet-50) during one test iteration at a given batch size (e.g., Batch 1, 2, 4, etc.). This simulates the inference demand that the end users place on the system. Because we designed AIXPRT to run on different types of hardware, it makes sense to set the default number of requests for each test package to suit the most likely hardware environment for that package.

For example, testing with OpenVINO on Windows aligns more closely with a consumer (i.e., desktop or laptop) scenario than testing with OpenVINO on Ubuntu, which is more typical of server/datacenter testing. Desktop testers require a much lower inference demand than server testers, so the default total_requests settings for the two packages reflect that. The default for the OpenVINO/Windows package is 500, while the default for the OpenVINO/Ubuntu package is 5,000.

Also, setting the number of requests so low that a system finishes each workload in less than 1 second can produce high run-to-run variation, so our default settings represent a lower boundary that will work well for common test scenarios.

Below, we provide the current default total_requests setting for each AIXPRT test package:

- MXNet: 1,000

- OpenVINO Ubuntu: 5,000

- OpenVINO Windows: 500

- TensorFlow Ubuntu: 100

- TensorFlow Windows: 10

- TensorRT Ubuntu: 5,000

- TensorRT Windows: 500

Testers can adjust these variables in the config file according to their own needs. Finding the optimal combination of machine learning variables for each scenario is often a matter of trial and error, and the default settings represent what we think is a reasonable starting point for each test package.

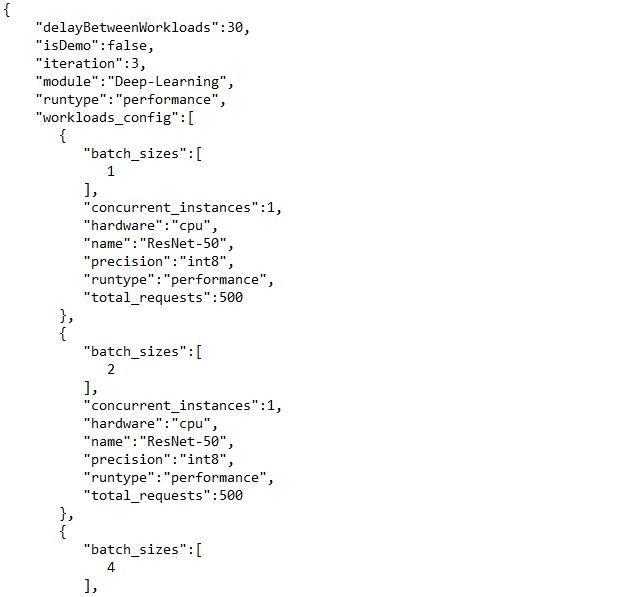

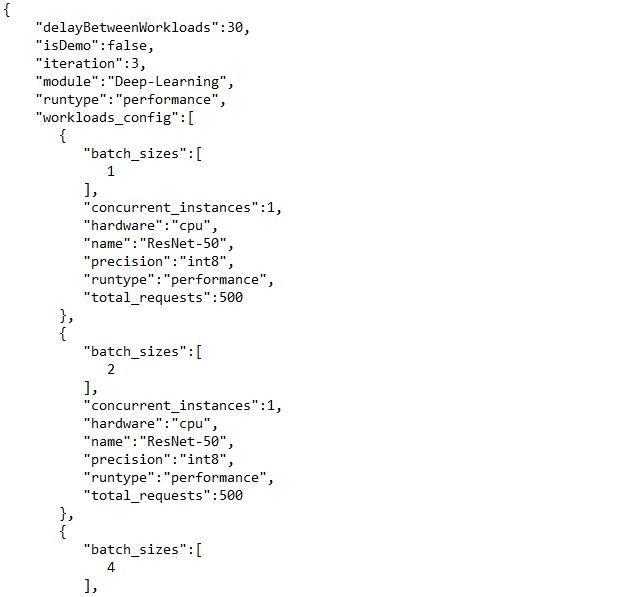

To adjust the total_requests setting, start by locating and opening the JSON test configuration file in the AIXPRT/Config directory. Below, we show a section of the default config file (CPU_INT8.json) for the OpenVINO-Windows test package (AIXPRT_1.0_OpenVINO_Windows.zip). For each batch size, the total_requests setting appears at the bottom of the list of configurable variables. In this case, the default setting Is 500. Change the total_requests numerical value for each batch size in the config file, save your changes, and close the file.

Note that if you are running multiple concurrent instances, OpenVINO and TensorRT automatically distribute the number of requests among the instances. MXNet and TensorFlow users must manually allocate the instances in the config file. You can find an example of how to structure manual allocation here. We hope to make this process automatic for all toolkits in a future update.

We hope this information helps you understand the total_requests setting, and why the default values differ from one test package to another. If you have any questions or comments about this or other aspects of AIXPRT, please let us know.

Justin

![]()