Bidirectional Encoder Representations from Transformers (BERT) is a deep learning model for natural language processing (NLP). BERT analyzes large amounts of text to make predictions, answer questions, and power conversational applications. Many organizations run these deep learning workloads. For them, better BERT performance can deliver faster insights from text data, which could lead to higher customer satisfaction, better service, and increased revenue.

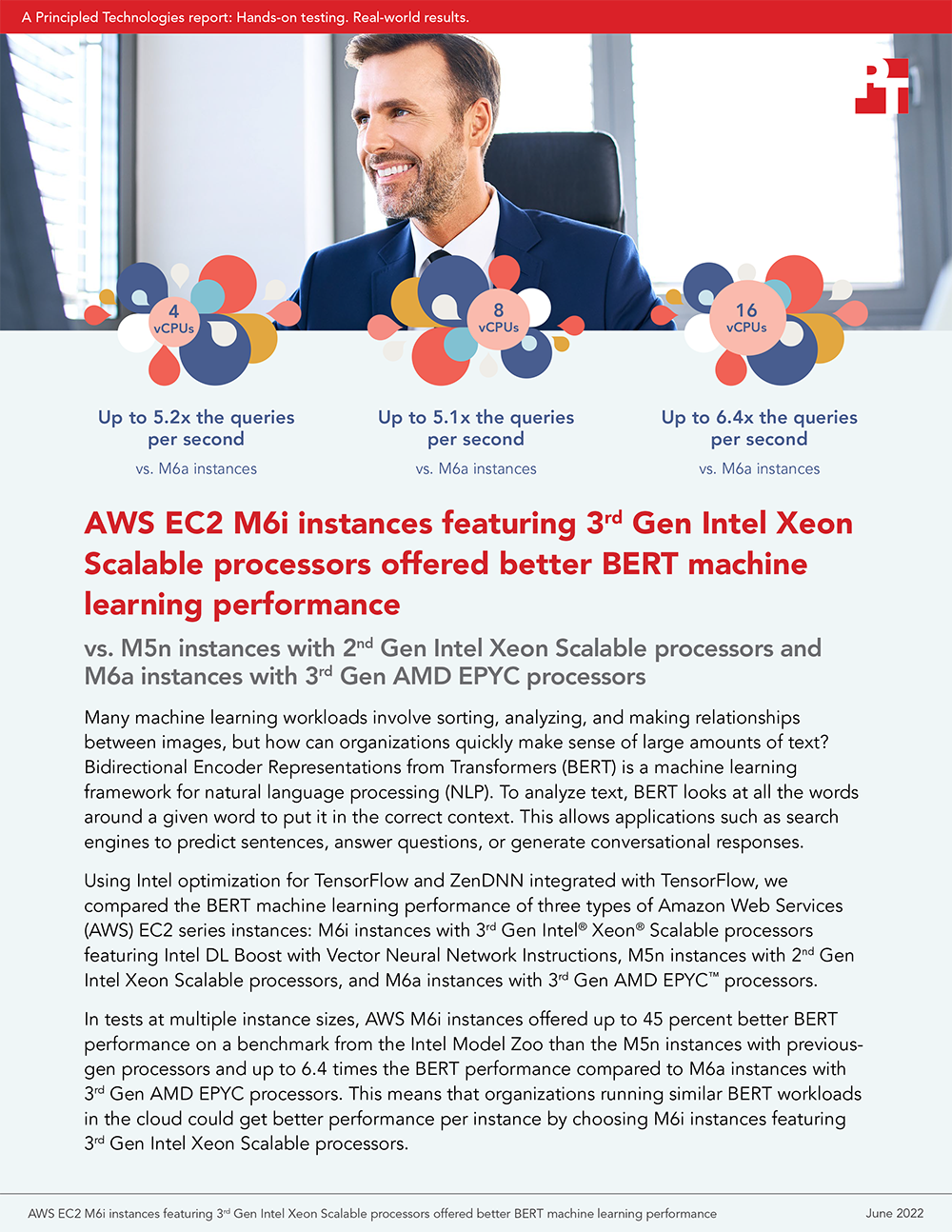

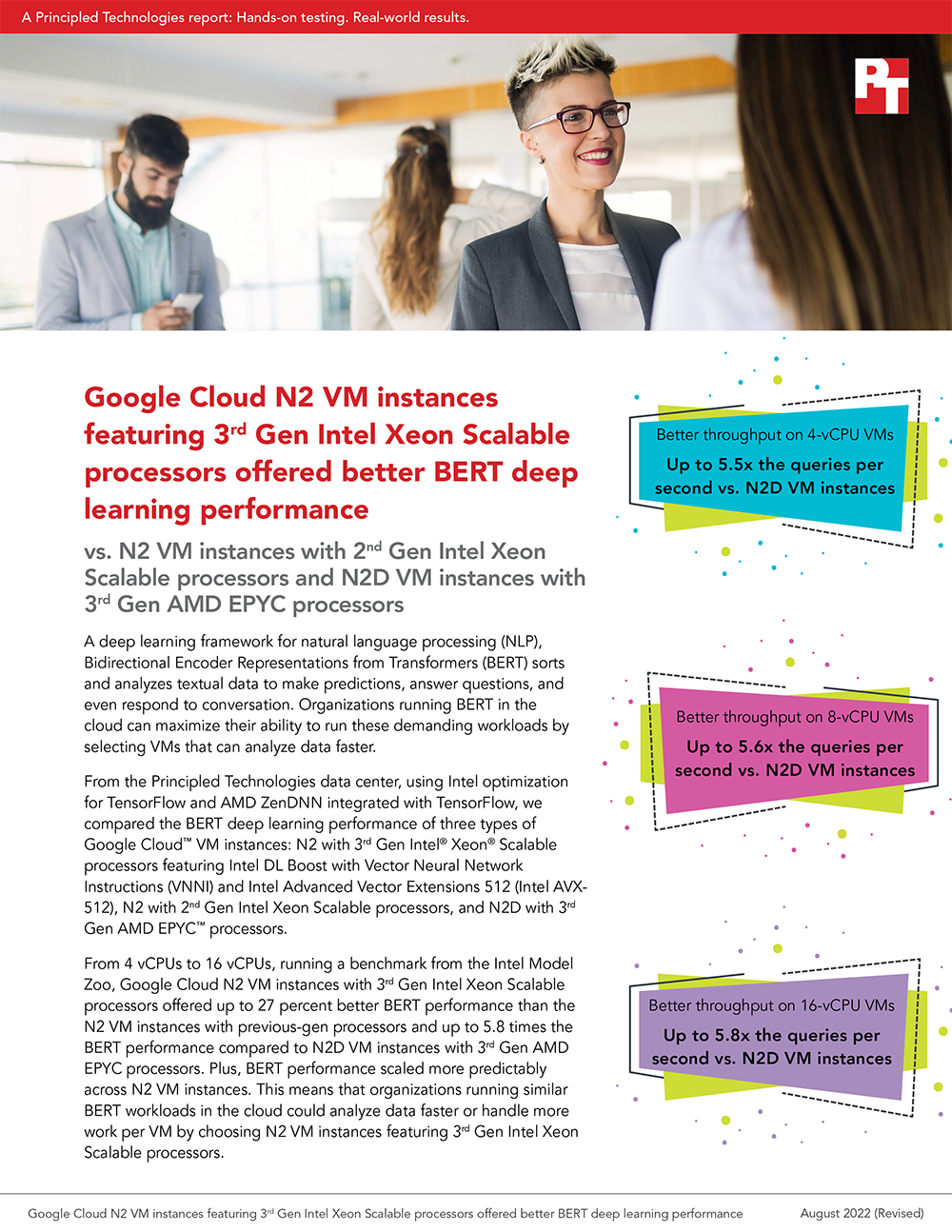

We ran two studies of machine learning performance in the cloud, using a BERT benchmark from the Intel Model Zoo. In each study, we ran machine learning workloads on cloud instances powered by 3rd Gen Intel Xeon Scalable processors (AWS EC2 M6i and Google Cloud N2 instances). We compared them with similar instances from the same cloud service provider (CSP) powered by either previous-generation Intel Xeon processors or 3rd Gen AMD EPYC processors. In both studies, the instances powered by 3rd Gen Intel Xeon Scalable processors outperformed the others.

To learn which type of instance can deliver the BERT performance you need, see our studies.

Principled Technologies is more than a name: Those two words power all we do. Our principles are our north star, determining the way we work with you, treat our staff, and run our business. And in every area, technologies drive our business, inspire us to innovate, and remind us that new approaches are always possible.