AI is reaching far beyond the tech sector, transforming workflows and business models in almost every major industry worldwide. Large language model (LLM) tools are proving especially useful for many businesses, helping them expand automation, accelerate content creation, and improve data analysis and customer outcomes. For many companies interested in launching an LLM application, an in-house solution augmented with their own data may be the way to go. An internal LLM can deliver the benefits of AI tools while offering the better data security and potential cost savings of a private, customized solution.

To set up an in-house LLM app, organizations typically need to feed large amounts of proprietary information into supporting databases, which are usually vector-searchable. Data ingestion is often resource-intensive, so it’s essential to have server hardware that’s up to the task. While the need for powerful hardware is clear, selecting a server that can handle LLM demands without expensive overprovisioning is a separate challenge. Latest-generation Dell PowerEdge servers can be an excellent choice for AI ingestion projects, but decision makers need objective performance data showing which model and processor configurations strike that critical balance between power and cost.

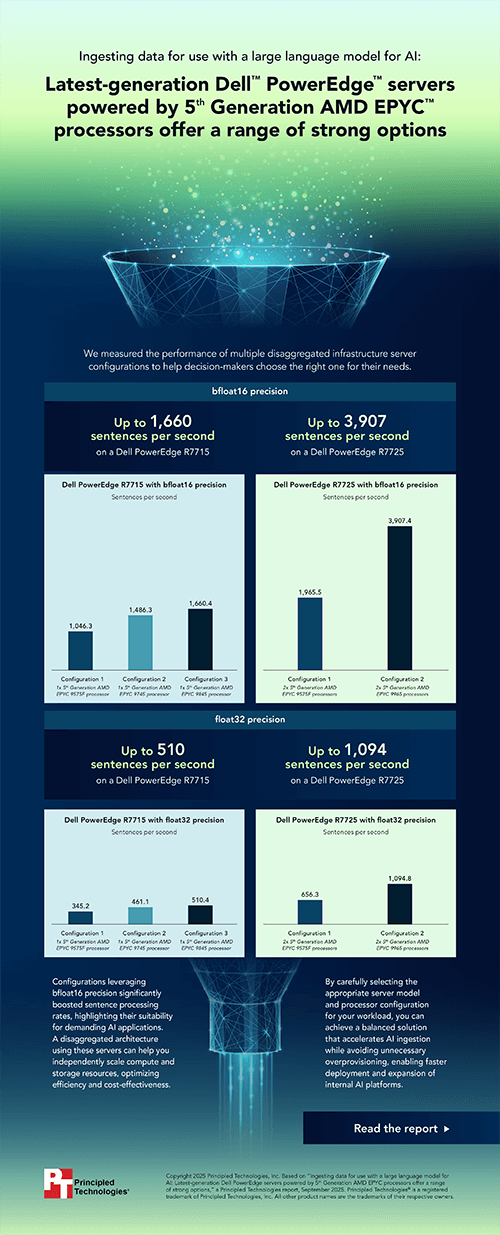

To provide such data, we evaluated the data ingestion capabilities of two latest-generation Dell servers, a PowerEdge R7715 and a PowerEdge R7725, equipped with various 5th Generation AMD EPYC processors. Specifically, we measured ingestion speed on each system at float32 and bfloat16 levels of precision using a metric called sentences per second, which indicates how many text inputs an LLM can convert each second.
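For readers who want a feel for the metric, here is a minimal sketch of how a sentences-per-second figure could be computed. It is not the harness used in our testing: `measure_sentences_per_second` and `toy_embed_batch` are hypothetical names, and the toy function stands in for a real model, so the number it prints says nothing about any server. In a real run, the float32 or bfloat16 setting would be applied to the model itself, which this sketch does not attempt to represent.

```python
import time
from typing import Callable, List, Sequence


def measure_sentences_per_second(
    embed_batch: Callable[[Sequence[str]], List[List[float]]],
    sentences: Sequence[str],
    batch_size: int = 32,
) -> float:
    """Return throughput as sentences converted per second of wall-clock time."""
    if not sentences:
        raise ValueError("need at least one sentence to measure throughput")
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")

    converted = 0
    start = time.perf_counter()
    # Feed the corpus to the model in fixed-size batches and count the outputs.
    for i in range(0, len(sentences), batch_size):
        vectors = embed_batch(sentences[i:i + batch_size])
        converted += len(vectors)
    elapsed = time.perf_counter() - start

    if elapsed <= 0:
        raise RuntimeError("elapsed time too small to measure; use a larger corpus")
    return converted / elapsed


def toy_embed_batch(batch: Sequence[str]) -> List[List[float]]:
    # Hypothetical stand-in for a real model: one tiny "vector" per sentence.
    return [[float(len(s)), float(sum(map(ord, s)) % 97)] for s in batch]


# Illustrative corpus; a real test would use representative documents.
corpus = [f"Example sentence number {n}." for n in range(10_000)]
rate = measure_sentences_per_second(toy_embed_batch, corpus, batch_size=64)
print(f"{rate:,.0f} sentences per second")
```

Passing the model in as a callable keeps the timing loop identical across configurations, so only the model or its precision setting changes between runs.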

In our tests, the PowerEdge R7725 and R7715 servers demonstrated strong performance when ingesting data into LLM-supporting databases. Configurations that used bfloat16 precision processed sentences significantly faster, with the dual-socket PowerEdge R7725 configurations delivering up to 3,907 sentences per second.
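To put a rate like that in planning terms, the short sketch below turns a throughput figure into an estimated ingestion time. The 3,907 figure is the peak rate quoted above; the corpus size is a made-up example and `ingestion_time_seconds` is a hypothetical helper. Because it uses a peak rate, the result is best read as an optimistic lower bound.

```python
def ingestion_time_seconds(total_sentences: int, sentences_per_second: float) -> float:
    """Estimate wall-clock seconds to ingest a corpus at a steady rate."""
    if sentences_per_second <= 0:
        raise ValueError("sentences_per_second must be positive")
    return total_sentences / sentences_per_second


PEAK_RATE = 3907                  # sentences per second, peak figure quoted in this post
HYPOTHETICAL_CORPUS = 10_000_000  # assumed corpus size, for illustration only

seconds = ingestion_time_seconds(HYPOTHETICAL_CORPUS, PEAK_RATE)
print(f"About {seconds / 60:.1f} minutes at the peak rate")
```

Swapping in a measured rate for your own workload and configuration gives a more realistic estimate than the peak figure.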

By carefully selecting the appropriate server and processor configuration for their workload needs, companies can achieve a balanced solution that accelerates AI data ingestion while avoiding costly overprovisioning, giving them a faster path to the potential benefits of in-house AI tools.

To learn more about how we evaluated the data ingestion capabilities of latest-gen Dell PowerEdge R7715 and R7725 servers with 5th Gen AMD EPYC processors, check out the report and infographic below.

Principled Technologies is more than a name: Those two words power all we do. Our principles are our north star, determining the way we work with you, treat our staff, and run our business. And in every area, technologies drive our business, inspire us to innovate, and remind us that new approaches are always possible.